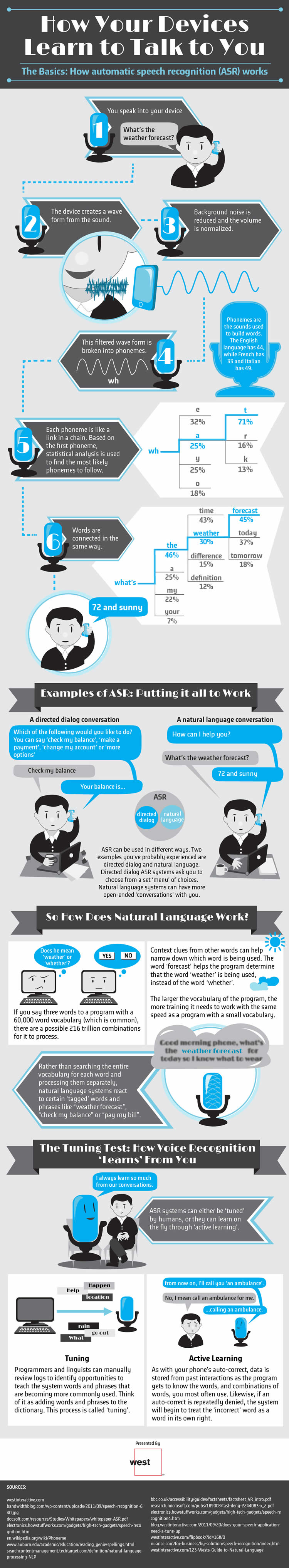

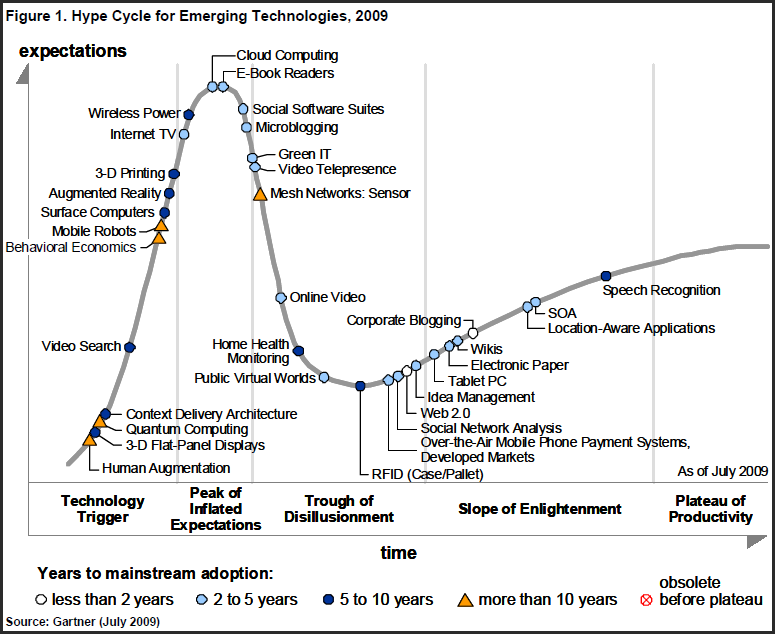

The prize for developing a successful speech recognition technology is enormous. Speech is the quickest and most efficient way for humans to communicate. Speech recognition has the potential of replacing writing, typing, keyboard entry, and the electronic control provided by switches and knobs. It just needs to work a little better to become accepted by the commercial marketplace. Progress in speech recognition will likely come from the areas of artificial intelligence and neural networks as much as through DSP itself. Don't think of this as a technical difficulty; think of it as a technical opportunity.

~ Steven W. Smith, The Scientist and Engineer's Guide to Digital Signal Processing

Automatic Writing, Robert Ashley 1979

(from Wikipedia)

"Automatic Writing" is a piece that took five years to complete and was released by Lovely Music Ltd. in 1979. Ashley used the involuntary speech that results from his mild form of Tourette's Syndrome as one of the voices in the music. This was considered a very different way of composing and producing music. Ashley stated that he wondered, since Tourette's Syndrome had to do with "sound-making and because the manifestation of the syndrome seemed so much like a primitive form of composing whether the syndrome was connected in some way to his obvious tendencies as a composer".[25]

Ashley was intrigued by his involuntary speech and by the idea of composing music that was unconscious. Because the speech that resulted from Tourette's could not be controlled, it differed from music, which is produced deliberately and consciously; music that is performed is "doubly deliberate", according to Ashley.[25] Although there seemed to be a connection between involuntary speech and music, the two differed in that one was unconscious and the other conscious.

Ashley's first attempts at recording his involuntary speech were not successful, because he found that he ended up performing the speech rather than letting it be natural and unconscious. "The performances were largely imitations of involuntary speech with only a few moments here and there of loss of control".[25] However, he was later able to set up a recording studio at Mills College one summer when the campus was mostly deserted, and record 48 minutes of involuntary speech. This was the first of four "characters" that Ashley had envisioned telling a story in what he viewed as an opera. The other three characters were a French voice translation of the speech, Moog synthesizer articulations, and background organ harmonies. "The piece was Ashley's first extended attempt to find a new form of musical storytelling using the English language. It was opera in the Robert Ashley way".[1]

Harpy Speech Recognition System (1976)

A result of the DARPA-funded "Speech Understanding Research" programme, begun in 1971, whose participants included IBM, Carnegie Mellon University and Stanford Research Institute.