Background

Twitch_Plays_Max (2020) is an internet sound installation created as part of Roots of the Future, a collaborative project between six composition students of the Royal Conservatoire and five scenography students from the Academy of Theatre and Dance of Amsterdam.7 The project was also going to feature the Amici trio (harp, double bass and percussion), but the coronavirus pandemic made this impossible. The project became something of a turning point in my artistic practice. As stated before, I had become disillusioned with score writing and no longer wanted to engage with this medium. I had started experimenting with other ways of transmitting my ideas to musicians, and part of this process involved reconsidering what could be used as an instrument.

Because there were more composers than scenographers, I ended up working solo and was tasked with creating “interlude music”, that is, music to be played during the stage changes between the pieces resulting from the composer/scenographer collaborations. Each interlude would last three to five minutes. Though sceptical at first, I intended to make the best of this assignment. I had recently bought a second-hand Nintendo Switch, the latest in Nintendo's line of game consoles, and discovered that its controllers (called Joy-Con) could be connected to a computer via Bluetooth. I knew immediately that I wanted to use them as interfaces for the musicians. Their only drawback is that the gyroscope and accelerometer data cannot be accessed without hacking the Joy-Con itself. I then began experimenting with the controllers: surveying what control data I could get from them, building sound engines in Max/MSP and sketching possible mappings between the controllers and the sound engines. The main idea of the piece became associating “interlude music” with leisure time and exploring performance downtime as playtime. This would give the musicians an opportunity to unwind between pieces by interacting with a playful, improvisatory system.

On Sunday 15 March 2020, Mark Rutte announced that the Netherlands would begin its smart lockdown, the first of many seemingly carefully designed sets of restrictions meant to hinder the spread of the coronavirus while also catering to typical Dutch liberal values of personal freedom, no matter the cost. During this televised address, I was drinking beer with some Portuguese friends at the Delirium Café in Amsterdam, near the Muziekgebouw. The bartender told us that the establishment had to close early, and we moved from the nonchalant atmosphere of a pub to a cosy but, in this case, almost dystopian domestic space.

This situation brought my "interlude-leisure music" project to a complete stop. Since we could no longer meet in person, we started planning a fully online concert. One of my first ideas was to adapt the Joy-Con piece by playing Mario Kart DS (the first Mario Kart game I ever played) myself on a Nintendo DS emulator, accompanied by a live-generated soundtrack. I scrapped this in favour of a piece inspired by Twitch Plays Pokémon, a somewhat uncanny online event from 2014. In this event, an anonymous person hosted a round-the-clock Twitch livestream of a game of Pokémon Red. Viewers could type commands in the chat to play the game remotely. These commands corresponded to the buttons of the original Game Boy: Left, Right, Up, Down, A, B, Start and Select.

Living in a quarantined world, I found that the concept of this livestream naturally resonated with me. The image of people separated by hundreds of kilometres, connected only by fibre-optic cables yet working towards a common goal (even if only to reach the game's final trainer), was a poetic, hopeful and inspiring vision.

Concluding thoughts

After going through the extraordinary scenario of a global pandemic, after an event like Roots of the Future and especially after being so inspired by the poetic promise of digital networks, I realised that I could not go back to writing traditionally notated scores that fetishised and obsessed over systems of pitch relationships. In my mind, I drew a parallel between letting go of my “lattice”-oriented music and the way humankind cannot return to a so-called pre-pandemic “normalcy”. The collective trauma of such a situation cannot easily be forgotten; I, at least, have not forgotten it. If we, as a global society, strive to forget it and merely gloss over it, then, I suggest, we are actively doing a disservice to everyone affected by the social inequalities and injustices that were exposed to an unprecedented degree by unprepared governing bodies and by structural “-isms” and phobias. Similarly, if I were to go back to systems of pitch and duration, I would be conceding that the development in my artistry and character had been inconsequential. Andrew Littlejohn, anthropologist and assistant professor at Leiden University, reflects on “normalcy” and the coronavirus in the Leiden Anthropology Blog:

“(...) our ongoing destruction of biodiversity through logging, mining, and ranching—which produce the wood, minerals, and meat composing our “normalcy”—enables new viruses to species jump. How invisible infrastructures of persuasion structuring our political economies produce a politics unable to cope with coronavirus. (...) If such accounts are correct, then as another anthropologist, Steve Caton, argued more than two decades ago, ‘we have to rethink our notion of the event. It isn’t a periodic or a cyclic phenomenon which appears in a moment of disruption, only then to be reabsorbed by the normative order; it is in a sense always already there, though under the surface or in the background, and then appears spectacularly for a while.’ ” 9

Work setup and realisation

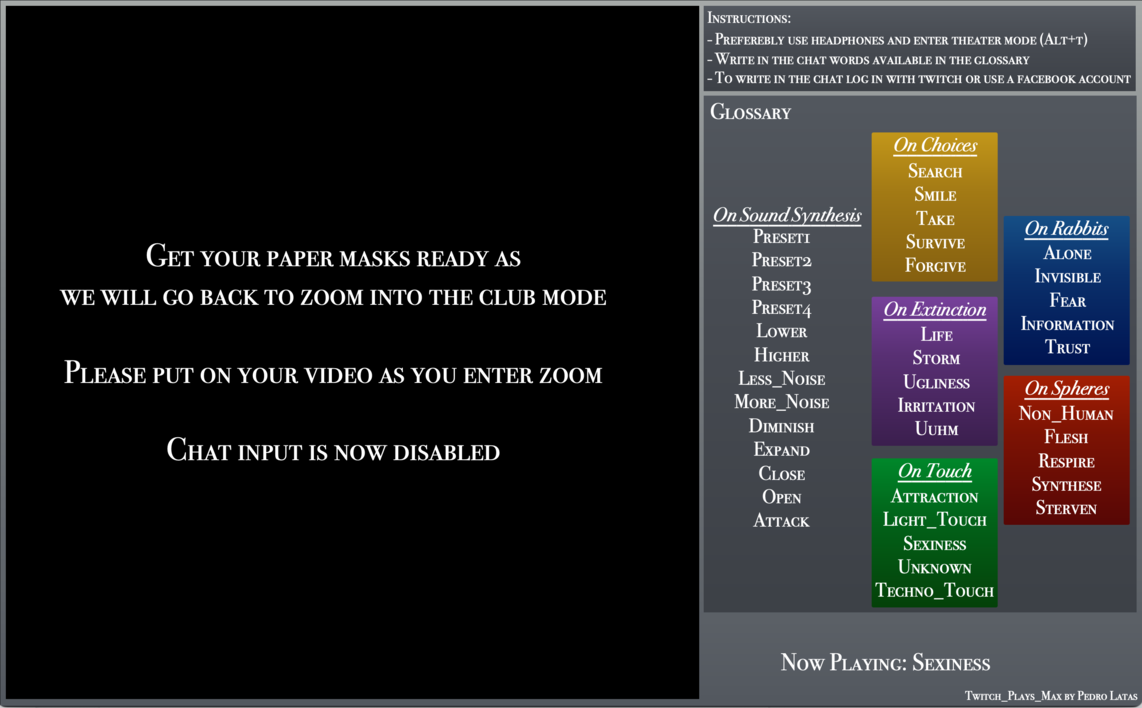

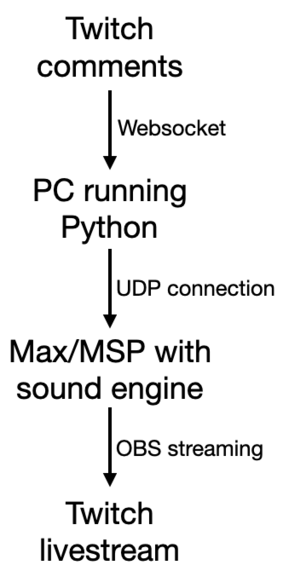

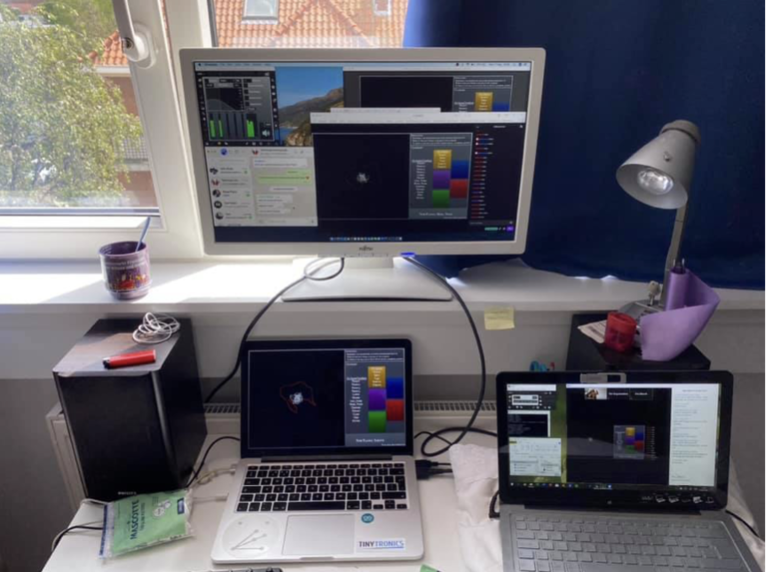

To create my own interactive system based on Twitch Plays Pokémon, I needed code that opens a WebSocket (a TCP/IP connection) between the Twitch chat and my own computer. After some online searching, I found a repository by Geert Verhoeff with the necessary Python code to run such a system.8 This Python code could only run on a PC, so a second computer was used to run the WebSocket code. The gathered data was then sent over UDP on the local network to my main Mac computer, which ran a Max/MSP sound engine and the streaming software. The images above summarise the connections made and the physical setup.
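The chain described above (chat text in, data out to Max over UDP) can be sketched in Python. This is not Geert Verhoeff's code, which is not reproduced here; it is a minimal sketch under stated assumptions. It reads the chat through Twitch's plain IRC interface (`irc.chat.twitch.tv`, port 6667) with an anonymous `justinfan` login instead of a WebSocket, and it forwards each chat message as a hand-encoded OSC message, since Max's `udpreceive` object expects OSC. The host address, port 7400 and the `/chat` OSC address are hypothetical.

```python
import socket

# Hypothetical values: replace MAX_HOST with the Mac's address on the local
# network; MAX_PORT is the port a Max [udpreceive 7400] object would listen on.
MAX_HOST = "127.0.0.1"
MAX_PORT = 7400


def parse_privmsg(line):
    """Return (user, text) for a Twitch IRC chat line, or None for other lines.

    Example: ':viewer!viewer@viewer.tmi.twitch.tv PRIVMSG #channel :on rabbits'
    """
    if not line.startswith(":") or " PRIVMSG " not in line:
        return None
    prefix, _, rest = line[1:].partition(" PRIVMSG ")
    user = prefix.split("!", 1)[0]
    _, _, text = rest.partition(" :")  # text follows the channel name
    return user, text.strip()


def osc_string(s):
    """Encode an OSC string: UTF-8 bytes plus a null, padded to a multiple of 4."""
    data = s.encode("utf-8") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, text):
    """Build a minimal OSC 1.0 message carrying a single string argument."""
    return osc_string(address) + osc_string(",s") + osc_string(text)


def forward(udp, text, host=MAX_HOST, port=MAX_PORT):
    """Send one chat message to Max as the OSC message '/chat <text>'."""
    udp.sendto(osc_message("/chat", text), (host, port))


def run(channel):
    """Read a channel's chat anonymously and forward every message to Max.

    Needs a live network connection; assumes Twitch's IRC endpoint on port 6667.
    """
    irc = socket.create_connection(("irc.chat.twitch.tv", 6667))
    irc.sendall(b"NICK justinfan12345\r\n")  # anonymous, read-only login
    irc.sendall(f"JOIN #{channel}\r\n".encode())
    udp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    buffer = b""
    while True:
        chunk = irc.recv(4096)
        if not chunk:  # server closed the connection
            break
        buffer += chunk
        *lines, buffer = buffer.split(b"\r\n")  # keep any partial line
        for raw in lines:
            line = raw.decode("utf-8", errors="replace")
            if line.startswith("PING"):
                # Answer keep-alive pings so the server does not disconnect us.
                irc.sendall(line.replace("PING", "PONG", 1).encode() + b"\r\n")
            else:
                msg = parse_privmsg(line)
                if msg is not None:
                    forward(udp, msg[1])
```

On the Max side, a `[udpreceive 7400]` object would be expected to output messages such as `/chat on rabbits`, which can then be routed to the sound engine. Only `parse_privmsg` and the OSC helpers run without a network; `run()` needs a live connection.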

The interface streamed back to Twitch included the glossary of words participants could type in the chat and a visual feedback screen based on oscilloscope representations of the sound output. The glossary consisted of words relating to the other projects of Roots of the Future (“On Choices”, “On Rabbits”, “On Extinction”, “On Spheres”, “On Touch”) and words relating to the synthesis parameters of a constant drone. Because many of the people attending the online concert had no musical background, I tried to keep the words referring to sound synthesis somewhat ambiguous and “non-technical”, while still describing the resulting sound changes reasonably accurately. Since the piece was still presented as an “interlude piece”, the words relating to the other projects were not all available from the beginning; instead, they were revealed progressively across the five iterations of the piece.
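The glossary logic can be sketched as a lookup table with progressive unlocking. The five project words come from the text above; the drone words, the parameters they control, their step sizes, and the assumption that one project word is revealed per iteration in the listed order are all hypothetical illustrations, not the piece's actual vocabulary or mapping.

```python
# Hypothetical drone vocabulary: each word nudges one parameter (range 0 to 1).
DRONE_WORDS = {
    "brighter": ("brightness", +0.1),
    "darker": ("brightness", -0.1),
    "louder": ("level", +0.1),
    "softer": ("level", -0.1),
    "wider": ("spread", +0.1),
    "narrower": ("spread", -0.1),
}

# Project words from Roots of the Future; one-per-iteration order is assumed.
PROJECT_WORDS = ["on choices", "on rabbits", "on extinction", "on spheres", "on touch"]


def available_words(iteration):
    """Words accepted in iteration 1 to 5: every drone word plus the first
    `iteration` project words."""
    return set(DRONE_WORDS) | set(PROJECT_WORDS[:iteration])


def apply_message(state, text, iteration):
    """Update the drone state for one chat message.

    Returns the recognised word, or None if the message is not in this
    iteration's glossary. Drone parameters are clamped to [0, 1].
    """
    word = " ".join(text.lower().split())  # normalise case and spacing
    if word not in available_words(iteration):
        return None
    if word in DRONE_WORDS:
        param, step = DRONE_WORDS[word]
        state[param] = min(1.0, max(0.0, state[param] + step))
    else:
        # Hypothetical: remember the project word so the engine can react to it.
        state["last_project"] = word
    return word
```

Normalising case and whitespace lets messages such as `On  Touch` or `BRIGHTER` match the glossary, and clamping keeps repeated requests from pushing a parameter outside its range.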