2. Instruments, techniques and choices

This chapter contains a brief description of my electronic equipment. I find it natural to call the units involved instruments, machines and/or devices, since their functions at different times might be associated with all of these labels. I will describe some important features and techniques, while the musical aspects relating to some of these techniques will be further described and discussed elsewhere, particularly in Chapter 3. Reflections about my choice of instruments on an operational level will be presented as I go along, while other important and underlying reasons for these choices will be reflected on towards the end of the chapter. I wish to emphasise that these descriptions are not based on the technological aspects of music technology. Being a trained musician, not a technologist, I focus on the practical use of my instruments and the sounding results, not on the technology involved.

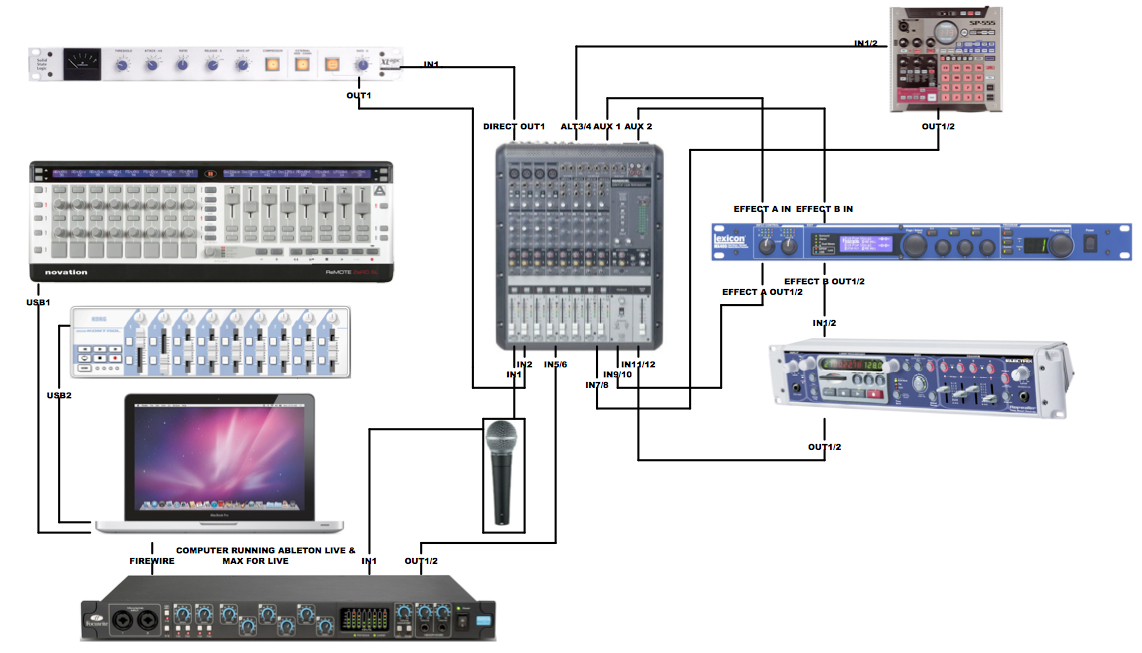

2.1 Technical setup

This is an overview of my present setup. (A larger version will be found in the appendix.) As shown in the figure, the microphone signal is split and sent both to the mixer and to the audio interface. In the centre is the Mackie Onyx 1220 mixer, which receives input from all of the connected devices. Then, clockwise from the upper right:

Roland SP 555 Creative sample workstation (input from Alt 3-4, output to mixer Ch. 7/8)

Lexicon MX 400 Dual stereo/Surround Reverb Effects Processor, processors A & B (input from aux 1&2, output: A to mixer Ch. 9/10, B to Electrix Repeater Loop Based Recorder)

Electrix Repeater Loop Based Recorder (input from Lexicon B-processor, output to mixer Ch. 11/12)

Focusrite Saffire Pro 40 Audio Interface (input from split mic signal, output to mixer Ch. 5/6)

MacBookPro computer, running Ableton Live and Max for Live (connected through FireWire to audio interface)

Korg Nano Kontrol, controlling Ableton Live with Max for Live patches (USB connection)

Novation Remote Zero, controlling the Granular/Filter patch in Max for Live (USB connection)

SSL compressor (input from Direct Out 1, output to mixer Ch. 2)
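The connections listed above can also be read as a small routing graph. The following is a minimal sketch in Python, not part of the actual setup: the node names are hypothetical labels, the audio interface and computer are collapsed into one node for simplicity, and `paths_to_mixer` is an illustrative helper for tracing how a signal reaches the mixer.

```python
# Hypothetical node names; the connections follow the setup list above.
ROUTING = {
    "microphone": ["mixer", "interface_and_computer"],  # split mic signal
    "mixer": ["roland_sp555", "lexicon_a", "lexicon_b", "ssl_compressor"],
    "roland_sp555": ["mixer"],            # fed from Alt 3-4, returns on Ch. 7/8
    "lexicon_a": ["mixer"],               # fed from aux 1, returns on Ch. 9/10
    "lexicon_b": ["repeater"],            # fed from aux 2, feeds the loop machine
    "repeater": ["mixer"],                # returns on Ch. 11/12
    "interface_and_computer": ["mixer"],  # returns on Ch. 5/6
    "ssl_compressor": ["mixer"],          # fed from Direct Out 1, returns on Ch. 2
}


def paths_to_mixer(source, routing=ROUTING, target="mixer"):
    """Return every cycle-free path from source to the mixer."""
    found = []

    def walk(node, path):
        if node == target and len(path) > 1:
            found.append(path)
            return
        for nxt in routing.get(node, []):
            if nxt not in path:  # never visit a device twice in one path
                walk(nxt, path + [nxt])

    walk(source, [source])
    return found
```

For example, `paths_to_mixer("lexicon_b")` gives the single path through the Repeater, which is why effects from processor B can be recorded into a loop.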

2.2 The electronic devices and their functions as instruments

My equipment has changed, and also expanded since I started working with electronics in the early 90s. The devices I use have been chosen for special reasons, based on earlier experiences. These choices are not choices between either practical aspects/usability or musical qualities. The motivation for and results of my choices are often intertwined. On the one hand, the usability of a device clearly has consequences for the musical use and results, and on the other hand, musical motivation gives premises for the choice of instruments, the setup of controllers and the mapping of the various functions. In a way, performing with live electronics, you build your own instrument, even if the different components consist mainly of mass-produced devices. Furthermore, this is an ongoing process, always with room for improvements and changes. It is also a fact that usability is not always concerned with the structure and design of the device, but is also dependent on personal preferences and not least habits and internalised skills. I will describe the different devices in my setup, focusing on what made me choose them, how I use them in relation to each other, and, where relevant, their strengths and shortcomings.

2.2.1 Mixer, Mackie Onyx 1220

This is a 12-channel analogue mixer that I obtained in 2003[13]. It is relatively large (for travelling), but I chose this model for two main reasons: it has high quality pre-amps, and it has volume faders (not knobs). There are also several options available for sending signals to other devices, as shown in my setup overview: aux sends 1&2, direct outs and routing on each channel (Alt. 3-4 out). This makes it possible to connect to and mix between all of the devices. I could not work this setup with a mixer that did not have these options.

The importance of faders instead of knobs is also reflected in my choice of loop machine and MIDI-controllers. The reason for this is my physical experiences of having more “contact with” the levels and being more in control of them. There is also a visual aspect in this choice; it is much easier for me to obtain a visual impression of the levels by watching faders rather than knobs.

If I were to wish for a perfect mixer, it would be smaller in size, but with the same features as this one – and it should have 4 aux sends!

2.2.2 Lexicon MX 400 Dual stereo/Surround Reverb Effects Processor

I obtained this after my first effect-machine broke down in 2004, and I wanted it because of how it sounded, for its programming options and for practical reasons, i.e. getting two processors in one machine/unit. I send from Aux 1&2 (mixer) to processors A & B. I use one bank of programmed reverbs and effects, sorted by numbers and names, and choose which processor I operate (by turning the select knob). Processor B sends through the Repeater Loop Machine back to the mixer, so the effect signal can be recorded there if necessary. Some of the effects are used as programmed by the manufacturer, but most of the ones I use have been programmed (the different pitch shifter effects) or modified (the reverbs, chorus and flanger) by me.

System and overview

When I first started using the machine, I needed a system that seemed logical to me, in order to remember where I could find the different effects and reverbs. This is especially important during a live performance when you need to be able to make choices quickly. I have 34 different programmed reverbs and effects that I use at present, and I sort them in a (to me) natural order: from 1-20 I move from “dry” reverbs to longer reverbs, and also from short delays to longer delays. From 20-30 I have different chorus, flanger and pitch-shifter effects, and from 30-34 some “special effects”. Furthermore, aux 1 on the mixer is dedicated mainly to “reverbs and delays”, while aux 2 is for chorus, flanger, pitch-shifter and “special effects”.

Usability

The effects can be programmed, named and sorted rather easily, and the names of the effects show up in the display. Ideally I would have one display and one program select button for each processor, but the lack of this is probably the price to pay for having one machine unit instead of two.

2.2.3 Electrix Repeater Loop Based Recorder

4 separate loops with faders

This is the loop machine that replaced my earlier loop machine (a Lexicon Jam Man) in 2001. The Jam Man had options for overdubbing each loop, but you had to choose which one to play at a time. The biggest difference in changing to the Repeater was the option available for creating four different loops with overdubs, that could be played back at the same time with separated volume controllers, one fader for each loop. This was a great improvement, and something that I had really wanted. Another big improvement was the option for storing recorded loops on memory cards. I used this option a lot in the beginning, especially for solo performances, while nowadays I tend to use the Roland sampler for storing recorded sounds and loops (for reasons that I will return to later). The Repeater also has other important features that I use, like the option for reversing and panning the loops.

Example II, 1:

Usability

The recording, overdubbing and playback of loops is fast and easy, as is the selection of tracks. Controlling with faders is very important, and so is the option to have the machine mounted in a rack rather than placed on a table. (It is placed next to the reverbs and effects-machine, which I often operate in close combination with the looping.) Working with this machine is limited by the fact that the length of the first loop defines the length of the other loops, even if they are separated on different channels. The method used for finding previously recorded loops from the memory card, and using them, is also a bit awkward.[14] Since I acquired my first Repeater, several newer loop machines have been produced, with different features. There are also new options available for designing computer programs which function as loop machines. Still, based on what I know about the field of “outboard-devices”, and the fact that my computer is assigned to other tasks in my setup, I think that this is one of the best solutions I can have for the time being.
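The limitation mentioned above, that the first loop defines the length of the others, can be illustrated with a toy model. This is a minimal sketch and not the Repeater's actual behaviour: the class name `FourTrackLooper` is hypothetical, and cutting or zero-padding later recordings to the shared length is an assumption made only to keep the example short.

```python
class FourTrackLooper:
    """Toy model: four loops sharing one length, each with its own fader."""

    def __init__(self, tracks=4):
        self.loop_length = None        # set by the first recording
        self.loops = [None] * tracks
        self.faders = [1.0] * tracks   # 0.0 = silent, 1.0 = full level

    def record(self, track, samples):
        """Record onto a track; a second recording on it is an overdub."""
        if self.loop_length is None:
            self.loop_length = len(samples)
        # Assumption: later recordings are cut or zero-padded to fit.
        fitted = list(samples[:self.loop_length])
        fitted += [0.0] * (self.loop_length - len(fitted))
        if self.loops[track] is None:
            self.loops[track] = fitted
        else:
            self.loops[track] = [a + b for a, b in zip(self.loops[track], fitted)]

    def mix(self):
        """Sum one cycle of all recorded loops, weighted by their faders."""
        out = [0.0] * (self.loop_length or 0)
        for loop, gain in zip(self.loops, self.faders):
            if loop is not None:
                out = [o + gain * s for o, s in zip(out, loop)]
        return out
```

Pulling a fader down to 0.0 silences that loop in `mix()` without erasing it, which corresponds to the separate volume control per loop described above.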

2.2.4 Roland SP 555 Creative sample workstation

Being both sampler and effect-machine, the Roland SP 555[15] has functions that overlap the Repeater loop machine and the Lexicon effect machine, but it has a very different structure. It has a lot of different functions and I will focus on what is important for me now with this machine, and at the same time look at how it is complementary to the instruments it overlaps with.

Pre-recorded sound library with easy access

Pre-recorded sounds are accessible in a quite different way here, than on the Repeater: the Repeater loop-machine operates with numbers on a display, selected one at a time by turning and pressing a knob, and playing a maximum of four loops at the same time. This Roland machine has 16 sounds for each of the 10 banks (8 of them stored on memory cards), assigned to 16 pads ‒ so it is faster and easier to find and play back the samples. Also, the Roland has an option for playing back a sound “one time only”; it does not automatically loop the sound like the Repeater does. As mentioned before, the consequences of this for me are that most of my pre-recorded sound samples are played back from this machine.

Organising sounds

Some different “setups” of sound-samples are designed for specific projects, but I also have “palettes” that are possible sources for improvisational use in several projects (as demonstrated in Chapter 3). As with the Repeater, I have to remember the sounds as numbers and banks, and this can sometimes be a challenge. The grouping of sounds that belong together has been one of my strategies here, and it is also important to prepare and remember (“go through the sounds”) during the hours before a performance.

Pads and control functions

When triggering pads on the Roland, there are three options for playback: the sample can be played back once, it can be repeated as a loop, or you can select “Gated playback”, which makes the sound stop when you release the pad. This last function is especially important because of the percussive effect that you do not get with faders on the Repeater, and using “on and off” will not give the same sensation as the gated pad-function. The pads can also, if selected, be pressure-sensitive. The pads as controllers, especially when triggered in “gate” mode, give a very physical feeling of playing (more than just controlling).
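The three playback options described above can be compared in a short sketch. This is an illustration only, not Roland's implementation: the function name, the mode labels and the assumption that a gated sample plays at most once while the pad is held are all hypothetical.

```python
def pad_playback(sample, mode, hold_length, total_length):
    """Render total_length output values for one pad press.

    sample       list of sample values assigned to the pad
    mode         "one_shot", "loop" or "gate" (hypothetical labels)
    hold_length  how many output values the pad is held down for
    """
    out = []
    for n in range(total_length):
        if mode == "one_shot":
            playing = n < len(sample)           # play once, then silence
        elif mode == "loop":
            playing = True                      # repeat until stopped
        elif mode == "gate":
            # Assumption: plays once at most, and stops on release.
            playing = n < min(hold_length, len(sample))
        else:
            raise ValueError("unknown mode: " + mode)
        out.append(sample[n % len(sample)] if playing else 0.0)
    return out
```

In "gate" mode the sound is cut the moment the pad is released, which is what produces the percussive effect that faders or on/off switching do not give.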

Example II, 2:

Filter and D-beam control

The effects on the Roland Machine can be assigned to each sound sample, and also to everything that is routed from my mixer and into the machine. One of the effects I use quite often is the EQ filter-effect called Super Filter. By using 3 knobs, I can choose between four types of filters[16] and adjust the cut-off frequency and the amount of peak in this area. Like all the effects on this machine, this is a very “rough” type of processing, and my choices are also very “rough”, with the most important being the variation in sound and the kind of transformation that the filtering gives. It is also an effect that I use sometimes instead of the volume control to make the sound fade in and out. In addition to the filter-effect that can be assigned to each sample, there is an option for filtering the total sound of all the samples and sounds by using a so-called “D-beam controller function” in “filter” mode. I can then control the cut-off frequency by moving my hand over a sensor on the machine after selecting a pre-programmed filter (assigned to the pads). The movement of my hand produces quite a different effect and sensation than when using the knobs.

Example II, 3: With Michael Duch (see 4.2.4).

Other effects

As mentioned earlier, I use the Lexicon effect machine for different reverbs, delays and pitch-shift effects. The effects I use from the Roland are the more extreme ones, which I do not have on the Lexicon, e.g. the Ring Modulator, the Slicer, the DJFX Looper, the Fuzz and Overdrive (more about this in Chapter 3). Alternative sources for producing these effects could be more specialised effect-boxes (one dedicated to each effect), or also possibly plug-ins in Ableton Live. More specialised effect-boxes might sound better, but for the time being I have placed priority on having a user-friendly setup, and I will return to this later on.

Usability

As described here, the Roland has some qualities that complement my setup, and this is primarily due to the structure of the machine. Both the pads as controllers and the visual oversight serve to make this an important instrument, especially for working with pre-recorded sounds. As for live recording and the playback of loops, I find the Repeater to be more suitable for intuitive operations, especially when working with several layers. (For me, the live sampling operation does not seem to be quite as easy on the Roland if you want to make more than one loop with overdubs “on the fly”[17].)

2.2.5 The SSL Compressor Clone[18]

The compressor in my setup has one important function: the option for making the sound of the voice more “present”. It is only used for compressing the clean microphone signal (coming from direct out 1-4 on the mixer), and it makes it possible to put “small” sounds, like whispering or talking with a low sound level, up front in the musical scenery. Furthermore, I sometimes use it for very loud passages where the voice sound needs more “power” in order to be heard. The settings for the compressor are normally not changed during a performance, only the level on the return channel, so the usability is not an important issue here. The challenge involved in using a compressor live on stage is of course to avoid compressing the instruments around me.
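How a compressor makes “small” sounds more present can be shown with a simple static gain calculation. This is a minimal sketch: the threshold, ratio and make-up gain below are hypothetical values chosen for illustration, not the settings used in this setup, and a real compressor also has attack and release behaviour that is left out here.

```python
def compress_db(level_db, threshold_db=-20.0, ratio=4.0, makeup_db=10.0):
    """Return the output level in dB for a given input level in dB."""
    if level_db > threshold_db:
        # Above the threshold, every `ratio` dB of input gives 1 dB of output.
        level_db = threshold_db + (level_db - threshold_db) / ratio
    # Make-up gain lifts the whole signal, bringing quiet sounds forward.
    return level_db + makeup_db


whisper = compress_db(-40.0)  # below threshold: only lifted by make-up gain
loud = compress_db(-6.0)      # above threshold: reduced, then lifted
```

With these assumed settings a whisper at -40 dB comes out at -30 dB, while a loud passage at -6 dB comes out at -6.5 dB, so the distance between them shrinks from 34 dB to 23.5 dB.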

2.2.6 Computer, Ableton Live and MIDI-controllers

Up until about a year before the start of this project, I had an idea about “not becoming a laptop musician”. This idea came partly from having experienced several concerts where the musicians were looking at their laptops and displaying few signs of interacting with the musicians around them, and sometimes not even with the music played. Furthermore, I had some prejudices about the musical opportunities available from something that I regarded as being “computer music”. Working with sensors and a granular synthesis-patch made in the MaxMSP program[19] in a BOL-project made me change my mind about this. I discovered that the computer software-based processing in this particular patch could create musical components that were very different from, and also in a sense more organic than, the sounds my outboard machines could produce. For me, much of the organic character derived from the possibilities of randomness that could be implemented during processing. It was also important that the sound that was processed came from my voice. Working with sensors as controllers was exciting, but I also experienced limitations in respect of what I actually could control and play with. I realised that MIDI-controllers with knobs and faders could give me more detailed and varied control than the sensors, and in a more physical and intuitive way than (what I experienced that) the laptop keyboard could offer. I therefore included the Novation Remote Zero MIDI controller with the computer, the audio interface and MaxMSP in my setup. I realised that I wanted the option to include several MaxMSP patches in my setup. To do this, I needed a new setup on my computer, and this led me to Ableton Live when Max for Live was introduced.

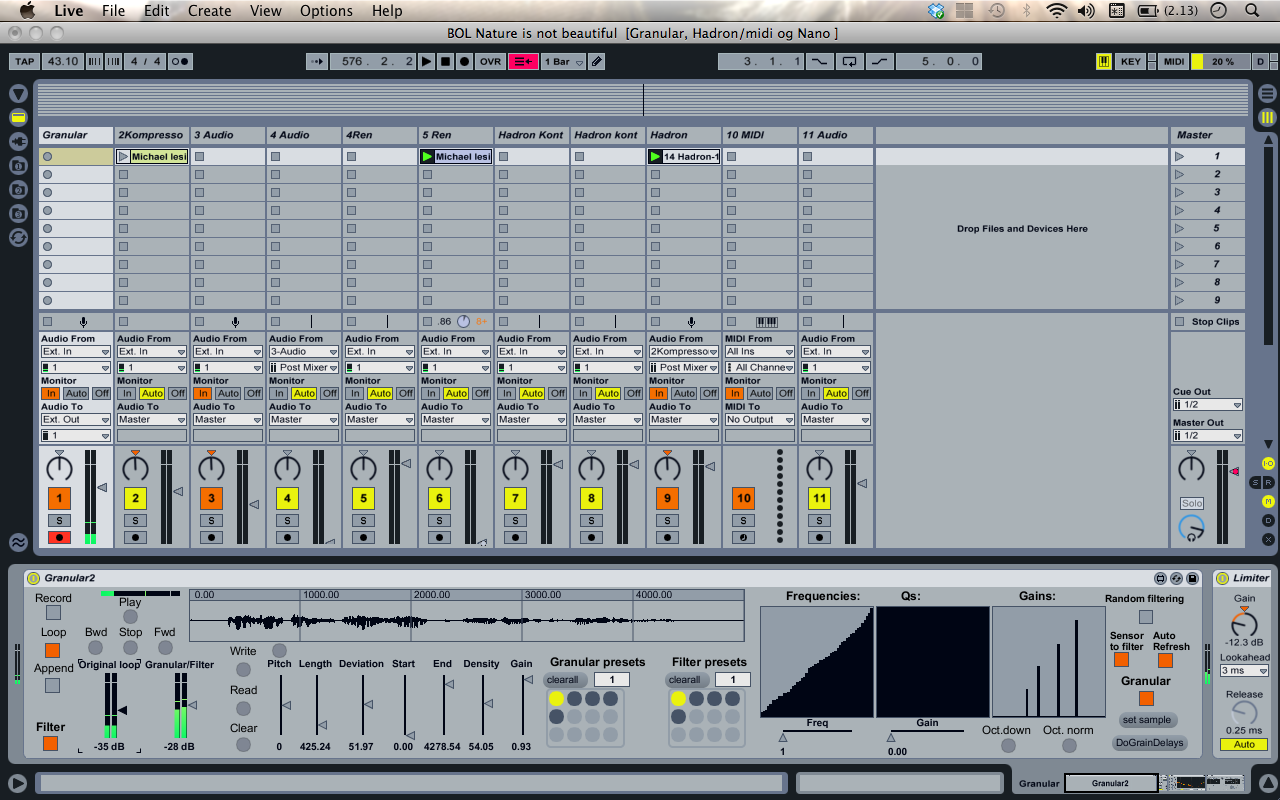

Ableton Live with Max for Live

Ableton Live, scene view

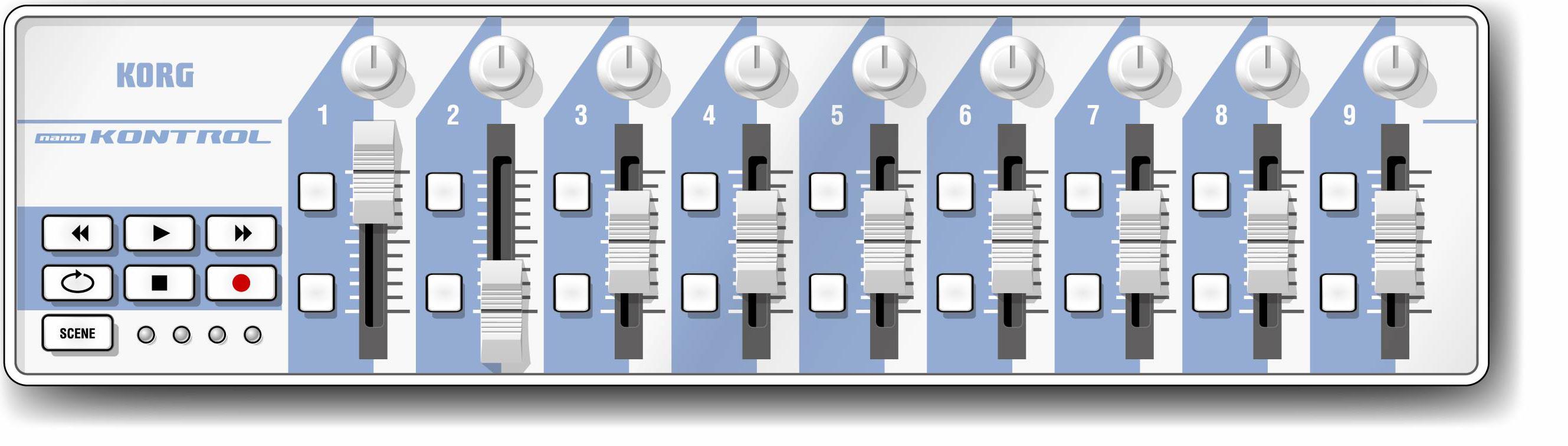

Korg Nano Kontrol, small midi-control unit

With Ableton Live, extended with Max for Live, I have an “extra mixer”. At the moment I control it with a small Korg Nano Kontrol. This was chosen mostly because of its size, in order to maintain a good working position with my setup, and also because it seemed to have the functions I needed at the time. (I am considering replacing it with a controller with more functions, and I will return to this later on.) With this setup, I can use several MaxMSP-patches as plug-ins:

- The MaxMSP Granular/Filter Patch

- The Live Convolve Patch

- The Hadron Particle Synthesizer

These will be presented below.

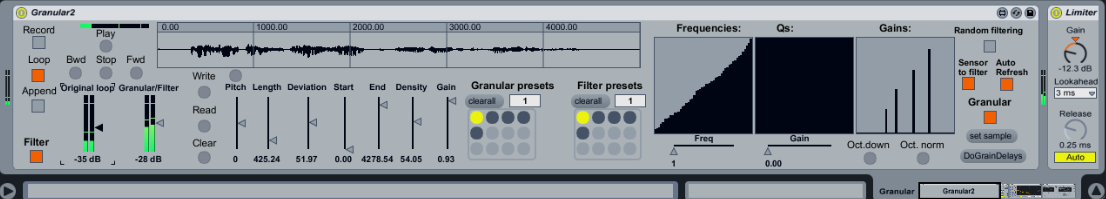

MaxMSP Granular/Filter Patch

MaxMSP Granular/Filter Patch as plug-in in Ableton Live

The Granular/Filter patch ‒ hereafter referred to as the G/F patch ‒ was designed for me by Ståle Storløkken. This is a patch that combines granular synthesis[20] and frequency filtering. To explain granular synthesis in simple words: the sound input is divided into small grains and played back with variables such as length of grains, density, deviation (random variation in length of grains) and pitch. All of these variables can be controlled to a certain degree. Furthermore, in this patch the sound can be filtered through a graphic EQ-filter where I can single out very narrow frequency areas, or use a random function to make the EQ filtering change randomly.
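The four variables named above (grain length, density, deviation and pitch) can be made concrete in a small sketch. This is not the G/F patch, which is built in MaxMSP. It is a minimal illustration in plain Python, in which the function name, the random choice of where each grain is read and placed, the Hann-shaped fade on each grain and the `seed` argument are all assumptions.

```python
import math
import random


def granulate(source, out_length, grain_length, density, deviation, pitch, seed=0):
    """Scatter short grains of `source` into an output of out_length values.

    grain_length  nominal grain size in samples
    density       average number of grains started per output sample
    deviation     0..1, random variation of the grain length
    pitch         playback rate (> 0): 1.0 = original, 2.0 = one octave up
    """
    rng = random.Random(seed)
    out = [0.0] * out_length
    for _ in range(int(out_length * density)):
        length = max(2, int(grain_length * (1 + deviation * rng.uniform(-1, 1))))
        max_read = len(source) - 1 - pitch * (length - 1)
        if max_read < 0:
            continue  # source too short for this grain at this pitch
        read_start = rng.uniform(0, max_read)  # where the grain is read from
        start = rng.randrange(out_length)      # where the grain is placed
        for i in range(length):
            if start + i >= out_length:
                break
            pos = read_start + i * pitch
            lo = int(pos)
            hi = min(lo + 1, len(source) - 1)
            frac = pos - lo
            value = source[lo] * (1 - frac) + source[hi] * frac  # linear interpolation
            window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (length - 1))  # fade in/out
            out[start + i] += value * window
    return out
```

Raising `deviation` makes the grain lengths less uniform, which is one simple way in which randomness can enter the processing.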

Example II, 4:

Usability

Remote Zero SL MIDI controller

Ståle Storløkken also helped me to map the different functions in the patch to the Remote Zero SL MIDI controller. To work with this patch in realtime, I wanted the controller to “connect” with the visual picture of the patch. As an example, the upper left knobs on the controller are assigned to each variable as listed above (pitch, density, etc.). Furthermore, the two faders to the right are assigned to frequency and gain in the filter frequency control, and the two faders to the left control the original loop and the processed loop.
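A controller mapping of this kind can be thought of as a table from MIDI control-change (CC) numbers to patch parameters. The sketch below is an illustration only: the CC numbers, parameter names and value ranges are hypothetical, since the actual mapping is not documented here. What is standard is that a MIDI CC value runs from 0 to 127.

```python
# Hypothetical CC numbers and ranges: (parameter name, minimum, maximum).
CC_MAP = {
    21: ("pitch", 0.5, 2.0),             # upper left knobs: grain variables
    22: ("density", 0.0, 1.0),
    23: ("grain_length", 10.0, 500.0),
    24: ("deviation", 0.0, 1.0),
    41: ("original_level", 0.0, 1.0),    # two faders to the left
    42: ("processed_level", 0.0, 1.0),
    47: ("filter_frequency", 20.0, 20000.0),  # two faders to the right
    48: ("filter_gain", 0.0, 1.0),
}


def apply_cc(params, cc_number, cc_value):
    """Scale a 0-127 controller value into the range of its parameter."""
    if cc_number not in CC_MAP:
        return params  # unmapped controls are ignored
    name, low, high = CC_MAP[cc_number]
    params[name] = low + (high - low) * cc_value / 127
    return params
```

Laying out the table in the same order as the patch's visual layout is one way of making the controller “connect” with the picture on the screen.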

The Live Convolve patch

The Live Convolve Patch (hereafter referred to as Convolution) was made by my colleagues Trond Engum[21] and Øyvind Brandtsegg. It is based on the principle of convolution reverb.[22] In short: I sample a sound or a phrase, in the same way as an impulse response (see footnote). When I activate the convolution-function, the next sound I send will be convolved with the sample, and the resulting sound will be a mix of the two. I often use it to blend one vocal phrase with another.
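The underlying operation, convolving an incoming sound with a previously sampled one, can be written out directly. This is a minimal sketch of textbook convolution in plain Python, not the Live Convolve patch itself. A real-time implementation would be expected to use a much faster method, and the function name is hypothetical.

```python
def convolve(signal, sampled):
    """Convolve `signal` with `sampled`, which plays the role of an impulse response."""
    if not signal or not sampled:
        return []
    out = [0.0] * (len(signal) + len(sampled) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(sampled):
            # Every input value triggers a scaled copy of the sampled sound.
            out[i + j] += s * h
    return out
```

Each value of the incoming sound triggers a scaled copy of the sampled phrase, so the result carries characteristics of both. It is also longer than either input, in the same way that a reverb tail outlasts the sound that excites it.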

Example II, 5:

Usability

This is a Beta version that requires some adjustments in order to be fully functional. It is fairly easy to operate, and I am looking forward to a version that is even more suitable for a live setting. For now I have used it mostly in studio sessions and as part of live sequences with low volume.

Hadron Particle Synthesizer

The Hadron Particle Synthesizer ‒ hereafter referred to as the Hadron ‒ is an open source plug-in, created by Professor Øyvind Brandtsegg and several colleagues at the Music Technology Section, Department of Music, NTNU. The Hadron is based on granular synthesis, and designed to make it possible to “morph” seamlessly between different pre-programmed sound processing techniques, called states. At the moment I do not have all the functions of the patch mapped to a controller, but by using only the Korg Nano Kontrol, I can record sound-samples and morph the samples between the different “states”. If I want to control the so-called “expression controllers” in the patch, I will need another MIDI controller, and that would be the reason for replacing the small Nano Kontrol.
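The idea of morphing between states can be illustrated by treating a state as a set of parameter values and blending two such sets. This is only a sketch of the general principle, assuming simple linear interpolation. It does not describe how the Hadron is actually implemented, and the function and parameter names are hypothetical.

```python
def morph(state_a, state_b, position):
    """Blend two parameter states: position 0.0 gives state_a, 1.0 gives state_b."""
    position = min(1.0, max(0.0, position))  # keep the control within range
    return {
        name: (1 - position) * state_a[name] + position * state_b[name]
        for name in state_a
    }


# Two hypothetical states with made-up parameter names and values.
soft = {"grain_rate": 10.0, "pitch": 1.0}
dense = {"grain_rate": 30.0, "pitch": 2.0}
halfway = morph(soft, dense, 0.5)
```

Because every parameter moves at once, a single fader can produce a large change in sound, which may be part of why such an instrument is both flexible and difficult to predict.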

Example II, 6:

Usability

This patch has a lot of possibilities, and is fascinating for this reason. The morphing between states gives it a very flexible and varied character. It is very complex, and for me it has been interesting, but after a while also frustrating to explore, because it seems very difficult to control. (I will discuss the need for control and predictability in Section 2.3.) It is possible that I can find a control unit that enables me to work with the Hadron in a (for me) more logical and intuitive way, and probably this would change this situation, at least to some extent. In contrast to the Live Convolve patch, which I could implement in my setup and vocabulary immediately, the Hadron seems to be a “new instrument”. For now, I have implemented in my vocabulary just a small part of all the things that Hadron can actually do. I will discuss this choice further in the next section, but would point out that I experience the “small part of Hadron” that I use, as being sufficiently predictable, and both possible and valuable to implement in the musical vocabulary of myself and my fellow musicians.

2.3 Choice of instruments

In the description of my electronic devices, I have focused mostly on their different functions and usability, while I will go further into the musical parameters in Chapter 3. There are also underlying musical and aesthetic reasons for my choice of instruments that call for more general reflections. These reflections concern the relationship between different genres and the field of music technology, and also the act of improvising.

2.3.1 The role of music technology in music/the role of music in music technology

In the field of music technology and live electronics, new products and devices are being developed and presented all the time, both as commercial products (like most of my devices), and as a result of research and experimentation in a less commercialised, more academic and experimental field. (STEIM[23] in Amsterdam and IRCAM[24] in Paris are examples of important contributors, and also – as already shown ‒ the Music Technology Section, Department of Music, NTNU.) Digital electronic musical instruments today can be many things, for example: Wii-controllers and a computer (Alex Nowitz, see 1.5.6), a suit or a glove with sensors (Rolf Wallin), a custom-made computer program with a conventional MIDI controller (Maja Ratkje), Ableton Live or other DAWs, an iPad/iPhone with various apps, a DJ-sampler … ‒ there are numerous possibilities. One important motivation for developing new devices is rooted in the need for a stronger connection between bodily impulses and sound. Hyper instruments[25], new instruments, and the use of motion capture, are all important fields of development in this regard. Many new contributions in this field were presented at the NIME Conference (New Interfaces for Musical Expression), 2011, in Oslo, which I attended (and where I also presented a project with my vocal ensemble, see Chapter 5). What struck me when presented with the range of new devices and techniques that were used in various concerts, was that I often felt a lack of musical coherence and ideas in the performances. Since this was a conference for technologists, rather than musicians, this was probably not surprising. Still, I think this experience reflects an important issue. This issue has often been addressed and discussed at conferences on music technology that I have attended, by my colleagues at the Section for Music Technology, and also by my fellow musicians in the field of improvised music.
It is a discussion about the role of technology in music on very many levels and from different viewpoints and angles. In my field of musicians this is often simplified with the question: “What comes first, music or technology?” (i.e.: is your motivation based on a musical idea or fascination for a technical invention? Does the technology deliver the premises for the music, or is it the other way round?). This question represents a rather polarised and also biased view on “technology” (especially “advanced technology”), but it also reflects some key issues that I will return to in the following. I cannot go into all the aspects and perspectives of the discussion here, but the choice of tools and techniques in my work (and also the choices of other musicians in my field), should be seen both in the light of different approaches to the various musical tools delivered within the field of music technology, and as a basic consequence of different musical choices relating to aesthetics and personal musical expressions.

2.3.2 The electronic instrument as a contributor to new music

There seems to be agreement in the forums on music technology that I have attended on one important issue: a crucial part of the development of a new instrument is that it should be mastered musically and put into a musical context where the musical ideas are the main focus. This process has to involve a musician (or several). It is a demanding project, as Alex Nowitz has confirmed to me when sharing his experiences about developing and rehearsing his work using Wii-controllers[26] and his new Strophonions[27]. (I experienced the work of Nowitz, presented at the NIME, as an exception from other presentations in this regard ‒ it is obvious to me that his musical work and the development of the instrument have intertwined very closely along the way.) Furthermore, I find that Nowitz’ project demonstrates how the development of new technology can contribute directly to new kinds of music, and that the technology and the new instrument can sometimes be a more important part of this development than the musician’s genre as such.

2.3.3 Development and use of instruments within genres

The use of live electronics is not restricted to a special musical genre (as pointed out in Section 1.5) ‒ on the contrary, it is important in popular music (electronica, rock, hip hop and indie-pop, etc.), noise, jazz, improv and experimental, as well as in modern contemporary music, electroacoustic music and performance art, etc. In the development and use of devices, one could suggest (very roughly and polarised), a principal difference between the major developments in popular music, seen as part of the commercial music industry, and other genres:

- The digital devices are, in the commercial industry, often constructed to fulfil the genres' conventions, on premises that are defined by the genres.

- The devices and instruments in other, more experimental genres are either constructed or used in new ways, in order to explore and create new musical possibilities. This use of technology, although experimental, can be seen as taking place within a genre (for instance: the genre of noise (Maja Ratkje), and the genre of interactive electroacoustic composition and improvisation (Victoria Johnson)). (It should be underlined here that there is also a long history of experimentation with technology in the history of popular music and rock, especially in connection with work in the recording studios, from the Beatles and Pink Floyd to Radiohead and Björk.) Moreover, as pointed out earlier, the use of technology can also be seen as being more loosely connected to a genre, in individual expressions that are perhaps more closely connected to the technology involved, as in the case of Nowitz, for instance.

2.3.4 Premises for the use of technology in music

The rough polarisation above does not answer the question: “What comes first, music or technology?”, which was mentioned in Section 2.3.1. One could argue that the technology in popular music is designed with the music in mind, and therefore that the music is setting up premises for technological development. However, this would involve overlooking the fact that the technology in use definitely also sets up premises for the music produced (see Engum, 2012[28]). Moreover, one could perhaps argue that advanced and complex technology is so unfamiliar, uncontrollable and inflexible for the performing musician that it restricts performance and creates “unmusical” premises. In this respect I think that Alex Nowitz, as an example, demonstrates the opposite.

In my artistic research project, the premises for the use of technology are strongly connected to the musical field (or genre) I am rooted in, and intimately related to my choice of musical projects in which I take part: the various improvised interplays with other musicians. My musical ideas, the need for control and flexibility, the intuitive appreciation of a sound or a technical option, the mix of acoustic and electronic sound – all of this is fundamentally grounded in my musical experience, my development and my choices along the way. During the period of my research project, I have gradually become more aware of what actually constitutes my field and my music, even if I consider it to be open and genre-crossing. The discussions and reflections about technology and music also contribute to clarity in this regard.

Choice of sounds

I work with sounds in an intuitive way. My choices of sound reflect my taste for sound as such, and also whether I experience the sound and function as being suitable in the various interplays in which I take part. As an example, I tend to use a slightly different palette when playing with the acoustic double bass than with the duo with drums/electronics or with my trio BOL (drums and synthesizers). I have noticed that I often search for a kind of “organic” sound. I have registered (as discussed in Section 1.5.6) that if a sound is “too digital”, it is harder to implement it in my mix of the acoustic and electronic. The “organicness” or “digitalness” in sound is not definable as such ‒ it is a matter of experience and taste. An example of this is my experience with the Hadron, as mentioned previously (Section 2.2.6). I first found it to be exciting, but when trying to implement it in my vocabulary, I ended up using a “small part of it”; it was hard to find sounds that were experienced as being organic, and ‒ more importantly ‒ as connecting to my other sounds and the music as a whole. (This could change, as new states in Hadron are being developed.) As I see it, my choice of sounds constitutes my personal, extended voice. (The ideal of “personal storytelling” in my genre is briefly discussed in Chapter 6.) It also defines my relationship with my genre; I am avoiding sounds that are experienced as being alien in the musical expression.

Control and predictability in improvisation

The need to be in control varies from one artist to another, and some of the choices to be made when using live electronics concern complexity, as regards both usability and processing. To be in full control (if that is a goal) will often demand either highly developed and specialised skills, or less complexity. To work with live electronics as an improvising musician, it has been necessary to find an “operating level”, where I can work intuitively. I have gradually expanded my repertoire of sounds and techniques, implementing them in the improvised interplay, operating with different musical structures and premises (see Chapters 3, 4 and 6). I have chosen to have access to a certain variety of sounds and effects, and I have focused on simplicity and usability in the setup. My choices regarding instruments, controllers and sound-processors are based on how I experience them at work, however simple or complex. These choices are also based on my former knowledge and skills.[29] The question of control is something I reflect on continuously. On the one hand, I want full control, to be able to “hear what I want to play”, and this is something which I can compare with a jazz musician's “inner ear” when listening to a harmonic progression. On the other hand, unforeseen turns are often appreciated and welcomed in improvised music ‒ where surprises and experimentation are a natural part of the playing. “Accidents” can create new music. Creating situations that bring in unforeseen elements is definitely an interesting possibility when working with live electronics. (It should be noted that in the improvised interplay, this is always a possibility regardless of which instruments are involved, through the interactive impulses of fellow musicians.) As I see it, the ability to “control the situation of not being in control” is important, as well as deciding on the level of control.
There are individual preferences here, as to what makes the improvised interplay work in a musically meaningful way. These preferences are part of what defines a field or genre (see also Chapter 6). In my genre and within the musical contexts in which I perform, I experience a basic preference for instrumental control and intuitive flexibility rather than technical complexity and unpredictability.

Having a personal vocabulary – or repeating yourself?

Given all the possibilities and choices that music technology can offer, my choices in my artistic process might perhaps appear to constitute a very “narrow” approach, especially in relation to other genres, where there is a constant search for new technology and techniques in music. In Chapters 3 and 4 I will, among other things, show how I use the same techniques and setup in different musical projects. As I see it, I work with a vocabulary that has become an extension of how I perform as a vocalist. With this vocabulary as part of my total instrument, I face a challenge which is very familiar to improvising musicians: the balance between “having a personal expression” and “repeating yourself”, between using your deeply rooted musical language in creative ways and applying “the same” solutions, phrases and forms. It is interesting to note that sometimes the use of technology can create expectations that are not present (to the same extent) when listening to other instruments. For instance, you recognise the sound of Miles Davis or Arve Henriksen – and many of us appreciate what we think of as their “personal tone” and musical vocabulary. In my artistic project I experience my use of technology as being guided (rather than limited) by choice, rooted in my personal preferences, often fundamentally dependent on inner ear experience and the need for control in the act of improvisation, as mentioned earlier. These choices should of course be questioned continuously, and the vocabulary should be developed, adjusted and sometimes also changed.

2.4 Closing comments

There are, as mentioned above, important discourses relating to the development of new instruments and the commercial aspects of music technology. How does a new tool (con)form the music? How can we be critical and when should we be? The research on and invention of new musical instruments and interfaces in academia and art can be seen as contributing new technology and ideas to the commercial industry, but also as a reaction against standardisation in the field, exploring new ways of controlling and producing electronic sound. Moreover, there are ways of being critical by exploring and challenging the use of standardised equipment within the different genres (see Engum, 2012). In my artistic project I have chosen to use rather conventional equipment and also partly conventional techniques – but my outboard devices and the DAW I use were probably not produced with my kind of music in mind. My basic goal, regardless of tools and techniques, is to start from my musical motivation, not the technical possibilities involved. But as soon as a technique becomes a part of my vocabulary and musical thought, the two can no longer be separated.

[13] One of the great concert sound-designers I have worked with over the years is a Norwegian, Asle Karstad. In those days he expressed his frustration, saying: “you get great singers working with very good microphones and effect-machines ‒ but with cheap and noisy mixers!”… I took his point.

[14] The loops on the Repeater only have numbers, so you have to remember which one you want and turn the knob until you reach the number, then select it. If you want to overdub the selected loop, you have to copy it to the internal memory, and then go back to the loop in the internal memory by turning a knob once again.

[15] This is my second machine of this kind, from 2009. It replaces the BOSS SP 303, a very similar but smaller machine, with fewer features and less memory.

[16] Filter types: Low pass filter (LPF): passes the frequency region below the cut-off. High pass filter (HPF): passes the frequency region above the cut-off. Band pass filter (BPF): passes the frequency region around the cut-off. Notch filter (NTF): passes the frequency regions other than near the cut-off.

[17] To record and play back several loops in realtime on the Roland, you have to do more than just push the record-button twice and select another track, as is the simple operation on the Repeater. It can be done in two ways, both of them requiring several button presses. So, it is just slightly more complicated, and for me that means that I will usually select the easiest and fastest method.

[18] My compressor was actually built for me by Ståle Storløkken. It is a “homemade” clone of an SSL Compressor.

[19] Max is a visual programming language, and in Max/MSP it is used to create sound patches. It was originally written by Miller Puckette as the Patcher editor for the Macintosh at IRCAM in the mid-1980s, to give composers access to an authoring system for interactive computer music (Wikipedia: http://en.wikipedia.org/wiki/Max_(software)).

[20] Granular synthesis is a basic sound synthesis method that operates on the microsound time scale. It is based on the same principle as sampling. However, the samples are not played back conventionally, but are instead split into small pieces of around 1 to 50 ms. These small pieces are called grains. Multiple grains can be layered on top of each other, and may play at different speeds, phases, volume and pitch. (Wikipedia, http://en.wikipedia.org/wiki/Granular_synthesis)
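The principle quoted in note [20] can be illustrated with a minimal sketch. It is not the Granular/Filter patch used in my setup, and the function names (`split_into_grains`, `layer_grains`), the Hann window and the hop parameter are assumptions introduced only for illustration: a recorded sample is cut into grains of a few milliseconds, which are then layered back onto a common timeline.

```python
import math


def split_into_grains(samples, sample_rate, grain_ms):
    """Cut a list of samples into consecutive grains of about grain_ms milliseconds."""
    grain_len = max(1, round(sample_rate * grain_ms / 1000))
    return [samples[i:i + grain_len] for i in range(0, len(samples), grain_len)]


def hann(n):
    """Hann window of length n, used to fade each grain in and out."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def layer_grains(grains, hop, windowed=True):
    """Place grain number i at position i * hop and sum wherever grains overlap."""
    if not grains:
        return []
    total = max(i * hop + len(g) for i, g in enumerate(grains))
    out = [0.0] * total
    for i, grain in enumerate(grains):
        window = hann(len(grain)) if windowed else [1.0] * len(grain)
        for j, value in enumerate(grain):
            out[i * hop + j] += value * window[j]
    return out


# Hypothetical usage: 20 ms grains at 44.1 kHz, layered with 50 % overlap.
source = [math.sin(2 * math.pi * 220 * t / 44100) for t in range(4410)]
grains = split_into_grains(source, 44100, 20)
texture = layer_grains(grains, hop=441)
```

With a hop shorter than the grain length, the grains overlap and the material is compressed in time; varying hop, grain order or grain length is what produces the characteristic granular textures.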

[21] Engum, Trond: Beat the Distance, Music technological strategies for composition and production, NTNU/Norwegian Artistic Research Fellow Programme 2012, (pp. 16-20).

[22] In audio signal processing, convolution reverb is a process for digitally simulating the reverberation of a physical or virtual space. It is based on the mathematical convolution operation, and uses a pre-recorded audio sample of the impulse-response of the space being modelled. To apply the reverberation effect, the impulse-response recording is first stored in a digital signal-processing system. This is then convolved with the incoming signal to be processed. The process of convolution multiplies each sample of the audio to be processed (reverberated) with the samples in the impulse response file. (Wikipedia: http://en.wikipedia.org/wiki/Convolution_reverb)
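The operation described in note [22] can be written out as a minimal sketch, assuming the dry signal and the impulse response are plain lists of sample values; the function name `convolve` is hypothetical. Each input sample is multiplied with every sample of the impulse response, and the products that land on the same output position are summed. This direct form is shown only to make the principle visible; reverb processors normally use much faster FFT-based methods.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution: wet[n] is the sum of dry[k] * impulse_response[n - k]."""
    if not dry or not impulse_response:
        return []
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, x in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # Every input sample triggers a scaled copy of the impulse response.
            wet[i + j] += x * h
    return wet


# Hypothetical usage: three input samples and a two-sample "room" with one echo.
wet = convolve([1.0, 2.0, 3.0], [1.0, 0.5])
```

The output is longer than the input by the length of the impulse response minus one sample, which corresponds to the reverb tail ringing on after the dry sound has stopped.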

[23] Studio for Electro-Instrumental Music, Amsterdam: http://www.steim.org/steim/contact.html

[24] Institut de Recherche et Coordination Acoustique/Musique, Paris: http://www.ircam.fr/

[25] Traditional instruments that are expanded through the use of electronics, like the electric violin used by Victoria Johnson, former fellow in the Norwegian Artistic Research Fellowship Program: http://creativeviolin.wordpress.com/

[26] Remote controllers designed for the Nintendo Wii video game, handheld.

[27] I have attended both a demonstration (NIME) and a concert (Trondheim) with Alex Nowitz, working with these handheld controllers, and we have had very useful conversations at these events. Nowitz' work is described further in Section 1.5. See also: http://www.steim.org/projectblog/?p=3715

[28] Trond Engum (link: www.trondengum.com/documentation) has, in his artistic research project “Beat the Distance”, challenged the premises that standard software sets for creative work in the studio, both as a music technologist and as a rock musician.

[29] A music technologist once asked me if I had ever “questioned the mixer”, and suggested, as a vision, another type of device to replace it, with a dramatically different design and functions. I had to admit that this was difficult for me to imagine. This made me realise how much the mixer is part of my instrument, and that starting to use another type of device would be like learning a new instrument. It was of course possible, but I would have to be convinced about the advantages.