This article describes an artistic research project carried out between January 2021 and January 2023. The project focuses on the creation of an artistic product, accompanied by a reflection on the artistic work process.

The outcomes of this project are presented in a digital format optimized for viewing on computers and tablets.

Abstract

The project explores the artistic potential of unpredictability, specifically within the context of electronic music. By referring to an original instrument design—including programming a number of randomized systems—a series of compositions for keyboard instruments were developed. The central theme is the relationship between dialogue and loss of control during the creative process. This project was documented intermediately with new music, as well as technological and procedural reflections.

Project

In this article, I also focus on the process leading up to the actual project formulation. The preliminary studies were as extensive as the project itself, involving the search for an idea that would not only be relevant to me artistically but would also hold significance for others. This relevance applies to the field of music and the academic content embedded in the project.

Communication

Although this article is hosted on the Research Catalogue and is, therefore, likely to be read by an audience with a high academic level, I have taken care to explain the process, assuming that some parts may be entirely new to some readers. As such, the text should also be useful for students at various academic stages.

The inspiration for this project originated from a fascination with Southeast Asian music, specifically gamelan music from the Indonesian islands of Java and Bali. This nearly 2,000-year-old musical tradition remains deeply ingrained in religious ceremonies and secular events, such as theater and dance. Gamelan music, known for strong polyrhythms, is performed by ensembles mainly consisting of different gongs and metallophones. Over time, it has served as a great inspiration for countless Western composers.

One element that especially intrigued me in gamelan music was its microtonal tuning systems. In his book “Music in Bali” (1966), the Canadian ethnomusicologist and composer Colin McPhee provided detailed accounts of these systems. Gamelan ensembles are typically tuned in the Pelog scale (seven tones) or in the Slendro scale (five tones). These scales are unique in the sense that they do not conform to the equally tempered tuning system of the West.

Pure Intonation

In Western music, when we divide a string into whole-number ratios, starting with the fundamental and continuing up through octaves, fifths, major thirds, and so on, we generate the so-called natural scale. We define these intervals as “pure.” However, the problem is that these pure intervals do not “add up.” For example, if we define the 12 tones in Western music by stacking pure fifths (frequency ratio 3:2) on top of each other, Ab0-Eb1-Bb1-F2-C3-G3-D4-A4-E5-B5-F#6-C#7-G#7, the final G#7 will not coincide with the Ab reached by seven pure octave doublings (ratio 2:1) of Ab0; it will be slightly higher. This difference is referred to as the Pythagorean comma.
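The size of this difference can be checked with a little arithmetic. The following is a minimal sketch in Python, not part of the original project; the variable names are illustrative, and the only assumptions are the pure fifth as the ratio 3:2 and the pure octave as 2:1.

```python
import math


def cents(ratio: float) -> float:
    """Convert a frequency ratio to cents (1,200 cents per octave)."""
    return 1200 * math.log2(ratio)


# Twelve stacked pure fifths versus seven stacked pure octaves.
twelve_fifths = (3 / 2) ** 12
seven_octaves = 2 ** 7

# The ratio between the two is the Pythagorean comma.
comma_ratio = twelve_fifths / seven_octaves
comma_cents = cents(comma_ratio)

print(f"12 fifths:  {twelve_fifths:.4f}")
print(f"7 octaves:  {seven_octaves}")
print(f"comma:      {comma_ratio:.5f} ({comma_cents:.2f} cents)")
```

The comma comes out at a little under a quarter of a semitone, which is why the stack of fifths overshoots the octave.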

This difference has led to experimentation with many different tuning systems based on the use of one or more pure tones, driven by the desire to play in all keys. Finally, by the end of the 18th century, a tuning system was established that simply divides a pure octave into 12 equal parts called EDO12, where EDO stands for Equal Division of the Octave. There are deviations from the natural tone system, but it allows for playing in all 12 keys. This tuning system is also called equal temperament.

Javanese gamelan music is tuned in a completely different way. The Slendro and Pelog scales fall outside the equal temperament system and are therefore commonly described as microtonal tunings. Furthermore, the temperament changes when moving up into higher registers.

A deep gong does not correspond with a similar note in a higher octave on, for example, a metallophone. Each ensemble has its own internal tuning system, and several instruments are also tuned in pairs. That is, two identical instruments are tuned so that instrument A sounds higher than instrument B, creating a certain resonance between the two instruments. In Western music, we talk about the harmonic spectrum, where partial tones on a given instrument often relate to the natural tone system. The tonal spectrum in gamelan music is different, as the instruments are constructed in such a way that they produce other overtone structures, known as inharmonic overtones.

Microtonal Western Music

In Western music, we typically refer to microtonal music when tuning systems deviate from EDO12—that is, when the notes are no longer separated by semitones (semitone steps) but by smaller units.

Microtonal music in a Western context is relatively new. In the 20th century, intervals began to be referred to as microtonal, particularly in connection with the analysis of Indian music and “blue notes” in blues scales. Over time, many Western composers have worked with tuning systems other than EDO12.

Here are a few examples listed in chronological order:

1925 - Charles Ives, Three Quarter-Tone Pieces

The work is written for a special two-manual quarter-tone piano, where the upper manual is tuned to EDO12 raised by 50 cents relative to concert pitch, and the lower manual is tuned to EDO12 at concert pitch. It can also be performed on two pianos with the same tuning difference.

1954 - Karlheinz Stockhausen, Study II

A frequency range between 100 and 17,200 Hz is divided into equal intervals.

The distance between the notes is calculated as the 25th root of 5 = 1.066494942.

There are 81 tones:

- First frequency: 100 Hz

- Second frequency: 100 x 1.066494942 = 106.649494, rounded to 107 Hz

- Third frequency: 107 x 1.066494942 = 114.1149, rounded to 114 Hz, and so on
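The whole series can be generated in a few lines. This is a minimal sketch, assuming each tone is computed directly from the exact ratio and only rounded for display (the list above instead multiplies the already rounded value); the constant names are illustrative.

```python
# Interval between neighbouring tones: the 25th root of 5.
RATIO = 5 ** (1 / 25)
BASE_HZ = 100.0
NUM_TONES = 81

# Tone n lies n steps above the base frequency.
frequencies = [BASE_HZ * RATIO ** n for n in range(NUM_TONES)]

for n in (0, 1, 2, NUM_TONES - 1):
    print(f"tone {n + 1:2d}: {frequencies[n]:10.3f} Hz (rounded: {round(frequencies[n])} Hz)")
```

The last of the 81 tones lands just above 17,200 Hz, which matches the upper limit of the frequency range given above.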

1982 - Easley Blackwood, Twelve Microtonal Etudes for Electronic Music Media

Blackwood composed and recorded electronic music pieces using various EDO systems, ranging from EDO13 to EDO24, the latter being a scale of 24 notes made up of quarter tones. In the piece Andantino, EDO16 is used, which shares the following notes with the standard EDO12 (our “normal” Western scale): C-Eb-Gb-A. EDO16 features four distinct dim7 chords, three of which sound identical to regular dim7 chords but in pitches that fall outside the usual concert pitch.
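The notes shared by EDO16 and EDO12 can be found by comparing the two scales in cents. The sketch below is illustrative only; it assumes both scales start on C and uses flat note names for the EDO12 pitches.

```python
from fractions import Fraction

NOTE_NAMES = ["C", "Db", "D", "Eb", "E", "F", "Gb", "G", "Ab", "A", "Bb", "B"]


def edo_cents(divisions: int) -> set:
    """Return the pitches of one octave of an EDO scale, in exact cents."""
    return {Fraction(1200 * step, divisions) for step in range(divisions)}


# Pitches that occur in both scales (exact fractions avoid rounding issues).
shared_cents = sorted(edo_cents(12) & edo_cents(16))
shared_names = [NOTE_NAMES[int(c // 100)] for c in shared_cents]

print([int(c) for c in shared_cents])  # cents above C
print(shared_names)
```

The shared pitches are 300 cents apart, so together they form the one diminished seventh chord that EDO16 has in common with EDO12.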

Blackwood used a Polyfusion Synthesizer to record these compositions. The method of tuning the synthesizer to accommodate the various EDO systems is unclear, but it was likely quite challenging, especially in terms of performance.

The music has a neo-romantic quality. Blackwood wanted to experience different EDO temperaments within a more traditional major/minor harmonic framework, and I find it essential for the music that this choice was made. Had Blackwood opted for a more modern electronic style, which typically moves away from a certain tonality, one would not have experienced the clash between traditional Western harmonies and melodies and the alternative EDO systems in the same way.

1986 - Wendy Carlos, Beauty in The Beast

Carlos used the Crumar General Development System, an additive synthesizer with 32 oscillators capable of tuning to various scales. In the piece Beauty in The Beast, Carlos introduced her own scale systems:

- Alpha, which moves in intervals of 78 cents

- Beta, which uses intervals of 63.8 cents

Neither scale includes an octave (1,200 cents). Alpha, with 15 scale tones, is closest to the octave at 1,170 cents, while Beta, with 19 scale tones, is closest to the octave at 1,212.2 cents.
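These figures follow directly from the step sizes. A small sketch, assuming the step sizes of 78 and 63.8 cents quoted above; the function name is hypothetical.

```python
OCTAVE_CENTS = 1200


def closest_to_octave(step_cents: float) -> tuple:
    """Return (number of steps, total cents) for the step count nearest an octave."""
    steps = round(OCTAVE_CENTS / step_cents)
    return steps, steps * step_cents


for name, step in (("Alpha", 78), ("Beta", 63.8)):
    steps, total = closest_to_octave(step)
    print(f"{name}: {steps} steps = {total:.1f} cents ({total - OCTAVE_CENTS:+.1f} from the octave)")
```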

2010 - Georg Friedrich Haas, Limited Approximations

In this piece, six pianos are tuned in EDO12, with one piano at concert pitch. The remaining five differ as follows: +1/6 (+16.66 cents), +1/12 (+8.33 cents), -1/12 (-8.33 cents), -1/6 (-16.66 cents), and -1/4 (-25 cents). When the pianos are combined, they create the effect of a single piano capable of bending notes, producing a unique microtonal sound.

Microtonal Music in Digital Audio Workstations (DAWs)

I analyzed Easley Blackwood’s Andantino and re-recorded the piece in Ableton Live 11. This was made possible by the Microtuner plugin introduced in version 11. Microtuner works in combination with a given soft synthesizer, allowing you to either design your own microtonal scales or choose from 6,000 presets. When using EDO13, for example, with C3 as the base note, the octave above the base note falls on the MIDI key C#4 rather than C4. Therefore, the layout of a traditional EDO12 MIDI keyboard no longer makes sense visually.
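The shift of the octave on the keyboard can be illustrated with a simple key-to-frequency mapping. This is a sketch under stated assumptions, not a description of how Microtuner works internally: the base key number (60) and base frequency (261.63 Hz) are arbitrary illustrative values, and consecutive keys are assumed to play consecutive scale steps.

```python
def edo_frequency(key: int, divisions: int, base_key: int = 60, base_hz: float = 261.63) -> float:
    """Frequency of a MIDI key when consecutive keys play consecutive EDO steps."""
    return base_hz * 2 ** ((key - base_key) / divisions)


base = edo_frequency(60, 13)
twelve_keys_up = edo_frequency(72, 13)    # where the octave sits in EDO12
thirteen_keys_up = edo_frequency(73, 13)  # where the octave sits in EDO13

print(f"base key:         {base:.2f} Hz")
print(f"12 keys higher:   {twelve_keys_up:.2f} Hz (ratio {twelve_keys_up / base:.4f})")
print(f"13 keys higher:   {thirteen_keys_up:.2f} Hz (ratio {thirteen_keys_up / base:.4f})")
```

In EDO13, the key twelve semitone-keys above the base is still slightly below the octave; the doubling of frequency only arrives one key later.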

In Logic Pro, different temperaments have long been available, with around 100 presets, but only for soft synthesizers. Unlike Ableton, however, Logic’s scales start over after 12 notes, which is obviously a big limitation. If, for example, we play EDO13 starting from C3, we will not hear the 13th note, as the scale restarts at C4, one octave higher. The design of the MIDI keyboard with 12 different pitches is, therefore, a limitation in this context.

In parallel with the microtonal studies on the computer, the idea emerged of designing an instrument that could work with variable tuning systems.

Prepared Instruments

I have a passion for using older analog synthesizers. When I released the album Urodela (Escho, 2019), all sounds were generated from analog instruments, either recorded directly into the computer or by sampling the instruments. The goal has never been to look back in music history and recreate music with these instruments but to explore new compositional paths.

Most composers rely on instruments that have already cemented their place in music history, with their sound and use shaped by time. Notably, over time, idiosyncrasies are connected to these instruments. I am fascinated by the idea of rediscovering these instruments and using them in fresh, innovative settings.

Instrument rediscoveries have a rich history: Henry Cowell’s “string piano,” where the piano strings are directly played with the fingers, or John Cage’s “prepared piano,” where various objects are inserted between the strings to create new sounds.

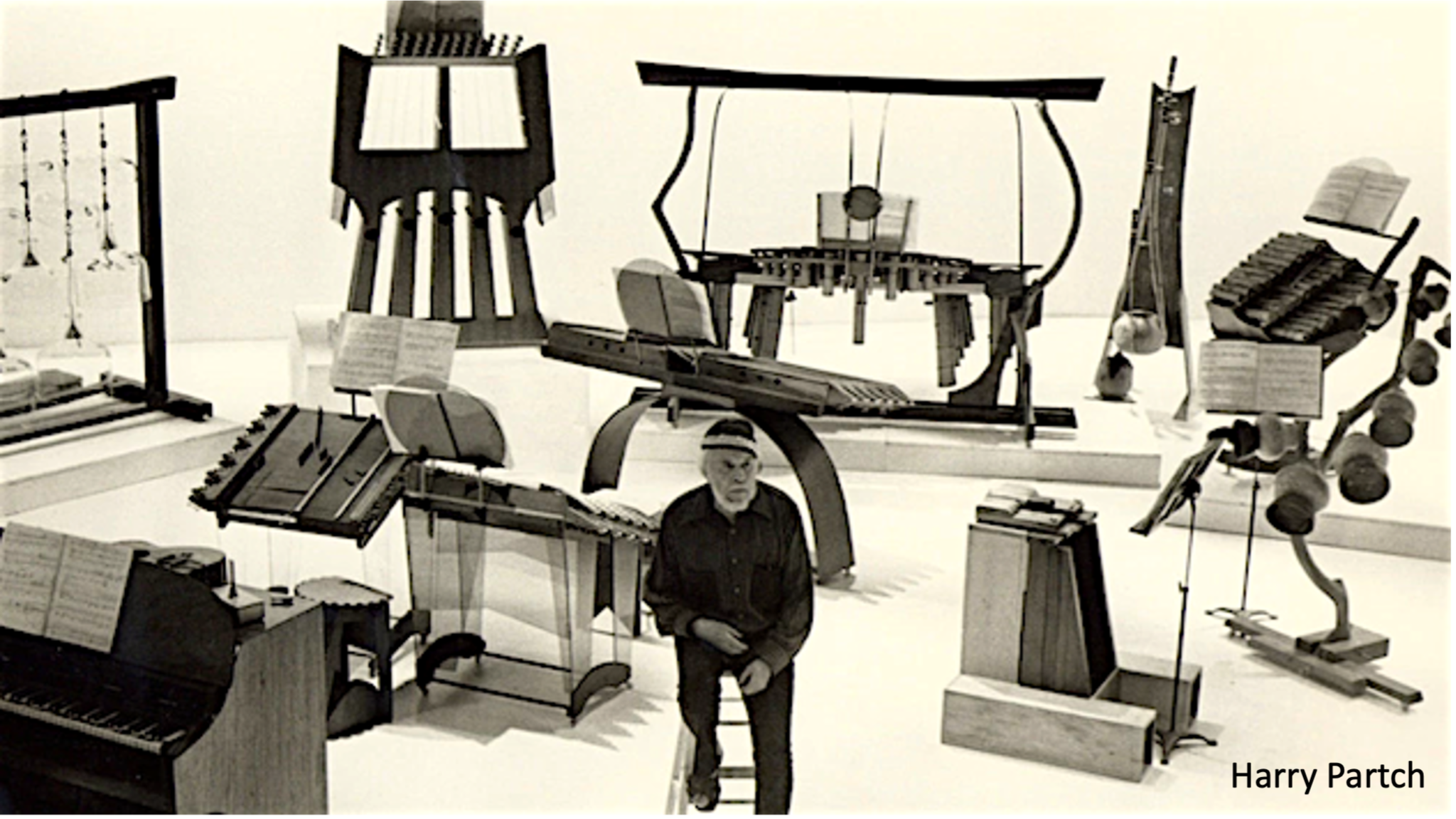

Some composers go a step further and design completely new instruments, like Harry Partch. More recently, Björk did something similar on her album Biophilia (2011), where she introduced the Gameleste, an instrument consisting of bronze bars similar to those used in gamelan ensembles but fitted into a celesta, playable either manually or via MIDI.

As previously mentioned, it is only possible to play microtonally in Ableton or Logic by using soft synths. My interest, however, was in playing microtonally on hardware synthesizers, thus marking the beginning of a long and complex process.

Digital Versus Analog

Initially, the idea was to design a multitimbral analog instrument based on a connection of several synthesizers controlled by a mother keyboard. Analog instruments can actually be tuned into various EDO tunings. Typically, when calibrating a synthesizer, it is tuned to concert pitch (440 Hz), and then a parameter is adjusted so that octaves double the frequency, like 880 Hz. This same parameter can be modified so that scales outside EDO12 can occur.

For my project, a multitimbral setup made sense—to design an instrument where each key corresponds to a specific synthesizer. Oberheim specialized in this with instruments like the 4 Voice, which consists of four independent monophonic synthesizers, each with customizable sound and tuning.

At first, I considered controlling a myriad of analog synths in this way, where each MIDI key on a mother keyboard would control a specific synth. This would be practically feasible since I can control CV and Gate on analog synths using MIDI-to-CV converters. However, as I developed the idea, I also wanted to break new ground in terms of sonic possibilities and focus on a more digital sound. This led me to one of the first digital synths, which is also one of the most popular worldwide, with more than 200,000 units sold: the Yamaha DX-7, which debuted in 1983. I wondered—what if each key had a specific DX-7 that could have its specific tuning and sound?

FM Synthesizers

Unlike most analog synthesizers, which use subtractive synthesis, the Yamaha DX-7 bases its sound design on FM. Analog synthesizers typically use simple waveforms that are rich in harmonics, such as sawtooth and square waves, and the sound design typically involves removing certain harmonics using a filter. With FM, several waveforms interact with each other. The waveforms are divided into carriers and modulators. The carriers create sound, while the modulators affect the carriers.

In the 1970s, several large companies researched digital FM technology and designed special prototypes, such as Bell Labs’ Alles synthesizer, which Laurie Spiegel, among others, used. Some of these instruments were put into production at prices that only a few composers and musicians could afford, for example, the New England Digital Synclavier, the first commercial FM synthesizer, with a selling price ranging from $25,000 to $200,000.

Frequency Modulation/Amplitude Modulation (FM/AM) Radio Transmission

Originally, FM was associated with radio transmission. Radio transmission is the wireless transmission of sound using electromagnetic waves. These waves are not audible in themselves, as audible sound requires changes in air pressure.

Electromagnetic waves are emitted from masts, where they are produced by accelerating and decelerating electrical charges. The electromagnetic radiation used for communication (telephone, radio, TV, etc.) is low-frequency and is not dangerous for people. By contrast, high-frequency radiation, such as that found in ultraviolet light or radioactive radiation, can affect the cells in human tissue.

Humans hear sounds between 20 Hz and 20 kHz. Signals at these low frequencies cannot be transmitted electromagnetically over long distances. Therefore, the data wave (the audio signal) is combined with a high-frequency carrier wave, whereby the total signal is raised to the MHz level. The advantage here is that these signals can be sent over longer distances without requiring particularly large amounts of energy. We typically talk about amplitude modulation and frequency modulation. With AM, the data wave is encoded as variations in the carrier’s amplitude over time. With FM, the data wave is encoded as variations in the carrier’s frequency over time. The latter principle uses a larger frequency spectrum, which provides better sound quality. In addition, FM is less susceptible to interference from other waves, resulting in less noise. When a radio wave hits a receiving antenna, the carrier wave is removed, and what remains is the data wave, which is amplified and thereby becomes audible to the human ear.

FM in Connection With Sound Design

When an oscillator is modulated by another oscillator, multiple overtones, known as sidebands, are introduced in addition to the fundamental tone. This results in more partials than the number of oscillators (carriers and modulators) used. It is essentially the opposite of additive synthesis, where each oscillator is a sound source, and the total number of fundamentals plus overtones equals the number of oscillators. FM synthesizers are far more challenging to program, but in return, they allow for the creation of complex sounds containing many overtones.
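The principle can be sketched with just two sine oscillators. The example below is a minimal illustration, not the DX-7’s actual implementation: it assumes one sine carrier whose phase is modulated by one sine modulator, and the function names and the frequencies 440 Hz and 110 Hz are chosen freely. The sidebands appear at the carrier frequency plus and minus whole multiples of the modulator frequency.

```python
import math


def fm_sample(t: float, carrier_hz: float, modulator_hz: float, index: float) -> float:
    """One sample of a two-oscillator FM tone at time t (seconds).

    `index` (the modulation index) sets how strongly the modulator
    affects the carrier; an index of 0 leaves a plain sine wave.
    """
    modulation = index * math.sin(2 * math.pi * modulator_hz * t)
    return math.sin(2 * math.pi * carrier_hz * t + modulation)


def sideband_frequencies(carrier_hz: float, modulator_hz: float, order: int) -> list:
    """Carrier plus/minus k times the modulator, for k = 0..order.

    Negative frequencies are reflected back into the positive range.
    """
    freqs = {abs(carrier_hz + k * modulator_hz) for k in range(-order, order + 1)}
    return sorted(freqs)


# Two oscillators already yield five spectral components up to order 2.
print(sideband_frequencies(440, 110, 2))

# A short excerpt of the waveform at a 48 kHz sample rate.
samples = [fm_sample(n / 48000, 440, 110, 2.0) for n in range(8)]
print([round(s, 4) for s in samples])
```

How loud each sideband is depends on the modulation index, which is why changing a single modulator level on an FM synthesizer can alter the timbre so drastically.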

In the mid-1960s, technician and instrument designer Don Buchla began using FM in synthesizers like the Music Easel. However, it was composer John Chowning’s experiments at Stanford University in 1967 that led to FM technology being patented. In 1973, Yamaha Corporation purchased the license with plans to incorporate FM technology into their future music products. The first such instrument was the GS-1 digital piano in 1980, which generated sounds via FM synthesis. While users could only access presets and not program sounds, the GS-1’s FM synthesis was notable for creating complex transients, including percussive sounds. This was something that older analog instruments struggled to achieve. The GS-1 was, among other things, used by the band Toto, and its distinctive FM marimba sounds became a central part of the soundscape of their hit single “Africa” in 1982.

The following year, in 1983, Yamaha released the DX-7 synthesizer, which quickly became a commercial success due to its unique FM sound and relatively affordable selling price of approximately $2,000, enabling many musicians to acquire the instrument.

The DX-7 features six operators, which act as the carriers or modulators mentioned earlier. The six operators, each of which generates a sine wave, can be arranged in various combinations known as algorithms, of which there are 32. Programming the DX-7 involves adjusting the pitch and envelopes of individual operators and switching between the different algorithms. Coarse pitch adjustments are made in whole-number frequency ratios, as in the natural tone system, rather than in EDO12 steps.

The DX-7 offers 16-note polyphony and is a mono instrument. Its output is 12-bit, which significantly impacts the sound. After Yamaha and John Chowning’s patent expired, many other companies began designing similar FM synths, particularly soft synths. Native Instruments’ FM-8, for example, can both generate its own FM sounds and read sysex files from the DX-7. I have tested the same sounds in both the FM-8 and DX-7 and found that the DX-7 delivers more punch in the sound, probably due to the lower bit depth.

One could also insert a bit-reduction plugin after the FM-8 and maybe get closer to the original sound.

MIDI and Instrument Design

In many ways, 1983 was a defining year in music history, marking key technological shifts. Alongside the Yamaha DX-7 filling the soundscape of countless pop productions, MIDI technology had just been launched—a breakthrough that standardized communication between electronic instruments and computers. This ushered in an era of cleaner, more digital sound, with synthesizer arrangements increasingly controlled by primitive computers called sequencers, enabling a tighter, more quantized rhythmic sound.

The emphasis on clean sound was also reflected in the design. Apart from its preset touchpads, the DX-7 featured only a volume fader, a programming fader, and a small LCD display. This streamlined interface made the already complicated FM programming even more difficult. This design trend also influenced instruments from other manufacturers, such as Roland’s JX series and Korg’s DW series.

Programming on the DX-7 required entering specific parameter numbers on the display (e.g., “attack” for a given envelope) and then adjusting with the programming fader.

With each envelope containing eight parameters (four rates and four levels) and with up to six envelopes per sound, managing 48 parameters with a single fader was nearly impossible.

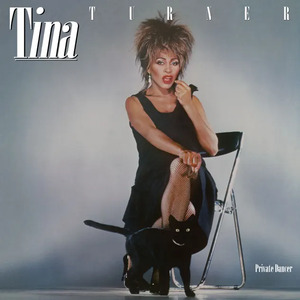

DX-7 Users and Presets

This complexity led many musicians to rely on the included factory sounds or purchase new sounds on sound cartridges. Speaking of idiosyncrasies, many of the DX-7’s factory sounds became classics in music history: the DX-7 bass, heard on a-ha’s “Take On Me” (1985), the harmonica solo in Tina Turner’s “What’s Love Got To Do With It” (1984), and the iconic DX-7 Rhodes sound, which has established itself as an independent sound in music history and is still emulated in new instruments. It is typically used in softer R&B productions, such as Mariah Carey’s “Vision of Love” (1990). Benny Andersson and Björn Ulvaeus also used several DX-7 sounds in their musical Chess (1984), including the harp sound in the title track and the Rhodes sound in “I Know Him So Well.”

Aside from the many pop productions in the 1980s, there was one person in particular who used the DX-7 in more alternative music. Brian Eno, the British artist and producer, employed it on diverse projects, such as his album Apollo (1983), created in collaboration with musician and producer Daniel Lanois, U2’s album The Unforgettable Fire (1984), and David Bowie’s Outside (1995). Eno’s unique approach to sound design on the DX-7 was shared in Keyboard Player magazine, in the form of settings that readers could use to program the sounds into their own DX-7.

Eno used a special hardware programmer called the DX Programmer from Jellinghaus. Here, each parameter was represented by a physical button, making programming more intuitive. This made it possible to gain more control over the instrument. Today, a replica of this controller by Dutch company Dtronics can be bought for approximately $1,400.

Finally, it should be mentioned that jazz musicians also adopted the DX-7. In particular, pianist, composer, and band leader Chick Corea used the DX-7, as well as countless other Yamaha instruments, on albums like The Chick Corea Elektric Band (1986), Light Years (1987), and Eye of the Beholder (1988).

DX-7 in Denmark

In Denmark, the DX-7 was also a major success, especially for composer and musician Jørgen Emborg, who gained recognition for his use of the DX-7 in the jazz fusion orchestra Frontline. Despite not having the Jellinghaus controller, Emborg managed to program his own sounds. Jørgen and I were colleagues at RMC for a number of years, and we occasionally discussed the DX-7.

Jørgen asked me to help him search the internet for a specific sound, “Midnight,” which he had programmed and used in Frontline’s rendition of Thelonious Monk’s “Round Midnight” (1985). He lost the sound when he sold his DX-7. Thanks to MIDI, the DX-7 could save files in sysex format, allowing sounds to be shared as data files online. Jørgen hoped the “Midnight” sound would resurface, and I still search for it in various large DX-7 sysex banks. Incidentally, Jørgen also used the special breath controller BC1, which came with the DX-7, to control various elements such as LFO modulation, brilliance, and volume.

Kenneth Knudsen, from the same generation, also stood out. For years, Knudsen’s setup featured a DX-7, two Roland Jupiter-8s, and a number of rack modules, which can be heard on albums like Heart To Heart (1986) and Bombay Hotel (1989).

DX-7s in a Rack

As mentioned earlier, my idea was to design an instrument where each key on a keyboard could control an independent DX-7. Rather than investing in many DX-7s, I chose to invest in racks containing DX-7 modules.

In 1984, the year after Yamaha released the DX-7, a special rack version called the TX-816 came on the market. In the 1980s, rack-mounted synthesizers were popular, controlled via mother keyboards like Yamaha’s KX-88 (which I also use) via MIDI. This setup allowed for simplicity—a single keyboard on stage could control all kinds of rack modules backstage via various split functions on the keyboard.

The TX-816 was a sound module housing up to eight DX-7 synthesizers (called TF-1 modules). While sounds could only be programmed and dumped from the DX-7, the possibilities for sound design expanded enormously. You could configure all modules to play the same sound, adjusting pitch and panning for each module to create a much fuller stereo sound (since the DX-7 itself was a mono instrument). Alternatively, you could use each module independently and, with the help of a sequencer, build complicated MIDI arrangements.

Artists and bands like Mr. Mister, Chick Corea, and Toto used sound stacking (layering different sounds together), while others like Scritti Politti and Madonna utilized multitimbral arrangements. I had the pleasure of being in a band with two friends whose father owned a large recording studio that included two TX-816 units. I was allowed to borrow them, and between those two modules (one with 8 TF-1s, the other with 4 TF-1s) and my own DX-7, I had 13 DX-7s at my disposal.

The TX-816’s interface was limited: the unit was four rack units high and offered eight digital displays and three buttons on each module. While the module could play DX-7 sounds, programming was not possible directly. The three buttons only allowed for basic functions like program changes, tuning, and volume. It goes without saying that it was extremely complicated to operate this instrument, not least in live settings.

Hardware

I acquired several TX-816 units for this project, starting with one from Hamburg, Germany. Since then, I have invested in two more modules—one from Modena, Italy and another from St. Heddinge, Denmark. For the sake of clarity, I’ve named each TX-816 after the city where I purchased it.

In addition to buying TX-816s, much of the work involved setting up the system. This included soldering audio and MIDI cables to all the modules, buying audio converters capable of recording all the instruments simultaneously, and sourcing numerous MIDI converters that could send messages to all 24 TF-1 modules. Setting up the MIDI converters proved especially challenging, with issues ranging from connection stability to handling MIDI speeds, switching operating systems, managing MIDI updates, and so on.

Software

It quickly became apparent that there was a need to design control software from scratch, and it was natural to start by building my own DX-7 programmer to gain full knowledge of the instrument, including knowledge of control options, and to understand which MIDI commands control what. At the same time, I aimed to create a visual design that I could relate to.

As mentioned earlier, there are quite a few expensive hardware programmers and some software controllers, but for me, it was essential to get to the core of the instrument’s construction and to add new control options that do not exist in other hardware/software versions. The DX-7 was released at the same time as MIDI and celebrates its 41st anniversary this year; therefore, I expected quite a few challenges in terms of control. I quickly chose Max as the programming environment, with control taking place via MIDI.

MIDI and Data Conversion

MIDI, which stands for Musical Instrument Digital Interface, is a system consisting of digital serial commands made up of binary numbers. Previously, you could only “remote control” analog synthesizers using CV (control voltage - pitch) and Gate (trigger), with the limitation that only a single note could be triggered.

MIDI, on the other hand, can send polyphonic messages, allowing multiple tones to be triggered at the same time. However, it is important to note that the tones are triggered serially, meaning one after the other. Since the MIDI speed is 31,250 bits per second (31.25 kilobaud), the “serial latency” is typically not audible.
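A rough calculation shows why this serial latency is rarely noticeable. The sketch below assumes the standard MIDI serial framing of 10 bits on the wire per byte (8 data bits plus a start and a stop bit) and a 3-byte note-on message, and it ignores optimizations such as running status; the names are illustrative.

```python
MIDI_BITS_PER_SECOND = 31_250
BITS_PER_BYTE_ON_WIRE = 10  # 8 data bits + 1 start bit + 1 stop bit
NOTE_ON_BYTES = 3           # status byte, key number, velocity


def transmission_ms(num_bytes: int) -> float:
    """Time in milliseconds needed to send `num_bytes` over a MIDI cable."""
    return num_bytes * BITS_PER_BYTE_ON_WIRE / MIDI_BITS_PER_SECOND * 1000


single_note_ms = transmission_ms(NOTE_ON_BYTES)
ten_note_chord_ms = transmission_ms(NOTE_ON_BYTES * 10)

print(f"one note-on:     {single_note_ms:.2f} ms")
print(f"ten-note chord:  {ten_note_chord_ms:.2f} ms")
```

A single note takes just under a millisecond, so the delay only starts to add up when many notes or long sysex messages are sent at the same moment.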

Furthermore, MIDI in the DX-7 can control all programming parameters, enabling you to trigger, program, and control the instrument from the computer. In the digital domain, control is executed using binary numbers—number combinations consisting solely of the digits 1 and 0.

The size of binary numbers—the number of digits in a binary number—is expressed in bits:

- 1 bit = one digit, with two possible combinations (1 or 0)

- 2 bits = two digits, with four possible combinations of 1 and 0 (11, 00, 01, 10).

MIDI communicates in units of 8 bits (8 digits) called bytes. The first digit in each byte indicates the type of byte: 1 for a status byte (for example, key on) and 0 for a data byte. The remaining seven digits indicate the value.

The highest data value for the seven-bit number is 1111111 = 127, making this the highest number that can be used in MIDI communication. That is why we constantly come across control values between 0 and 127 when using MIDI control.

If we need higher values, multiple bytes are used together.

Pitchbend uses two data bytes, equating to 14 bits. Therefore, the maximum value here is 11111111111111 = 16,383, which gives 16,384 possible values when 0 is included.
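How two 7-bit data bytes form one 14-bit value can be sketched as follows. The function names are illustrative; the only assumptions are that the top bit of a byte separates status from data and that the less significant byte is sent first, as in a pitch bend message.

```python
def byte_type(byte: int) -> str:
    """Classify a MIDI byte by its first (most significant) bit."""
    return "status" if byte & 0b10000000 else "data"


def combine_14bit(lsb: int, msb: int) -> int:
    """Join two 7-bit data bytes into one 14-bit value."""
    return (msb << 7) | lsb


def split_14bit(value: int) -> tuple:
    """Split a 14-bit value into (lsb, msb), each in the range 0-127."""
    return value & 0b1111111, (value >> 7) & 0b1111111


print(byte_type(0b10010000), byte_type(0b01000000))
print(combine_14bit(127, 127))  # all fourteen bits set
print(split_14bit(8192))        # the midpoint of the 14-bit range
```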

Bytes are often described in hexadecimals, as this makes reading easier. Hexadecimals consist of the following sequence of numbers and letters: 0 1 2 3 4 5 6 7 8 9 A B C D E F = 16 different characters. Each hexadecimal covers four binary digits, known as a nibble, which has 16 different combination possibilities that can be precisely expressed using hexadecimal numbers.

Consequently, a byte can be translated into two hexadecimals, which saves space. Furthermore, hexadecimals can be converted into decimal numbers. The number of possible combinations for two hexadecimals is 256 (16x16) (max. number is 255 because 0 is also included). Thus, two hexadecimals can be represented as follows:

FF (hex) = 255 (decimal).

When programming control software for the DX-7, you are constantly working between these different types of information:

- 00000000 (binary) = 00 (hex) = 0 (decimal)

- 11111111 (binary) = FF (hex) = 255 (decimal)
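These conversions are easy to reproduce in most programming languages. A minimal Python sketch, with a hypothetical helper name, that prints one byte in all three notations:

```python
def describe_byte(value: int) -> str:
    """Show one byte (0-255) as binary, hexadecimal, and decimal."""
    if not 0 <= value <= 255:
        raise ValueError("a byte must be between 0 and 255")
    return f"{value:08b} (binary) = {value:02X} (hex) = {value} (decimal)"


for value in (0, 127, 240, 255):
    print(describe_byte(value))

# Converting back from text to a number:
print(int("FF", 16), int("11111111", 2))
```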

System Exclusive

When information is sent from Max to the DX-7, it takes place in sysex format. Sysex stands for System Exclusive and ensures that the MIDI message is addressed to a specific instrument. Here, sysex is written in decimal numbers, that is, in the decimal system mentioned in the previous section, so the conversion takes place in the reverse order.

Adjusting the parameter “Algorithm select” in DX-7 looks like this:

240 67 16 6 /variable/ 247

▪ 240: start of message

▪ 67: manufacturer no. (Yamaha)

▪ 16: type (DX-7)

▪ 6: parameter address for Algorithm select

▪ /variable/: where you choose between algorithms 0–31 (32 options)

▪ 247: end of message (EOX, end of exclusive)

Translated to hexadecimal:

F0 43 10 06 /variable/ F7

Translated into binary:

11110000 01000011 00010000 00000110 /variable/ 11110111

In this way, various control messages can be sent to the DX-7. It has taken a long time to clarify which sysex addresses correspond to the individual parameters in DX-7 and how they should be represented graphically. There is limited information regarding these parameters, which are typically only available in Japanese manuals. It has often been easier to “record” MIDI commands from the DX-7 into programs like Logic Pro, where you can register a specific MIDI address and convert it into various formats.
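The translation between the three notations of the “Algorithm select” message can be automated. The sketch below simply reproduces the byte sequence as it is listed above and formats it; it does not talk to any MIDI hardware, and the function names are hypothetical.

```python
def algorithm_select_message(algorithm: int) -> list:
    """Build the byte sequence listed above for a given algorithm (0-31)."""
    if not 0 <= algorithm <= 31:
        raise ValueError("algorithm must be between 0 and 31")
    # start, manufacturer (Yamaha), type, parameter address, value, end
    return [240, 67, 16, 6, algorithm, 247]


def as_hex(message: list) -> str:
    """Format each byte as two hexadecimal digits."""
    return " ".join(f"{byte:02X}" for byte in message)


def as_binary(message: list) -> str:
    """Format each byte as eight binary digits."""
    return " ".join(f"{byte:08b}" for byte in message)


message = algorithm_select_message(31)
print("decimal:", " ".join(str(byte) for byte in message))
print("hex:    ", as_hex(message))
print("binary: ", as_binary(message))
```

Padding every byte to two hexadecimal or eight binary digits keeps the columns aligned, which makes recorded MIDI data much easier to compare by eye.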

The programmer has six main sections, one for each operator, each with its own envelope and pitch controls, alongside the algorithm selector, LFO, and pitch envelope. Notice that extra panels have been added: Random tuning, EDO, and Random. Random tuning and EDO cover my specially designed functions for controlling another Yamaha module, the TX-802.

The TX-802 is the rack version of the DX-7 MKII, which is an eight-part multitimbral version of the DX-7. This means you can combine up to eight different sounds at the same time, but it will quickly consume the 16-voice polyphony the more sounds you stack. The TX-802 also allows for the use of microtonal scales, and that is exactly what these extra parameters in my controller facilitate. By clicking on “panel” in the Random panel, green (semitones) and red (fine tuning) faders appear, which control the microtonal programming. If parameters are selected in Random Tuning, the tuning will constantly change at a selected speed, meaning the instrument will constantly alter pitch for each note.
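The split into a semitone value and a fine-tuning value can be illustrated with a small calculation. This is a hypothetical sketch only: it expresses the fine tuning in cents, whereas the actual units and value ranges used by the TX-802 and by the faders in the controller are not specified here.

```python
def edo_step_to_faders(step: int, divisions: int) -> tuple:
    """Split an EDO scale step into (nearest semitone, fine offset in cents)."""
    cents = step * 1200 / divisions
    semitones = round(cents / 100)
    fine_cents = cents - semitones * 100
    return semitones, fine_cents


# EDO16: each step is 75 cents, so most steps need a fine-tuning offset.
for step in (0, 1, 3, 4):
    semitones, fine = edo_step_to_faders(step, 16)
    print(f"step {step}: {semitones} semitones, {fine:+.1f} cents")
```

Every fourth step of EDO16 needs no fine offset at all, which corresponds to the four notes it shares with EDO12.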

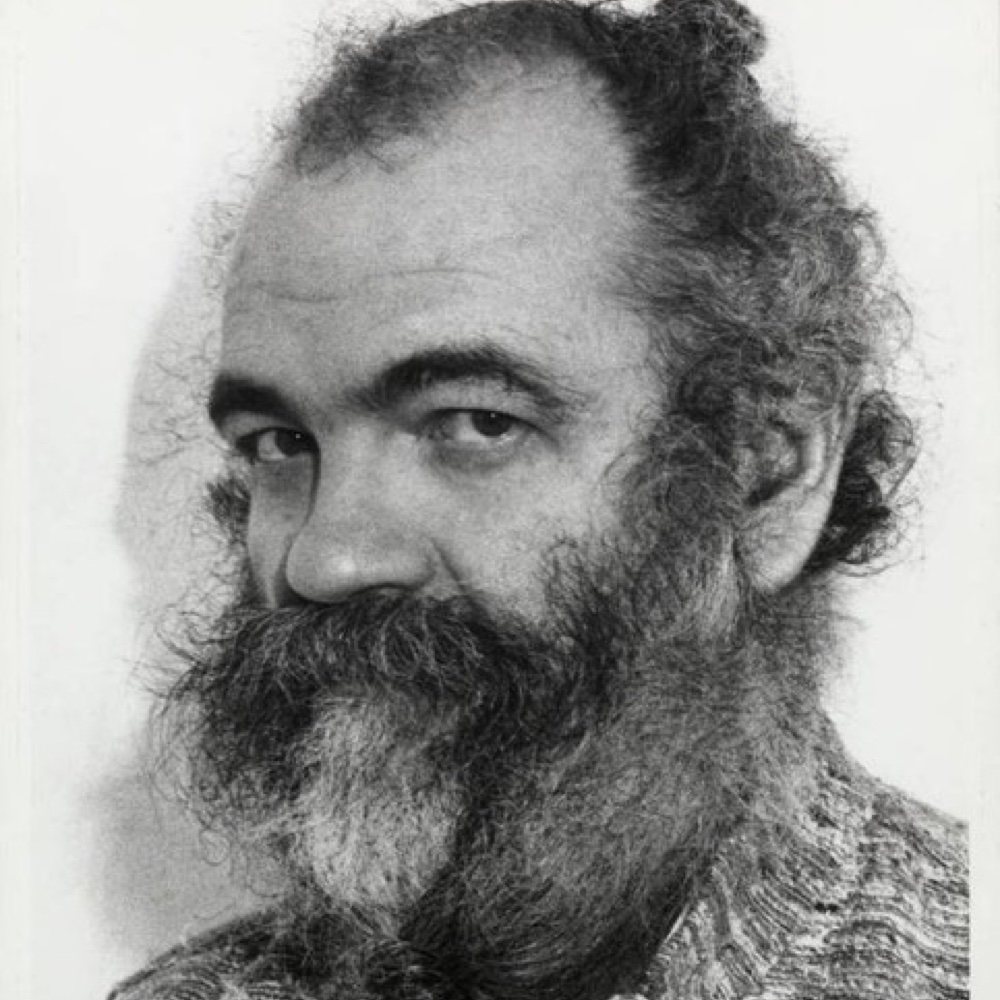

By the way, the person in the photo is Easley Blackwood.

Elsewhere in this controller, I have added similar functions based on degrees of randomness. However, it turned out that the DX-7, due to the very early version of MIDI, cannot adjust all parameters while playing. Noise is added to the audio when changing parameters, which is obviously not optimal.

When working with such an old instrument and the latest software, all sorts of problems arise, and the programming aspect has been extremely extensive. Often, a simple problem could take weeks to solve and require all sorts of troubleshooting methods. I will spare the reader from diving into this further, including a more detailed review of the programmer. Instead, I will just show a picture of the programmer in edit mode. Edit mode shows the “backside” of the system, where objects with the letter “p” indicate submenus containing code. Parts of the code can be saved in a submenu, which can then be saved in other submenus—much like the principle of Russian Matryoshka dolls.

Having designed the programmer, I now had a thorough knowledge of the instrument, and at the same time, the idea of an instrument filled with randomized parameters was born.

Randomized Processes

With the design of the DX-7 programmer, I gained a good insight into the instrument’s capabilities, enabling me to program my own sounds and add various extra control parameters, such as randomized functions that were not present on the original instrument. It quickly became clear that chance functions were central to the subsequent design process.

In Max, we typically work with two types of randomness: random and drunk. Random is based on pure chance, like the selection of any number between 0 and 10. Drunk is a more controlled randomness, where we can dictate which numbers are possible to choose in relation to the current value. For example, if we again work with numbers between 0 and 10 and the current value is 5, we can set how far above or below 5 the next random number may fall. If this step value is 2, the options are 3, 4, 6, and 7, and the result always stays within the minimum of 0 and the maximum of 10. You can also control whether the numbers move only up, only down, or in both directions. Drunk should be understood as a drunk person who, despite some detours, still intends to move from A to B: a form of chance that often has a more human character than random.
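The difference between the two kinds of randomness can be sketched in a few lines of Python. This is only an illustration of the behaviour as described above, not the Max objects themselves: the function names are hypothetical, and the exclusion of a zero step follows the example in the text rather than any documented behaviour of Max.

```python
import random


def pure_random(low: int, high: int, rng: random.Random) -> int:
    """Pure chance: any value in the range is equally likely."""
    return rng.randint(low, high)


def drunk_step(current: int, max_step: int, low: int, high: int,
               rng: random.Random, direction: str = "both") -> int:
    """Controlled chance: move at most `max_step` away from `current`.

    `direction` may be "up", "down" or "both". A step of zero is left
    out, matching the example in the text (assumption of this sketch).
    """
    sizes = list(range(1, max_step + 1))
    if direction == "up":
        steps = sizes
    elif direction == "down":
        steps = [-s for s in sizes]
    else:
        steps = [-s for s in sizes] + sizes
    candidate = current + rng.choice(steps)
    # Keep the result inside the overall minimum and maximum.
    return max(low, min(high, candidate))


if __name__ == "__main__":
    rng = random.Random()
    value = 5
    walk = []
    for _ in range(16):
        value = drunk_step(value, 2, 0, 10, rng)
        walk.append(value)
    print("drunk: ", walk)
    print("random:", [pure_random(0, 10, rng) for _ in range(16)])
```

Printing both sequences side by side shows the character of each: the random values jump anywhere in the range, while the drunk values wander in small steps from wherever they currently are.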

TX-2416

Based on the knowledge I had acquired in connection with the design of the DX-7 programmer, I set about designing a program for the three connected TX-816s. While parts of the code from the DX-7 programmer could be reused, there was still a significant amount of coding and problem-solving that had to be done along the way.

A myriad of special randomized parameters were incorporated, particularly because all SysEx messages could be sent while the instrument was playing without producing any digital noise. The TX-816 was produced a year after the DX-7, and it seems the MIDI implementation had already been improved.

An example of a parameter is TF-1 assignment—specifically, how the MIDI keyboard controls the 24 TF-1 modules. This determines whether a single key triggers multiple TF-1 modules or just one. The video showcases the latest version of the controller, which contains the three TX-816 modules.

The names displayed indicate which physical module is activated, corresponding to the cities where the modules were purchased: Hamburg, Modena, and St. Heddinge.

In the picture, a black-and-white graphic can be seen in the center at the bottom. In reality, it consists of buttons that turn TF-1 modules on and off for each individual key (i.e., for each key, there are 24 TF-1 on/off buttons). With 88 keys, this results in 2,112 buttons. The short side represents the TF-1 side, while the long side represents the keyboard side. I can manually switch between different assignments or automate this process using degrees of randomness. I have also incorporated a MIDI controller pedal, which can control various parameters while playing.
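The grid of buttons described above can be thought of as a table with one row per key and one on/off cell per TF-1 module. The following Python sketch shows that idea under stated assumptions: the names are hypothetical, the random fill is only one possible way of automating the assignment, and none of this reflects the actual Max implementation.

```python
import random

NUM_KEYS = 88      # keys on the keyboard, as stated in the text
NUM_MODULES = 24   # TF-1 modules across the three TX-816 racks


def empty_assignment() -> list[list[bool]]:
    """One row per key, one on/off cell per TF-1 module."""
    return [[False] * NUM_MODULES for _ in range(NUM_KEYS)]


def randomize_assignment(assignment: list[list[bool]], probability: float,
                         rng: random.Random) -> None:
    """Switch each cell on with the given probability (a "degree of randomness")."""
    for row in assignment:
        for module in range(NUM_MODULES):
            row[module] = rng.random() < probability


def modules_for_key(assignment: list[list[bool]], key: int) -> list[int]:
    """Return the indices of the modules that a given key triggers."""
    return [module for module, on in enumerate(assignment[key]) if on]


if __name__ == "__main__":
    grid = empty_assignment()
    print(sum(len(row) for row in grid))  # total number of buttons
    randomize_assignment(grid, 0.25, random.Random())
    print(modules_for_key(grid, 39))
```

A low probability gives sparse, single-module keys, while a high probability makes most keys trigger many modules at once.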

Examples of randomized parameters:

• Volume for the individual modules

• Change scales (both microtonal and equal temperaments)

• Random sustain of individual modules

• Random portamento of individual modules

• Change of sounds

• Others

I also added a metaprogrammer, which can be seen on the right side. Rather than programming sounds in the AP-7 alone, the TX-2416 allows me to program all 24 modules simultaneously. For now, however, I have limited it to the parameters Coarse, Fine, Algorithm, Radio, Feedback, and Interference. This could be expanded further, but for this project, it made sense to focus on the above parameters.

Versions and Pedals

I have built several versions of the instrument, with a particularly big challenge being the ability to send large amounts of MIDI information simultaneously without affecting note on/off messages. At one point, after many complicated experiments, I had to develop a special pedal that could stop any hanging MIDI notes.

In addition, I can use the pedals to control the aforementioned randomized parameters, as well as the degree of randomness while composing/improvising on the keyboard.
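How the pedal for hanging notes works internally is not described here, so the following Python sketch only shows one common way such a "panic" function can be built from standard MIDI messages. It assumes the receiving modules respond to the All Notes Off channel mode message (controller 123); the explicit note-off messages are included as a fallback for devices that do not. The function names are hypothetical.

```python
ALL_NOTES_OFF_CONTROLLER = 123  # standard MIDI channel mode message
CONTROL_CHANGE_STATUS = 0xB0    # status byte for channel 1; add channel index
NOTE_OFF_STATUS = 0x80          # status byte for channel 1; add channel index


def all_notes_off_messages() -> list[bytes]:
    """One All Notes Off message for each of the 16 MIDI channels."""
    return [bytes([CONTROL_CHANGE_STATUS | channel, ALL_NOTES_OFF_CONTROLLER, 0])
            for channel in range(16)]


def explicit_note_offs(channel: int) -> list[bytes]:
    """Fallback: a note-off for every one of the 128 notes on one channel."""
    return [bytes([NOTE_OFF_STATUS | channel, note, 0]) for note in range(128)]


if __name__ == "__main__":
    for message in all_notes_off_messages():
        print(message.hex(" "))
```

The messages are returned as raw bytes so that they can be handed to whatever MIDI output is in use.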

Design of EDO16 Keyboard

At some point in the process, it struck me that controlling this new monster of an instrument from a traditional EDO12 keyboard was too conventional. Having played and composed on the piano all my life, I felt it would be a good idea to challenge myself in relation to the physical design of the instrument. Initially, I wanted to buy a special controller that was completely different from a traditional keyboard instrument. However, due to budget constraints, I decided to buy two M-Audio keyboards, which I then rebuilt into an EDO16 keyboard. As shown in the image, I have designed a keyboard that retains some similarities to the EDO12 in terms of the combination of white and black keys.

Controlled Randomness

Central to the process has been designing an instrument that is constantly in some form of movement. For instance, the pitch is adjusted separately for each TF-1 module while playing, allowing for sliding in and out of different temperaments. A myriad of sub-parameters can change, from small, subtle adjustments of individual sounds to drastic shifts of entire instrument groups. Hence, the album’s title: The Ever-Changing Instrument. Many parameters are in constant motion, causing the instrument to take on the characteristics of a living organism during the process of composing/improvising. The sound of a specific key may change from one second to the next, placing you on the border between familiar and unknown territories. With pedals, various degrees and types of randomness for various parameters can be controlled while improvising.

Simultaneous Instrument Design and Composition

While the software design was going on, I also began composing on the instrument and recording various parts. In general, composition and programming have gone hand in hand. The process of software design has inspired me to write music, and composition on the instrument has generated new ideas for its design. I see the entire process as a form of composition.

Chance

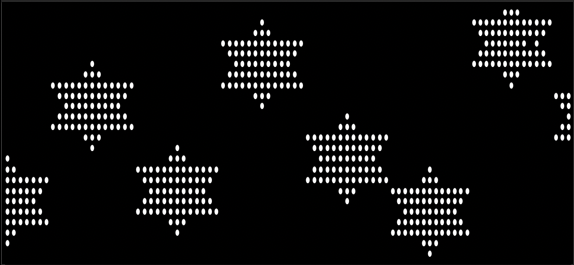

The idea of incorporating degrees of randomness into the compositional process is well-known and is particularly used in the music of John Cage. Cage’s use of chance typically involved choosing between multiple options in the creative process. Among other things, he drew upon the Chinese I Ching system, which consists of 64 hexagrams, where each hexagram is generated by tossing, for example, three coins. Each hexagram represents a certain state, and the 64 states together form a cycle. Cage’s application of I Ching differed from work to work; one example is explained in the interview book “Conversing with Cage” by Richard Kostelanetz. When composing the Freeman Etudes, Cage selected between different star maps (p. 94). In this example, Cage used the I Ching solely as a random generator with 64 possibilities.
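The use of the I Ching as a random generator with 64 possibilities can be illustrated with a short Python sketch of the traditional three-coin method, in which three coins are tossed six times, once for each line of the hexagram. The index from 0 to 63 produced here is simply a binary encoding of the six lines; it is an assumption of this sketch and does not correspond to the traditional hexagram numbering, nor to Cage's own working procedure.

```python
import random


def toss_line(rng: random.Random) -> int:
    """Toss three coins (heads = 3, tails = 2) and add them up.

    Sums of 7 and 9 give an unbroken (yang) line, 6 and 8 a broken (yin)
    line. Returns 1 for yang and 0 for yin.
    """
    total = sum(rng.choice((2, 3)) for _ in range(3))
    return total % 2


def toss_hexagram(rng: random.Random) -> tuple[list[int], int]:
    """Build six lines from the bottom up and encode them as 0..63."""
    lines = [toss_line(rng) for _ in range(6)]
    index = sum(bit << position for position, bit in enumerate(lines))
    return lines, index


def choose(options: list[str], rng: random.Random) -> str:
    """Pick one of up to 64 options, in the spirit of a 64-way chance decision."""
    _, index = toss_hexagram(rng)
    return options[index % len(options)]


if __name__ == "__main__":
    rng = random.Random()
    print(toss_hexagram(rng))
    print(choose(["star map A", "star map B", "star map C", "star map D"], rng))
```

Because each line is equally likely to be broken or unbroken, all 64 outcomes are equally probable in this sketch.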

In Per Nørgård’s work I Ching, four states were used: I. Thunder Repeated: The Image of Shock (hexagram no. 51), II. The Taming Power of the Small – nine sounds (hexagram no. 9), III. The Gentle, the Penetrating (hexagram no. 57), and IV. Towards Completion: Fire over Water (hexagram no. 64). While it remains unclear how the I Ching system influences the compositional process, it seems to have some impact on the resulting music.

In my project, several electronic chance processes run at the same time and to varying degrees while I am improvising and composing. One could say that Cage’s forms of chance represent crossroads in a compositional process, whereas my chance structures generate unpredictability in the moment.

Unpredictability

I have always aimed for there to be some kind of conversation between me and the instrument—where the constant movement of the instrument through various randomized processes requires me to engage with these adjustments while improvising/composing on the EDO16 keyboard. When composing on a “changeable” instrument, it is necessary to accept that ideas and decisions may shift to some extent along the way.

It is imperative to have strong compositional ideas that can withstand even quite radical instrument changes. Conversely, when recording a certain compositional passage, it is crucial to be ready to incorporate improvisation when the instrument demands it. This creates a balance between having or losing control in a creative situation. It presents a type of problem-solving that prevents overthinking in decision-making and opens up the possibility for something deeper. Achieving that state of mind has been my goal throughout the process of building and operating the instrument.

Compositional Ideas

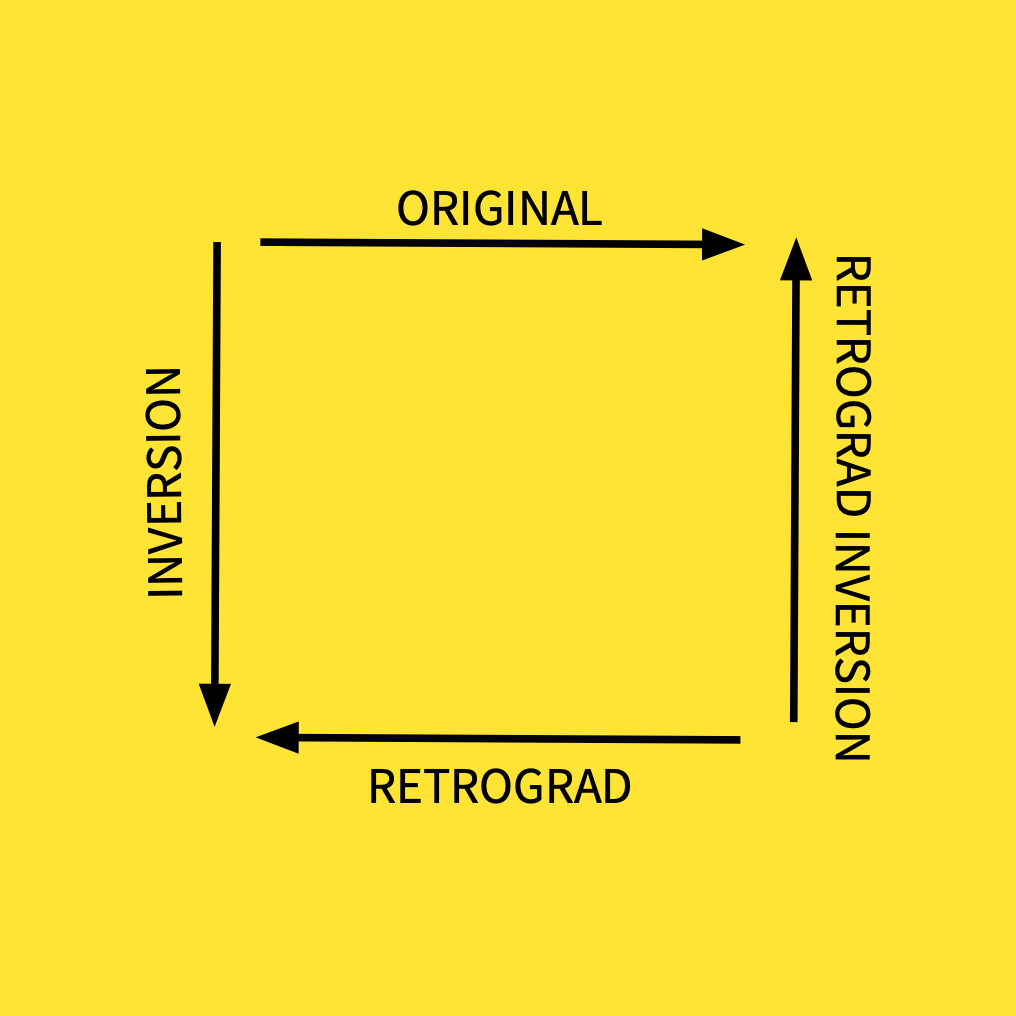

Several methods have been used when composing the music. One example is the use of tone rows, a method that dates back to Arnold Schönberg’s 12-tone music. A tone matrix is created by selecting a tone row. Tone rows do not necessarily need to be based on 12-tone music; they can also include repetition of tones. When a certain tone row is established, the inversion of the row is found. From each tone in the inversion, the original tone row is recreated but in different transpositions. Transpositions can be either diatonic (within a certain scale) or chromatic (specific intervals).

Once the matrix is created, it is possible to select different parts of rows: original rows, inversions, and reversed rows—all in different transpositions. This method, like working with unpredictability, combines decisions made by me (the first tone row) with rows I didn't plan (inversions and reversed rows).
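The matrix construction described above can be sketched in Python as follows. The sketch covers only the chromatic case, where pitches are numbered and transposition is done by adding intervals modulo the number of steps in the octave; diatonic transposition within a scale is not covered. The function names and the `modulus` parameter are illustrative assumptions.

```python
def tone_matrix(row: list[int], modulus: int = 12) -> list[list[int]]:
    """Build a tone matrix from a tone row.

    The first column is the inversion of the row, and each horizontal
    line is the original row transposed to start on one tone of that
    inversion. Repeated tones in the row are allowed.
    """
    first = row[0]
    intervals = [(pitch - first) % modulus for pitch in row]
    inversion = [(first - interval) % modulus for interval in intervals]
    return [[(start + interval) % modulus for interval in intervals]
            for start in inversion]


def original_row(matrix: list[list[int]], index: int) -> list[int]:
    """The original row in the transposition found on line `index`."""
    return list(matrix[index])


def reversed_row(matrix: list[list[int]], index: int) -> list[int]:
    """The same line read backwards."""
    return matrix[index][::-1]


def inverted_row(matrix: list[list[int]], index: int) -> list[int]:
    """The inversion found by reading column `index` from top to bottom."""
    return [line[index] for line in matrix]


if __name__ == "__main__":
    for line in tone_matrix([0, 1, 3, 1, 7]):
        print(line)
```

Setting `modulus` to 16 would give the same construction for a 16-step division of the octave, although whether the matrices in this project were built that way is not stated.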

Recording

Countless recordings have taken place, each with 24 tracks per take, resulting in an almost unmanageable amount of material to work with. Typically, I have edited recordings in Ableton and Logic, structuring some sessions around very detailed parts of a composition, which are subsequently downmixed and imported into a large master session where the entire album has been assembled. This approach has resulted in a positive change in listening perspective during the process—something that I prioritize more and more.

Downmixing in the process and thereby closing creative decisions is important because it provides the necessary room for new ideas to arrive. As a listener, you don’t necessarily hear the many DX-7s used on the album, and that’s probably because the TF-1 modules are effective at mixing with each other to generate a unified sound.

You constantly hear many takes put together, and each take consists of 24 TF-1 modules, so perhaps over 100 TF-1 modules are constantly audible. This way of stacking sounds creates depth in the soundscape and offers an opportunity to design sound by combining one sound with another. It has been less necessary to use, for example, compressors, as the same effect can be achieved by stacking modules.

Complexity Versus Narrative

Throughout the process, I have composed and recorded many hours of music. As mentioned before, there has been a continuous adjustment of the instrument, focusing on special features at different times. Sometimes, I have chosen to go back to previous versions of the instrument to rediscover a particular control method.

With such a large instrument that can deliver so much information, it is easy to fall into the trap of creating very complex arrangements that constantly use all modules and move in all parameters, thereby risking the overall narrative. Therefore, a large part of the work on this album involved gathering the compositions, removing unnecessary information, and focusing on the overall narrative. The art of limitation has been important; often, many microtonal studies have ended up being represented in a single movement, passage, or timbre.

Despite the divided sections, I regard the music as a unified symphony with many movements. Since several of the compositions are sonically saturated, I intended that the individual movements be quite short. It is essential that there is a sense of both flow and lightness when listening to the album—a desire to start over when X12 ends.

Melody Versus Texture

In my previous album, Urodela (Escho, 2019), the focus was on microtonal analog sound clouds and transients that mutate over time. This served as the starting point for this album, but during the process, I found it interesting to integrate more traditional major/minor harmony and corresponding equal-tempered melodies as a contrast to the microtonal sounds. Working with traditional harmony/melody in an electronic context can be challenging, as melodic statements often risk becoming clichéd and old-fashioned.

Unintentionally, several pieces have taken on an Asian sound (or rather, my subjective experience of something Asian). Perhaps it has something to do with my initial studies of gamelan music combined with the DX-7’s Japanese origin, which includes countless presets that evoke koto-like sounds. In movement X9, the melodic part became so central that a soloist’s contribution felt obvious. I found guzheng musician Zhang Yu online. Yu is from China; the guzheng is also of Chinese origin. The guzheng consists of a number of strings strung over a resonating soundbox, and the strings are plucked with the right hand. Tuning can be adjusted, and each note can be treated differently by bending the strings with the left hand. The guzheng has a similar design to the Japanese koto, Korean gayageum, or Vietnamese đàn tranh.

We recorded the guzheng for several movements, but it was only in movement X9 that the blend of this acoustic and electronic instrumentation made sense. The recording took place in A-Studio at RMC, with Yu, who lives in Aarhus, bringing a special large concert guzheng for the occasion.

The movement involves wild glissandi and panning. All TF-1 modules move independently in pitch. To create contrast, primitive melodies have been added, providing a central point around which the glissandi move. This movement and most others are audio recordings made by playing the MIDI keyboard into the TX-2416 and recording the result into the computer. No MIDI quantization has been applied.

TF-1 modules are used here as percussion. Percussion sounds can sometimes be heard, like electronic Taiko drums. The brass sound comes from a guitar synthesizer tuned in alternative tunings. Different types of scales and temperaments are used in the underlying TF-1 sounds that support the drums. Several portamento parts can be heard as either percussive or more melodic instruments. A gamelan figure is present, dating back to the very first ideas of the project.

MIDI regions consist of music parts based on a tone matrix, as well as various scripting elements in Logic. Instead of being played from start to finish, the song position line “scratches” around the composition by dragging the SPL back and forth across several MIDI regions with the mouse. This creates a glitch effect when information is sent to the TX-2416, which is simultaneously running all sorts of randomized processes. At times, there is so much MIDI traffic that several control messages arrive with a certain delay. I have chosen to view this as another randomized step in the creative process.

Big sounds from PPG Wave. Added to this are synth bass and synth guitar. The chord progression is quite simple, serving as a contrast to the more chromatic parts on the album.

A more texture-based piece. The focus here has been on sound spectrum and dynamics, with a narrative carried by the individual sounds. It is electronic temple music with a focus on silence. Harmonic elements appear through the aforementioned allocation app, mixed with a myriad of pitch-drifting clouds. It is a meeting between equal-tempered harmonies and microtonal clouds.

A solo piece where all TF-1 modules use the same sound, but the pitch changes in different ways in the individual modules. Technically, this is a repetition of a simple motif, where the pitch variations create the final tonal material and shape the melodic development. Since the album is generally saturated with information, this simple piece was necessary. For the same reason, the album’s total playing time is only around 35 minutes. Several movements were written but later removed to ensure the album as a whole maintained the right progression and an appropriate balance of information.

A composition where the dogma was to use diatonic triads in root position, resulting in a medieval, almost prog rock sound. Simple arpeggio patterns with small rhythmic shifts. As mentioned before, it also made sense during the process to write more traditional melodies, serving as a contrast to the more texture-based parts.

A repetitive chord progression that, through an Allocate app, is constantly varied both in chords and sound. The different sounds in each TF-1 module appear in various ways in relation to transpositions, stacking sounds where the sound constantly changes depending on which layer of sounds is “on top.”

A more romantic piece. Again, more melodic—this time, the main motif is played on the guzheng by Zhang Yu. Notice how Yu constantly manages to interpret the simple theme by bending the notes in different ways. At the end of the piece, motifs are played by electronic kotos, creating a meeting between the acoustic guzheng and primitive digital imitations.

Nancarrow arranged for many TF-1 modules. The focus here has also been on integrating more percussive elements, such as a jazz drummer marking certain elements in the music. A large number of stacked modules contribute to heavy MIDI traffic. In a way, this version points toward Frank Zappa’s later works, especially those featuring the New England Digital Synclavier, like the album Jazz From Hell (1986).

Outro

Light repetitive elements with dark contrasts featuring different microtonal roles and timbres. Percussion meets a driving cluster sound, slowly building up to more equal-tempered harmonies. Finally, a brief revisit of swarms of TF-1 glissandi is replaced by a brighter outlook into the future.

Mix and master

As is the case for most people who work in DAWs, mixing took place alongside recording. Typically, the mix is organized in groups, as sessions often contain more than 100 tracks. This has made it possible to process the many tracks. Mixing took place in Ableton and Logic with the included plugins as well as purchased plugins such as UAD and FabFilter.

Since I have been responsible for both recording and mixing, it was important for the end result to involve someone else in the final stage, the album master. I therefore went to Stockholm and mastered the album together with Björn Engelmann. I brought the L/R mix and stems of each movement, the latter typically as 6 to 12 stereo tracks, each a downmix of part of the overall mix. We chose to master from stems, as this allows for adjustments in sub-areas of the mix. Processing was analog, using, among other things, modified NTP compressors and Massenburg EQ. The biggest challenge was the volume balance between the individual movements. Dissonant movements such as X1 can tolerate less volume than the more traditional melodic movements. We had to make several versions to achieve the desired result.

The project was released in album format on April 5, 2024.

Title: Det Foranderlige Instrument (The Ever-Changing Instrument)

Catalogue No.: ESC188

Label: Escho

Format: 250 copies, 180 g clear vinyl

Tracks: 12 tracks with a total playing time of 34:21 min

Featuring: Zhang Yu on guzheng in X9

Master: Björn Engelmann

Photography: Diana Velasco

Sleeve Design: Nis Bysted

Supported By: RMC and Statens Kunstfond

All music was composed and recorded by me; however, the track Study No. 11 was composed by Conlon Nancarrow.

Iannix - Graphic Notation

In my research on microtonal compositions, I also studied Iannis Xenakis. During a trip to Paris, the city was celebrating the 100th birthday of Xenakis. I visited an exhibition at the Cité de la Musique, where, among other things, the original score of Metastaseis was on display. This graphical score represents time on the X-axis and pitch on the Y-axis, with each instrument having its own system. No instruments play the same parts. In the score, the 46 strings move in and out of each other using glissandi.

Adjacent to the Xenakis exhibition is the music museum, which houses the unique UPIC computer (Unité Polyagogique Informatique CEMAMu) devised by Xenakis in 1977. Graphics corresponding to the axes of Metastaseis are drawn on a tablet-like screen, and the drawings are subsequently digitized and played back from a computer. The French Ministry of Culture has recently developed a free version of UPIC called Iannix. As part of my design of the controller for the TX-816, I integrated parameters that can be controlled via Iannix and designed various graphic elements for this purpose. Using Iannix, I can control all TF-1 modules via a graphical score. Here is a simple example of graphics: time is represented on the X-axis, and values between 0 and 1 on the Y-axis. Each line represents a TF-1 module, and the orange cursor indicates the position in time.

Use of Other Electronic Instruments

As I continued to immerse myself in FM synthesis over several months, it made musical sense to expand the sound repertoire with other instruments. Among these was the PPG Wave, a hybrid synthesizer that combines digital wavetables with analog circuits like filters. Having a wavetable means the synthesizer can move between a number of digital waveforms, which can produce some interesting sounds. The result is a digital synth that blends well into an FM arrangement.

Given my focus on pitch manipulation and glissandi, I also turned to stringed instruments for their ability to slide between notes, adding a further dimension to my sound exploration. I chose Roland’s guitar synthesizer, the GR-300, which converts each guitar string’s pitch into an analog oscillator. This instrument is probably best known from artists such as Pat Metheny (solo synth sound in Are You Going With Me?, 1982), King Crimson guitarists Adrian Belew and Robert Fripp (The Sheltering Sky, 1981), and Andy Summers of The Police (Secret Journey, 1981; Don’t Stand So Close To Me, 1980). Summers often tuned the synthesizer’s two built-in oscillators in fifths, resulting in harmonically rich chords (e.g., a C major chord also includes a G major, forming a Cmaj9). Similarly, I worked with different oscillator settings and strings tuned in various open tunings. Like the PPG Wave, the GR-300 added an extra dimension to the sound universe.

Rotation and Transposition

As mentioned, it is possible to adjust countless parameters for each TF-1 module, including transposition and pitch, allowing continuous transitions between different scales and temperaments—from diatonic equal-tempered scales to microtonal temperaments.

Along the way, I found it interesting to design and integrate another piece of Max software: an allocation app. The inspiration for the app came from the Oberheim synthesizer company. Oberheim started in the 1970s by designing the Synthesizer Expander Module (SEM), which could be connected to other synthesizers like the Minimoog, adding two extra oscillators. Later, they combined several SEM modules and added a keyboard. Depending on the number of modules, these instruments were called 2-voice, 4-voice, and so on. For example, on the Oberheim 4-Voice, you could play polyphonically and multitimbrally, with each voice assigned its own dedicated synth module. It was also possible to play in other modes: Unison, where all modules play simultaneously, or Rotate, where the modules activate sequentially. If you tuned the modules differently in Rotate mode and then played, say, three chords of three notes each, the notes would land on different modules each time the same three chords were repeated, producing a harmonic change. My Allocation app uses a similar rotation system.

Each TX-816 has up to three TF-1 modules that are always activated (Unison mode), thereby allowing specific harmonic tuning. The remaining five TF-1 modules operate in Rotate mode and are activated depending on the number of notes triggered from the MIDI keyboard. Each TF-1 module can have its own tuning, and the rotation can be customized: down, up, or up/down. The same settings can be applied across all three TX-816s using Global mode, or they can be adjusted independently. In conjunction with the control app, the Allocation app opens up many intriguing sonic possibilities.
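The combination of Unison and Rotate modes can be sketched in Python as below. This is a simplified model under stated assumptions rather than the Allocation app itself: it assumes that each incoming note triggers all Unison modules plus the next module in the rotation, and the class name, module numbering, and direction labels are hypothetical.

```python
class RotateAllocator:
    """Route each note to the Unison modules plus one rotating module."""

    def __init__(self, unison: list[int], rotating: list[int],
                 direction: str = "up") -> None:
        self.unison = list(unison)
        if direction == "up":
            self.pattern = list(rotating)
        elif direction == "down":
            self.pattern = list(reversed(rotating))
        else:
            # "up/down": run up, then back down without repeating the ends.
            self.pattern = list(rotating) + list(rotating[-2:0:-1])
        self.position = 0

    def note_on(self) -> list[int]:
        """Return the modules that should sound for the next note."""
        module = self.pattern[self.position % len(self.pattern)]
        self.position += 1
        return self.unison + [module]


if __name__ == "__main__":
    # Three Unison modules (0-2) and five rotating modules (3-7) in one rack.
    allocator = RotateAllocator([0, 1, 2], [3, 4, 5, 6, 7], "up")
    for repetition in range(2):
        # Three chords of three notes each: nine notes per repetition.
        print([allocator.note_on()[-1] for _ in range(9)])
```

With five rotating modules and nine notes per repetition, the second pass through the same chords starts on a different module than the first, so if the modules are tuned differently, the repetition is heard as a harmonic change.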

Conlon Nancarrow - Study No. 11

All tracks on the album are labeled X1, X2, etc., except for track 11, titled Study No. 11, composed by American composer Conlon Nancarrow (1912–1997) in the 1950s. Nancarrow, a Communist Party member who fought against Franco in the Spanish Civil War, emigrated to Mexico in 1940 to avoid persecution in the United States. Unable to find musicians capable of performing his complex music, he turned to writing music for the player piano. The compositions were made on rolls of paper using a punching machine to create holes. The paper rolls were then inserted into the player piano. Air is blown over the paper, and wherever there is a hole, the air passes through and a given command is activated.

All of Nancarrow’s compositions are called Studies. His first 20 studies adhered to a fixed rhythmic pulse, with 32nd notes as the fastest subdivision, making them adaptable to MIDI conversion. The subsequent studies used a modified punching machine that was no longer locked in subdivisions but completely free. This can be heard in Study No. 21, where the bass part starts slowly and gradually increases in tempo, while the treble part moves in the opposite direction, from fast to slow.

Since Nancarrow’s compositions were originally for piano, I found it interesting to arrange one of his studies for the TX-2416. My recording of Study No. 11 draws partly from Nancarrow’s original score and partly from transcription, as there are discrepancies between the original score and the original recording of Studies for Player Piano (1977). Presumably, Nancarrow adjusted the music when moving from score to paper roll. Moreover, I analyzed the tempo from the original recording and retained Nancarrow’s variable speed. Player pianos had a built-in stepless speed control, and presumably, the mechanics themselves also caused the tempo to change a bit as opposed to a fixed tempo in a computer. In addition, the music was originally recorded on tape, whereby speed differences also occur. The final result offers a new perspective on Study No. 11, situating it in the Black MIDI tradition, where high tempos and massive MIDI information are key elements.

Adam James Wilson, University of California, San Diego. “Automatic Improvisation: A Study in Human/Machine Collaboration,” 2009. This study explores artistic interaction with a mechanical fellow musician. A guitarist with MIDI triggers activates computer-controlled mechanical instruments, prompting pre-recorded musical sequences to form a duo-like performance.

Richard McReynolds, Cardiff University, Wales. PhD project: “An exploration of the influence of technology upon the composer’s process,” 2019. McReynolds explores chance-based operations between humans and machines. His work is based on a number of samples activated by the musician/composer via Wii controllers, with sample selection occurring through randomness, ensuring the music never sounds the same.

It has been a long process.

What has struck me most is how the project idea evolved naturally over time, beginning with gamelan music and moving on through polyrhythms, microtonality, instrument design, chance, and so on. There has always been a clear progression and direction in the ongoing works, which gradually shaped the project. In some cases, it is important to adhere to the initial idea, but here, with so many open questions about instrument design and other matters, it was essential to let the process and overall concept guide each other.

Interestingly, the music turned out to be less experimental than expected. The many moving elements and layers of TF-1 modules are not immediately noticeable, which I believe is a strength—it can be experienced on different levels. The instrument, with its many random parameters, took on a life of its own and dictated the musical direction. The project’s melodic focus was not part of my original intention; it emerged as a consequence of the project’s overall idea of dialogue and loss of control in the creative process.

Despite the extensive work described, I feel like I’m only just beginning with this new instrument. Many new questions and ideas arise: developing more moving parameters, finding more ways to control parameters via the EDO16 keyboard and pedals, and integrating rhythmic elements that will require fresh approaches to polyrhythms and polytempos.

Designing an instrument while composing continually forces you to ask fundamental questions in the creative process, pushing you to rediscover the core elements of music. It has been a deeply inspiring process. I hope this project, or even parts of it, can inspire others.

APJ, Copenhagen

October 2024