Binaural recording and playback is widely used for many reasons:

- It is simple and relatively cheap to record and reproduce.

- It can create an incredibly realistic impression when listening on headphones, the listener being able to perceive the reproduced sounds as occurring outside the head.

- It is usually very discreet, suitable for recording in public spaces or covertly recording in places where recording is prohibited.

- It is very good at capturing the feeling of movement through spaces, encoding all the movements of the recordist into the sound.

- Sounds recorded using other techniques, including purely electronic sounds, can be "binauralised" using software tools to position and move sounds in a virtual binaural space. This allows the composer to mix true binaural recordings with other sources in a convincing way.

There are, however, a number of disadvantages to the technique.

- Reproduction over loudspeakers often does not compare favourably with other stereo recording techniques (A-B, XY, MS, ORTF), which can give a greater sense of space and/or sharper location of sources.

- Everybody's ears are different, so there will always be a mismatch when listening to a recording made by somebody else. Some people, even those with good hearing in both ears, have problems "decoding" the sounds presented to them on headphones.

- As noted above, our brains integrate input from other senses to confirm or improve the accuracy of our perception of sound. When listening to a recording it is highly likely that the listener will experience conflicting information from other senses, their own movements and position within the listening space. This can cause the perception of sounds apparently happening at different positions around us and outside the headphones to blur or fail completely.

Beyond Binaural: Headtracking and ambisonics.

At the moment binaural reproduction is undergoing technological advancement driven by the economic interest in virtual reality and gaming. Some commercial headphones can sense the movement and positioning of the head of the listener and there are also a number of add-on "head trackers" available. These can allow a software application to process sound so that it appears to be located in the same place no matter how the listener moves their head. This would appear to remove one of the disadvantages of binaural technique - that of conflicting sensory cues. Indeed, in my experience, headtracking greatly improves binaural perception and enhances the sense of being immersed in the sonic space.

There is, however, a catch to this development. Up until now, it has not been possible to apply this reproduction technique to actual binaural recordings. The captured cues in a binaural recording are so personal and so interdependent that it is (currently) impossible to tease these out into an accurate 3D model of the sound occurring around the head. Such a model would be needed in order to synthesise a new binaural signal using Head-Related Transfer Functions (HRTFs), which could change by responding to the movements of the listener.

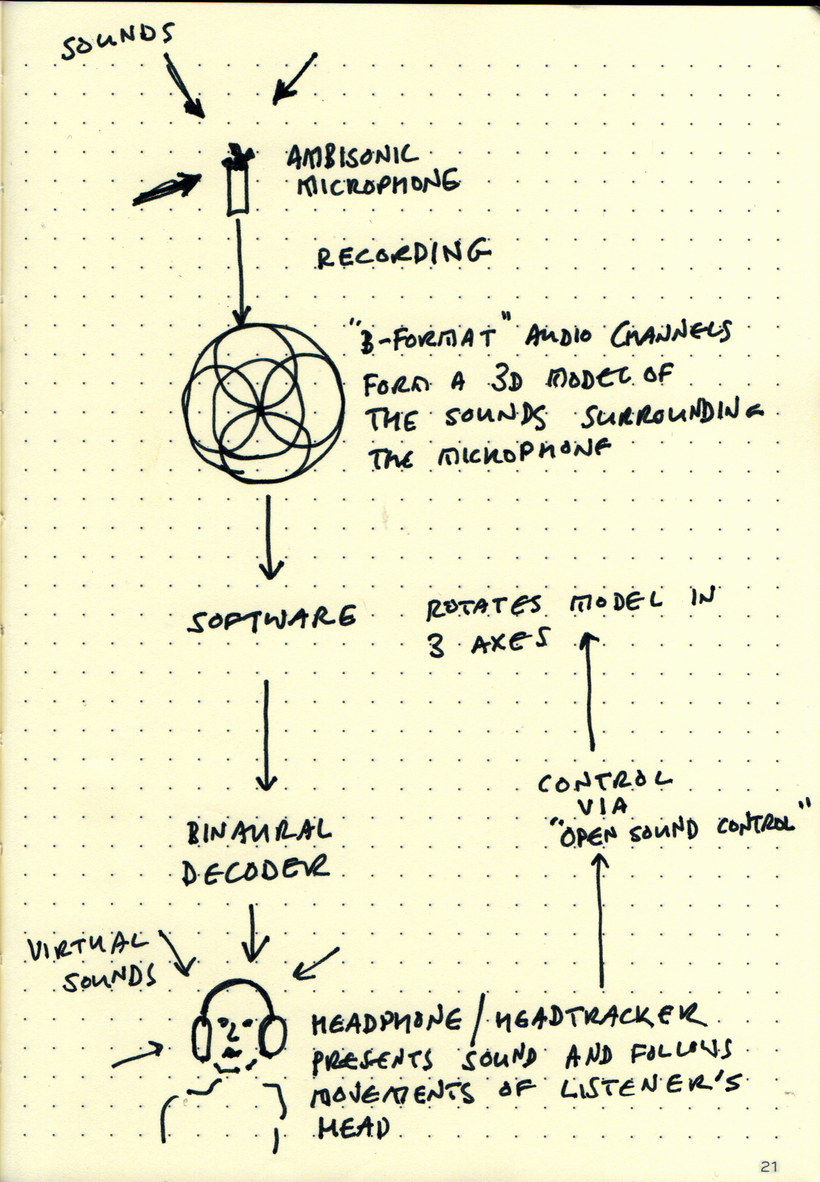

Where headtracking does work, though, is when (non-binaural) audio signals are positioned synthetically within a virtual space. One of the most effective ways of doing this is by using ambisonic recordings. These encode a 3D representation of the sounds occurring around a microphone into 4 or more audio channels. This, particularly with higher order ambisonics, approaches the above idea of a 3D model. This multi-channel recording can then be reproduced by decoding these signals for the loudspeaker system at hand or - binaurally, using HRTFs - for headphone listening. Because the spatiality and orientation of ambisonic signals can be manipulated very easily, it is simple to create an interactive "virtual reality" style of listening by adding a headtracking device into the decoding chain.
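To give an idea of why the orientation of an ambisonic signal is so easy to manipulate, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the method of any particular decoder: it assumes first-order ambisonics with four channels (W, X, Y, Z), X pointing forward, Y to the left and Z up, a W channel that carries the unscaled signal, and a head tracker that reports only yaw (turning left is positive). The function names `encode_horizontal` and `counter_rotate_yaw` are hypothetical.

```python
import math

def encode_horizontal(sample, azimuth_deg):
    """Encode one mono sample as first-order ambisonics (W, X, Y, Z).

    Assumed convention: azimuth 0 is straight ahead, positive angles
    are to the left, and the source lies in the horizontal plane.
    """
    phi = math.radians(azimuth_deg)
    w = sample                  # omnidirectional component
    x = sample * math.cos(phi)  # front-back component
    y = sample * math.sin(phi)  # left-right component
    z = 0.0                     # no height information
    return w, x, y, z

def counter_rotate_yaw(w, x, y, z, head_yaw_deg):
    """Rotate the sound field against the head movement.

    If the listener turns their head to the left by head_yaw_deg,
    every source must move to the right by the same angle so that
    it stays fixed in the surrounding space.
    """
    a = math.radians(head_yaw_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    x_rot = x * cos_a + y * sin_a
    y_rot = y * cos_a - x * sin_a
    # W (omni) and Z (height) are unaffected by a turn of the head.
    return w, x_rot, y_rot, z

# A source directly to the left; the listener then turns to face it.
w, x, y, z = encode_horizontal(1.0, 90.0)
w2, x2, y2, z2 = counter_rotate_yaw(w, x, y, z, 90.0)
```

After the rotation the source sits on the forward axis, as one would expect when turning to face a sound. The whole adjustment is two multiplications and an addition per channel, applied before the signal is decoded binaurally. This is what cannot be done with a finished binaural recording, where the direction is already baked into the two channels.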

This technique is of course not useful within a traditional stereo-playback environment but Echoes (the programmers of the Tracks app) have implemented a number of experimental tools to be able to use this. The playback of ambisonic audio is supported, as is the placing of "spatial" directional sound sources which can be "walked around" by the listener. These recent developments open up many new possibilities for the creators of audio walks.

Binaural Audio Walks:

While the use of headtracking and ambisonics requires more technological progress before it can be widely implemented, the combination of stereo audio walks using headphones and binaural recording already looks like a perfect match: cheap, portable, high quality, hyper-realistic recording and reproduction. The efficacy of the technique in capturing space, and movement through spaces, makes it highly suitable for reproducing environmental sound. Sources appear to be "outside" the headphones, allowing us to clearly follow, for instance, multiple voices moving around us.

On top of this, listening to reproduced sound while moving through a real (sound) environment takes it to another level - it appears (reported by many listeners - anecdotal) that a kind of synthesis or coupling occurs which melds the reproduced and the actual sound spaces together. In particular, sounds that we can reasonably expect to hear in the real environment are then believed to be "true" - because we know the place, or because we have been led to expect them, perhaps by the narrative of the piece. This also affords the composer of the piece a tool whereby reproduced sounds can sometimes be perceived as originating in the "real" world and sometimes as reproduced sound effect or music, depending on the context. This can be related to a different creative context - that of film sound. Michel Chion writes that:

"...in the cinema, causal listening is constantly manipulated by the audiovisual contract itself, especially through the phenomenon of synchresis."

I gladly steal Chion's concept of synchresis (a neologism from synchronism and synthesis), where sound and image are perceived as one entity. I consider it an appropriate description of the fusion of elements in the reproduced sonic world and elements in the actual (sonic, visual, tactile) world of an audio walk.

In the notes about my past audio walks there are many comments about the use of binaural sound, as it is a technique that I have used widely since the mid-1980s. This section builds on some of these observations.

- Playing back recordings in the same location:

A technique that I have used since the piece Rumours / Resonances is that of making binaural recordings of background sound while walking the actual path that is to be taken by the listener. This is then used as a basic layer of sound over which others are overlaid. I try not to capture sounds which conflict with the narrative, so sometimes part of the route has to be recorded again, at a different time of day for instance. Concentrating on ambiences - diffused sounds - rather than sharply localisable details or events means that the sound world presented on headphones remains believable despite conflicting sensory cues from the movement of the listener and fuses easily with the actual surroundings.

- Locating sounds in a particular place / direction:

An example of this is Hoor de Bomen where the listeners are instructed to stand at a particular point and look at the tree in front of them. I can then assume that the listener will not move very much, and that frontally presented sounds will always appear to be coming from the tree itself. By asking listeners to walk on a particular path, I can place sound virtually as if it comes from sources either side of them.

- Linking sound to the movement of the listener:

In a few walks the listener is encouraged to move in a particular way, allowing the sound to "follow" them. For example in track 9 of Rumours / Resonances the listeners are told to look at the centre of a fountain while walking clockwise around it. This is accompanied by a circular motion of sound in the opposite direction, adding to the circling effect and causing dizziness.

- Voice:

The binaural treatment of voices is a very effective way of playing with the relationship between the listener and the speaker(s). Mono sounds heard on headphones are perceived as originating inside the head. Voices close to the microphones appear to be very close to the head of the listener, etc. There are also various psychological effects: for instance the sound of whispering always appears closer than someone apparently raising their voice. In the audio walks of Janet Cardiff changing this distance is one of the key techniques used, along with voice recorded onto dictaphones and played back to represent an earlier or a remembered event. An additional technique that I have often used is the use of reverberation chosen to match the particular actual space which serves to blend the voice with the other, real sounds. It is possible to record the reverberation of indoor or outdoor spaces in order to simulate their acoustics in an "Impulse Response Reverb" which is often included in Digital Audio Workstations or DAWs.
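The principle behind such an impulse response reverb is convolution: every sample of the dry voice triggers a scaled copy of the recorded response of the space. The following is only a sketch of that principle in plain Python, assuming short lists of samples. The names `convolve`, `add_reverb` and `wet_mix` are my own for illustration, and a real DAW plug-in will use a much faster FFT-based method.

```python
def convolve(dry, impulse_response):
    """Direct convolution of two lists of samples."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, d in enumerate(dry):
        for j, h in enumerate(impulse_response):
            # Each dry sample adds a scaled, delayed copy of the response.
            out[i + j] += d * h
    return out

def add_reverb(dry, impulse_response, wet_mix=0.3):
    """Blend the dry signal with its reverberated version.

    wet_mix = 0.0 gives only the dry voice, 1.0 only the reverb.
    """
    wet = convolve(dry, impulse_response)
    # Pad the dry signal with silence so that the reverb tail can ring out.
    padded_dry = list(dry) + [0.0] * (len(wet) - len(dry))
    return [(1.0 - wet_mix) * d + wet_mix * w
            for d, w in zip(padded_dry, wet)]

# A single click through a toy "room" with one echo at half amplitude.
click = [1.0]
toy_room = [1.0, 0.5]
result = add_reverb(click, toy_room, wet_mix=0.5)
```

In practice the impulse response would be a recording of a clap, balloon burst or sine sweep made in the actual location, several seconds long, and the mix would be set by ear until the voice sits in the same space as the real sounds around the listener.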

I think that I can generalise by saying that the synchresis of recorded ambient sounds with the actual surroundings works very well when the location of the sounds is relatively undefined - think of a busy road in the distance, voices in a busy shopping street, air-conditioning drones in a quiet alley, birds in a park.

Synchresis of clearly localisable sources works when the narrative controls, or at least suggests, the position, direction and visual focus of attention of the listener. This is something that one must leave behind or re-think when working with a non-linear format, where the position and orientation of the listener might not be known.

All the above comments refer to the realistic-sounding reproduction of sounds that could be expected to be present in the real environment. However, in my opinion, the combination of virtual acoustic space and the real surroundings does not need to be accurate or even realistic to give a sense of believability. It only needs to be consistent, even if its internal logic is surreal or fantastic.

In the audio walks Ticket to Istanbul and Ticket to Amsterdam, Renate Zentschnig and I used the physical and visual aspects of one city as the backdrop for stories and sounds from the other city. Rather than synchresis, what happens here is more a sensation of a parallel world, of ghostly presences or a film that is playing in the imagination. A related work from cinema is Christian Marclay's Up and Out - a film superimposing the images from Antonioni's Blow-Up with the soundtrack of Brian De Palma's Blow Out. Not only are the films almost the same length, there are enough parallels between the plot lines and dramaturgy that both stories can be followed simultaneously.

An example from a piece of my own is the demonstration / procession that ends the walk Zuidas Symphony. The audience sits on a large square listening to a procession of characters that we have met during the narrative. Here the empty space becomes the screen on which the listener projects the action in their imagination.

Music in an audio walk that is not perceived as coming from a source in the "real world" can take the same role as a film soundtrack: it creates drive and rhythm and can affect the listener emotionally and physically but it remains non-diegetic, outside the narrative.

Sometimes though, particularly when the music becomes intense or when it is the only element present in the sound, it goes a step further: enabled by the "bubble" that headphones create, musical listening takes over completely and we become immersed in the sound. We become lost in music, disengaged. In a nod to Sister Sledge's famous hit, one could call this "sledgidity". Although immersion can be an important part of the affective experience it can also trap us, preventing engagement with the narrative and with the real world outside.

Listening with two ears - Binaural hearing, recording and rendering in audio walks.

"The world is in my head. My body is in the world" - Paul Auster

"Although the acoustic and psycho-acoustic principles on which it is based are mostly well known, our understanding of how humans create their sense of acoustic space based on just two binaural inputs is still fragmentary, and until recently based mainly on speech and music perception, not environmental experience in general where the variables are far more complex." Barry Truax

According to the literature there are three mechanisms whereby we locate sound with our ears: timing differences between the ears (Interaural Time Difference or ITD), amplitude differences between the ears (Interaural Intensity Difference or IID) and filtering (timbral) artifacts created by the shape of our ears and other parts of the body. It is important to realise that these three are not independent and not universal. Our brains (probably) form perceptions by combining the results of these mechanisms and integrating this data with input from other senses and memory.
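To give a sense of scale for the first of these cues, the timing difference can be estimated with a simple calculation. The sketch below uses Woodworth's spherical-head approximation, a textbook model that is not taken from this text: the head is treated as a rigid sphere and the source as distant. The head radius of 8.75 cm is a commonly quoted average and, like the function name `woodworth_itd`, is an assumption for illustration only. Real heads and ears differ, which is exactly the point made above.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at about 20 °C
HEAD_RADIUS = 0.0875    # metres; an assumed average, not a measured value

def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Approximate Interaural Time Difference in seconds.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side.
    The approximation is only meant for sources in the frontal
    half-plane, so angles outside -90..90 degrees are rejected.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must lie between -90 and 90 degrees")
    theta = math.radians(azimuth_deg)
    # Extra path to the far ear: an arc around the sphere (theta)
    # plus the straight-line part (sin theta), scaled by the radius.
    return (head_radius / c) * (theta + math.sin(theta))

itd_front = woodworth_itd(0)   # source straight ahead
itd_side = woodworth_itd(90)   # source directly to one side
```

With these assumed values, a sound from straight ahead arrives at both ears simultaneously, while a sound from directly to one side reaches the far ear roughly two thirds of a millisecond later. That the brain resolves differences far smaller than this gives some idea of how finely tuned, and how personal, these cues are.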

Of all the available stereo recording techniques, only binaural recording can capture and reproduce all the cues that allow the listener to accurately locate sound sources on headphones. It does this by using small microphones placed in the ears of the recordist, or of a "dummy head". The spacing of the microphones captures time differences. The presence of the head, creating a "sound shadow" between the microphones, leads to intensity differences. The shape of the ears, and the position of the microphones in relation to the shoulders and the environment (e.g. the ground, walls), allows the microphones to capture filtering, delays and phasing effects.

Episode where voice and other sounds are layered over a recording from the actual listening location.

This example from Multiplicity combines many techniques - voices recorded in real spaces, impulse response reverbs, binaural recordings and "synthetic" binaural electronic sounds.