2. Technologies of Presence

The artistic component of Embodied/Encoded required experimentation with digital tools that are linked in some way to physical or perceptual dimensions of sound. Such tools are allied in a technical sense with ‘physical presence’ described by Biocca, functioning to abstract information from physical contexts. Lee describes this mode of presence “as a consequence of sensory stimulation provided by an immersive system; the more advanced the system is, the higher the level of presence is.”22

In practice, these systems are compromised to widely varying degrees by the finitude or limits of their digital design. There are also digital techniques which move away from materiality toward an idiomatic address of the computer in the form of abstract processes.23 A preoccupation within this research is embodied meaning derived from idiosyncratic constellations of acoustic representation, digital disconnection, and corporeality.

2.1. Sound Field & Ambisonics

Guided in part by the well-developed spatial composition methodology of Natasha Barrett, the artistic components of Embodied/Encoded utilize ambisonic techniques for sound field recording and representation. Rozenn Nicol explains, “the sound field approach is based on a non-speaker-centric, physical representation of sound waves.”24

A sound field reproduction system should create a complex acoustic wave field over an extensive listening area characterized by precise time, frequency and spatial properties. High density loudspeaker arrays are typically required for this task—each loudspeaker acts as a secondary source, with the sum of all the loudspeaker contributions leading to the reconstruction of the target sound (the composed sound field) within the listening space:

The loudspeakers are considered secondary sources in the sense that they create only a synthetic copy of the target sound field, as opposed to a real or virtual sound source, which would have created a sound field. The virtual or real sound source is called the primary source for this reason.25

Ambisonics represents a sound field as an aggregate of spherical components (or spherical harmonics). The ambisonic order determines the spatial resolution—high orders yielding greater numbers of precise directional components within the sphere, thus higher fidelity reconstructions (an analogy can be made to the sampling rate of digital audio). The number of loudspeakers required for sound field reconstruction at a given ambisonic order is roughly equivalent to the number of spherical harmonics. At a minimum, four directional components are defined in first order ambisonics (FOA). Mathematical, technical, and historical premises of ambisonics are discussed in Nicol (2017), and Zotter and Frank (2019) among other sources.26
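The relation between ambisonic order and the number of components can be made concrete: for full-sphere (3D) ambisonics, order N uses (N + 1)² spherical harmonic components. The short Python sketch below is illustrative only; the function names are hypothetical and do not belong to any ambisonics library.

```python
import math


def ambisonic_channels(order: int) -> int:
    """Number of spherical harmonic components for full-sphere ambisonics."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def max_order_for_channels(channels: int) -> int:
    """Highest full-sphere ambisonic order that fits in a given channel count."""
    if channels < 1:
        raise ValueError("at least one channel is required")
    return math.isqrt(channels) - 1


# Print the component count for orders 1 through 7.
for order in range(1, 8):
    print(f"order {order}: {ambisonic_channels(order)} components")
```

Under this formula, first order yields 4 components, third order 16, and seventh order 64.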

One advantage of this format is its translatability. The de-coupling of the spatial composition from the loudspeaker configuration allows translation between different studio or concert setups. Furthermore, ambisonics can maximize the degree of immersion and visceral impact of high-density loudspeaker systems which are now common at electroacoustic and experimental music concerts, while being composed for practical reasons in a down-scaled university, commercial, or home studio.
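The de-coupling of encoding from loudspeaker layout can be sketched in a few lines. The Python example below is a minimal illustration under stated assumptions, not the workflow or decoder used in this research: it assumes SN3D-style first-order gains, an azimuth convention of 0° at the front with counter-clockwise angles positive, and a naive "virtual cardioid" decode in which each loudspeaker feed is a cardioid microphone pattern aimed at that loudspeaker. The function names and loudspeaker angles are hypothetical.

```python
import math


def encode_foa(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample into first-order components (W, X, Y, Z).

    Assumption: W carries the signal unscaled; X, Y, Z carry direction cosines.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample
    x = sample * math.cos(az) * math.cos(el)
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    return (w, x, y, z)


def decode_horizontal(components, speaker_azimuths_deg):
    """Naive horizontal decode: one virtual cardioid aimed at each loudspeaker."""
    w, x, y, _z = components  # height is ignored for a horizontal-only layout
    feeds = []
    for speaker_az in speaker_azimuths_deg:
        a = math.radians(speaker_az)
        feeds.append(0.5 * (w + x * math.cos(a) + y * math.sin(a)))
    return feeds


# Encode once, then decode the same components to two different layouts.
encoded = encode_foa(1.0, azimuth_deg=45.0)
quad = decode_horizontal(encoded, [45, 135, -135, -45])
octo = decode_horizontal(encoded, [0, 45, 90, 135, 180, -135, -90, -45])
```

The encoded components never change; only the list of loudspeaker angles passed to the decoder does. Practical decoders are considerably more sophisticated than this sketch.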

The relation of ambisonics to the XR concept of physical presence is crucial—the physical reconstruction of a sound field should mirror the acoustic experience of real life. Biocca reinforces that “from a design viewpoint, physical presence is critical in applications that must involve spatial cognition, the transfer of spatial models from the virtual environment to the physical environment.”27 However, technologies of presence make compromises due to the necessary limits of their digital design. Spatial resolution in ambisonics is frequency dependent with low ambisonic orders incurring notoriously destructive effects such as comb-filtering, blurry spatial resolution, and a small reconstruction area (or ‘sweet spot’). This has resulted in low incentive for many to adopt the techniques given the ubiquity of stereo imaging in commercial audio production, even with the abundance of ambisonics freeware currently available. Commercial surround systems such as 5.1 and 7.1 are also limited in spatial resolution.

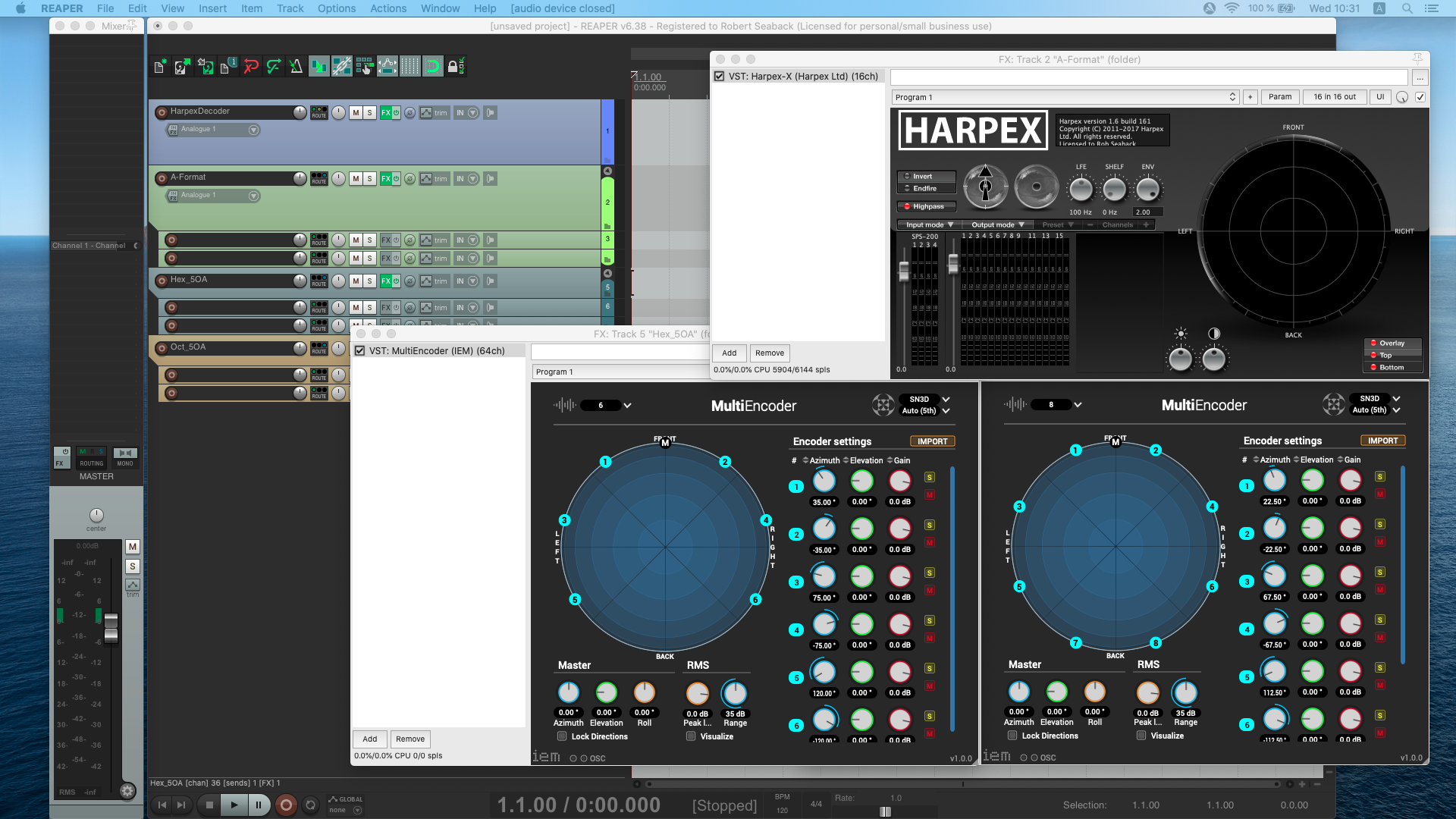

Despite these setbacks, ambisonic technologies have been implemented at the production (recording) and post-production (mixing/editing) stages of Embodied/Encoded research. In the latter case, Reaper was the chosen DAW. Reaper supports up to 64 channels per track, enabling ambisonic resolution up to the 7th order (7OA). Working primarily in quadraphonic or 7.1 channel studio spaces, I preserved high ambisonic resolution at the encoding stage while monitoring (decoding) in FOA, made possible by the VST plugin Harpex. Harpex is a specialized decoder which mitigates the destructive effects of low order ambisonics over standard loudspeaker configurations. The plugin uses psychoacoustic filters (active matrixing) which allow “far greater channel separation than what is possible with passive matrixing,” with minimal phasing and a larger ‘sweet spot.’28

At the production stage, I made a number of recordings with the SoundField SPS200, a tetrahedral microphone limited to FOA. Recordings were then ‘upsampled’ to 3OA resolution with Harpex. Final mixes of acousmatic works consist of 3OA stems derived from the SPS200 and higher order stems (5-6OA) from multichannel sound files synthesized as virtual sources.

2.2. Traces of Presence After Signal Decomposition

Integration of the FluCoMa suite of externals for the programs SuperCollider, Max, and Reaper was a crucial addition to my workflow at the early stages of Embodied/Encoded research. FluCoMa provides a means to access various perceptual dimensions of sound with accuracy and flexibility. The collection brings techniques for signal decomposition and machine learning to techno-fluent composers.29 In the context of this artistic research, FluCoMa connected the acoustic dimensions of presence to experimental processes in sound design and composition.

FluCoMa contains a variety of audio ‘slicers,’ which identify different types of onset characteristics in an audio stream and output the data for use in other processes or instruments. Slicers can be configured to identify and isolate perceptually salient sound events. I automated ‘cut & splice’ techniques at large scales, with the ability to address each slice independently. Almost all works of Embodied/Encoded implement onset analysis and slicing in some way, particularly in the form of algorithmic re-orderings of slices coded in SuperCollider. In Example 1 (heard in City Lines), a drum-kit sample30 is sliced and re-ordered according to a simple pattern specifying how to proceed through the slices; for example, step forward three slices then jump back two. The music takes on a different formal character without rupturing the performative continuity and realism of the original.
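A re-ordering pattern such as "step forward three slices then jump back two" can be expressed as a walk over slice indices. The Python sketch below is one possible reading of that description and is not the SuperCollider code used in the works; the function name, the starting index, and the stopping rule (stop when the walk leaves the range of available slices) are assumptions.

```python
def reorder_indices(num_slices, steps=(3, -2), start=0):
    """Walk through slice indices by cycling a step pattern.

    The walk ends as soon as the next index falls outside 0..num_slices-1.
    """
    if sum(steps) <= 0:
        raise ValueError("step pattern must advance overall, or the walk may never end")
    order = []
    index = start
    step_count = 0
    while 0 <= index < num_slices:
        order.append(index)
        index += steps[step_count % len(steps)]
        step_count += 1
    return order


# Eight slices, forward three then back two:
playback_order = reorder_indices(8)
print(playback_order)
```

Each index in the resulting list would select one onset-delimited slice for playback, so every slice is heard intact while the overall sequence is rearranged.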

Later experiments focused on the ordering of slices according to different perceptual criteria such as pitch, loudness, or duration information. Example 2 is a small etude in algorithmic composition in which two different improvisations from Ingar Zach are sliced according to onsets, re-ordered according to the duration of each slice starting from the shortest, and played back alternating the two sets. Because one set contains more slices than the other, the sets recycle at different points in time, creating shifting interleaved/hocket patterns or hybrid sound shapes.
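The alternation of two duration-sorted sets that recycle independently can be sketched as follows. This Python example is a hedged illustration rather than the code behind Example 2; the slice labels and durations are invented placeholder data, and the function name is hypothetical.

```python
def interleave_by_duration(set_a, set_b, total_events):
    """Alternate two slice sets, each sorted shortest-first and wrapped independently.

    Each slice is a (label, duration_in_seconds) pair.
    """
    a = sorted(set_a, key=lambda s: s[1])
    b = sorted(set_b, key=lambda s: s[1])
    sequence = []
    for n in range(total_events):
        source = a if n % 2 == 0 else b
        # Each set keeps its own position and wraps at its own length.
        sequence.append(source[(n // 2) % len(source)])
    return sequence


# Placeholder data: three slices in one set, two in the other.
set_a = [("a0", 0.40), ("a1", 0.10), ("a2", 0.25)]
set_b = [("b0", 0.30), ("b1", 0.05)]
labels = [label for label, _ in interleave_by_duration(set_a, set_b, 8)]
print(labels)
```

Because the sets have different lengths, the pairing between them drifts on each pass, which is the source of the shifting hocket patterns described above.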

It is more traditional to slice audio according to technical or logical specifications which are not predicated on analysis of the input. At a small enough time-scale, these techniques are described by Roads as microsound, encompassing a broad range of time and frequency-domain processes.31 One example used in Embodied/Encoded is granular synthesis, which was coded in SuperCollider according to two different methods underscoring the diverse ways ‘grains’ can be constructed and addressed. In the first, I used the GrainBuf UGen to construct granular streams from a soundfile with audio rate precision, but with limited access to individual grain parameters. This was used primarily for synchronous granular synthesis, in which grain frequency is periodic, imposing modulable formant peaks onto sources. Example 3.1 demonstrates synchronous granular as a kind of time-stretching, incrementally ‘scrubbing’ through the drum kit source at close to audio-rate with overlapping grains.
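The logic of synchronous granular "scrubbing" can be outlined as a schedule of grains: onsets are strictly periodic, while the read position in the source advances more slowly than real time. The Python sketch below computes such a schedule only; it does not synthesize audio and is not the GrainBuf-based implementation described above. The function name and all parameter values are assumptions chosen for illustration.

```python
def synchronous_grain_schedule(source_dur, output_dur, grain_rate, overlap):
    """Return (onset, read_position, grain_duration) tuples, all in seconds.

    grain_rate: grains per second (periodic, hence "synchronous").
    overlap: how many grains sound at once, which sets the grain duration.
    """
    period = 1.0 / grain_rate
    grain_dur = overlap * period
    stretch = output_dur / source_dur  # > 1 means time-stretching
    num_grains = int(round(output_dur * grain_rate))
    schedule = []
    for k in range(num_grains):
        onset = k * period
        read_pos = onset / stretch  # the read pointer creeps through the source
        schedule.append((onset, read_pos, grain_dur))
    return schedule


# A 2-second source stretched to 8 seconds with 50 grains per second, 4x overlap.
schedule = synchronous_grain_schedule(2.0, 8.0, 50.0, 4.0)
```

With a periodic grain rate in the audible range, the grain period itself contributes a pitched or formant-like quality, as noted above.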

In the second approach, I used the OffsetOut UGen to schedule a sample-based synth at audio rate. This allows for detailed control of individual grains such as time-varying envelopes on grain pitch or the mixture of different soundfiles to create cloud or hybrid textures. The former is known as glisson synthesis (Example 3.2). As Roads defines it, “in glisson synthesis, each particle or glisson has an independent frequency trajectory—an ascending or descending glissando.”32 This technique was explored extensively with results documented in the works Still Life (3.2), Lightness in Transit (3.3), and Skin and Siren (3.4). When based on logical operations rather than perceptual criteria, transformation processes tend to co-opt the identity of sources. Digital artifacts become evident instead of transparent.
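A single glisson can be sketched as a short windowed grain whose frequency moves along its own trajectory. The Python example below is a simplified illustration and differs from the approach described above in one important respect: it uses a sine oscillator rather than a sample-based source, so that the sketch stays self-contained. The function name, the linear trajectory, and the Hann envelope are assumptions.

```python
import math


def glisson(start_hz, end_hz, dur, sample_rate=48000):
    """Render one grain with a linear glissando and a Hann amplitude envelope."""
    n = int(round(dur * sample_rate))
    samples = []
    phase = 0.0
    for i in range(n):
        t = i / (n - 1) if n > 1 else 0.0  # normalized position, 0..1
        freq = start_hz + (end_hz - start_hz) * t  # this grain's own trajectory
        window = 0.5 - 0.5 * math.cos(2.0 * math.pi * t)
        samples.append(window * math.sin(phase))
        phase += 2.0 * math.pi * freq / sample_rate  # accumulate phase per sample
    return samples


# One ascending and one descending particle, 50 ms each.
rising = glisson(200.0, 800.0, 0.05)
falling = glisson(800.0, 200.0, 0.05)
```

A cloud texture would be built by scheduling many such grains, each with its own start frequency, end frequency, and duration.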

2.2.1. Reflexivity

Reflexivity is a concept embedded in certain methodologies of Embodied/Encoded: the compositional loop between listening and computing, the development of materials by performing/recording and editing/curating, and the intentional design of sound transformation processes. Hayles discusses reflexivity in the context of second-wave cybernetics, which began to understand the observer of a system as a fundamental component of the system itself:

Reflexivity is the movement whereby that which has been used to generate a system is made, through a changed perspective, to become part of the system it generates. … Reflexivity has subversive effects because it confuses and entangles the boundaries we impose on the world in order to make sense of that world. Reflexivity tends notoriously toward infinite regress.33

Reflexivity was later absorbed by the theory of autopoiesis, which evolved further into third wave cybernetics and the concept of emergence. The rich historical context of reflexivity is beyond the scope of this research.34 I wish to focus instead on the notion of boundary confusion and its destabilizing potential. Boundary confusion inspires my sound experiments which use reflexive processes, dissolving or making elastic the boundaries that contain recognizable forms. Techniques informed by this concept involve the superimposition of material and informational entities, or the modulation of one by the other, whereby data streams abstracted from a source are re-mapped to processing parameters. This creates couplings between the natural fluctuations of sources and arbitrary digital modifications. While predicated on the parameters of specific audio tools, straightforward examples include the mapping of spectral centroid data to resonant filter frequency and bandwidth, or pitch analysis data to sample playback rate. Specific musical examples are given in sections 3.3., 3.4., and 4.2.
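The re-mapping of analysis data to processing parameters can be sketched as a pair of small mapping functions. The Python example below is illustrative only: the centroid values are invented placeholder data, the input and output ranges are arbitrary, and the function names are hypothetical rather than taken from FluCoMa or SuperCollider.

```python
def map_exponential(value, in_lo, in_hi, out_lo, out_hi):
    """Map a value from a linear input range onto an exponential output range.

    Values outside the input range are clipped to its limits.
    """
    norm = (value - in_lo) / (in_hi - in_lo)
    norm = min(1.0, max(0.0, norm))
    return out_lo * (out_hi / out_lo) ** norm


def pitch_to_rate(detected_hz, reference_hz):
    """Playback rate that transposes a sample from reference_hz to detected_hz."""
    return detected_hz / reference_hz


# Placeholder analysis frames: spectral centroid in Hz.
centroids = [850.0, 1200.0, 4300.0, 2600.0]

# Each centroid value steers the center frequency of a resonant filter.
filter_freqs = [map_exponential(c, 200.0, 8000.0, 100.0, 5000.0) for c in centroids]

# A detected pitch of 330 Hz drives playback of a sample recorded at 220 Hz.
rate = pitch_to_rate(330.0, 220.0)
```

The coupling described above arises because the control stream follows the natural fluctuations of the source while the target parameter belongs to an unrelated digital process.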

2.2.2. Digital Disconnect

Hayles’s posthuman epistemology frames presence as an intersection with digital disembodiment. In her configuration, presence and absence interact with (while distinguished from) the parallel dialectic of pattern and randomness which defines information technologies and the crossover to digital-immateriality.

In prior research, I have employed Hayles’s framework in an attempt to identify an informational ontology for sound, using example compositions and techniques by G.M. Koenig, Herbert Brün, Iannis Xenakis, Yasunao Tone, and Ryoji Ikeda.35 These composers worked with different flavors of non-standard synthesis, in which “sound is specified in terms of basic digital processes rather than by the rules of acoustics or by traditional concepts of frequency, pitch, overtone structure, and the like.”36 I have referred to non-standard and related techniques as anacoustic (or soundless) modes of sound composition because of their immaterial origins. I think of anacoustic modes as a counterpart to technologies of presence. Even where they are not implemented technically in Embodied/Encoded, they offer a foundation for proposing digital archetypes for creative purposes.

Some general observations can be made about the disposition of sound materials generated with non-standard techniques, which link to a digital ontology characterized by replication, quantization, and stasis. At extreme states, they exhibit:

1. flat spectrum, or a relatively equal distribution of energy among partials, in contrast to the model of acoustic resonance, in which energy is clustered around vibrational modes closest to the fundamental frequency;

2. static morphologies of infinite duration from wavetable-lookup techniques, i.e. digital oscillators;

3. quantization at different time scales, from sample-level to sound event;

4. anechoic spatial profile, without reflections, reverberation, or resonance.
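The static, potentially endless morphology of a wavetable-lookup oscillator can be shown in a few lines. The Python sketch below is a minimal illustration, not code from the works discussed; the table size, the integer phase increment, and the function name are assumptions.

```python
import math

TABLE_SIZE = 64

# One cycle of a sine wave stored as a lookup table.
table = [math.sin(2.0 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def wavetable_osc(wavetable, increment, num_samples):
    """Non-interpolating table lookup with an integer phase increment."""
    out = []
    phase = 0
    for _ in range(num_samples):
        out.append(wavetable[phase % len(wavetable)])
        phase += increment
    return out


# An increment of 4 over a 64-point table gives a period of 16 samples.
signal = wavetable_osc(table, 4, 64)
```

Every 16-sample period of the output is an exact copy of the previous one, and the loop could run indefinitely without any change in the waveform.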

Re-stabilizing the digital disconnection of anacoustic modes, technologies of presence address sound through parametric models characterized by hierarchic levels of coding, carrying physically or perceptually meaningful variables into the necessary low-level functions.

22 Lee, “A Conceptual Model of Immersive Experience,” 6.

23 Robert Seaback, “Anacoustic Modes of Sound Construction and the Semiotics of Virtuality,” Organised Sound 25, no. 1 (2020): 4-14.

24 Rozenn Nicol, “Sound Field,” in Immersive Sound: The Art and Science of Binaural and Multi-Channel Audio, ed. Agnieszka Roginska and Paul Geluso (New York: Routledge, 2017), 276.

25 Ibid., 285.

26 Ibid.; Franz Zotter and Matthias Frank, Ambisonics: A Practical 3D Audio Theory for Recording, Studio Production, Sound Reinforcement, and Virtual Reality (Springer Open, 2019), https://doi.org/10.1007/978-3-030-17207-7.

27 Biocca, “The Cyborg’s Dilemma,” 17.

28 Harpex LTD, “Technology,” https://harpex.net/ (accessed May 4, 2022).

29 P.A. Tremblay et al., “The Fluid Corpus Manipulation Toolbox,” Zenodo (July 7, 2022), https://doi.org/10.5281/zenodo.6834643.

30 A stem-release titled “crow’s perch” from the band black midi.

31 Curtis Roads, Microsound (Cambridge: MIT Press, 2001).

32 Ibid., 121.

33 Hayles, How We Became Posthuman, 9.

34 Particularly Chapter 6 of How We Became Posthuman titled “The Second Wave of Cybernetics: From Reflexivity to Self-Organization.” Hayles, How We Became Posthuman, 131-159.

35 In addition to the previously cited article, see my PhD dissertation: Robert Seaback, “Anacoustic Modes of Sound Construction: Encoded (Im)Materiality in Synthesis” (PhD diss., University of Florida, 2018).

36 S. R. Holtzman, “An Automated Digital Sound Synthesis Instrument,” Computer Music Journal 3, no. 2 (1979): 53.