Control system version 2

In late 2020, I started redesigning and unifying the control system used to organize the movement material for the figures, sound, and lights in time. The redevelopment focused on making the OSC communication protocol consistent and on finding alternatives to Reaper as the primary tool for organizing (movement) material in time.

When researching possible approaches for the first version of the figures' control system, I studied existing solutions. Most control systems used in robotics are complex, geared towards industrial manufacturing robots, often hardware-specific, and always closed-loop.1

The actuators used for figures #1–#12 are implemented as open-loop systems, meaning that no sensors on the figures report the actuators' positions back to the controller (although some of these actuators are currently being redesigned with closed-loop control). A closed-loop system is a motion control system that uses sensors to continuously monitor an actuator's position and adjust it relative to a goal position. For the actuator systems used in several of the figures, such as the vacuum-driven ones, such a system would not make sense.
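To make the distinction concrete, the toy simulation below contrasts the two approaches. It is a sketch under stated assumptions, not a model of any actual figure: the function names, the proportional gain, and the notion of "slip" as a fixed fraction of each commanded step that the actuator fails to execute are all hypothetical. The open-loop function trusts that every commanded step was carried out; the closed-loop function reads a simulated position sensor and corrects the remaining error until it is within tolerance.

```python
"""Open-loop vs. closed-loop positioning: a toy simulation (hypothetical model)."""


def open_loop_move(target_steps: int, slip_per_step: float = 0.0) -> float:
    """Issue target_steps pulses and assume the actuator followed.

    The controller never measures the real position, so any slip
    (modelled as a fraction of each step that is lost) goes unnoticed.
    """
    actual = 0.0
    for _ in range(target_steps):
        actual += 1.0 - slip_per_step
    return actual


def closed_loop_move(target: float, gain: float = 0.5,
                     slip_per_step: float = 0.0,
                     tolerance: float = 0.01, max_iters: int = 1000) -> float:
    """Repeatedly compare a (simulated) sensor reading with the goal and correct the error.

    The simulated sensor reports `actual` exactly; a real sensor would add noise.
    """
    actual = 0.0
    for _ in range(max_iters):
        error = target - actual  # feedback: measured position vs. goal position
        if abs(error) <= tolerance:
            break
        # Proportional correction; the same slip affects every correction.
        actual += gain * error * (1.0 - slip_per_step)
    return actual


print(open_loop_move(100, slip_per_step=0.1))      # ends about 10% short of 100
print(closed_loop_move(100.0, slip_per_step=0.1))  # converges to within 0.01 of 100
```

The open-loop move silently ends short of its target, while the closed-loop move converges on it. The price of the closed loop is the sensor and the feedback path, which the vacuum-driven figures do not call for.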

For the communication protocol, OSC was and remains the obvious choice: it is scalable, its address patterns are human-readable, and it can be carried over various transport layers. For the figures in this project, I send OSC messages and OSC bundles2 via UDP over a wireless network and, in some instances, over SLIP-encoded3 serial connections.
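As an illustration of what travels over the network, here is a minimal encoder for a single OSC 1.0 message, assuming only int32, float32, and string arguments. OSC strings are null-terminated and padded to a multiple of four bytes, and numbers are big-endian. The address `/motor/1/goto` and the helper names are hypothetical, not the figures' actual address space, and a maintained OSC library would normally be preferable in production.

```python
import socket
import struct


def _osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires for strings."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') or string ('s') arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_pad(arg.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_pad(address.encode("ascii")) + _osc_pad(type_tags.encode("ascii")) + payload


def send_udp(packet: bytes, host: str, port: int) -> None:
    """Send one OSC packet as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

For example, `osc_message("/motor/1/goto", 2000)` produces a 24-byte packet: 16 bytes of padded address, 4 bytes of type tags (`,i`), and the 4-byte integer.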

Central control

Several OSC sequencers are available: software packages that organize OSC messages in time or make gradual changes over time.4 In this project, however, the interplay between the figures' activities and other media, such as audio, light, and human physical movement, is essential, particularly the integration of sound. A fundamental requirement was that it be relatively straightforward to compose movement material for the figures and sonic material in parallel.

Ease of interfacing with other stage production technologies, such as lighting desks and audio systems, was also important. Generally, when creating an installation or performance with the figures, the highest priority is being able to quickly adjust the timing, speed, or extent of a movement. The precise placement of physical activity in time is, to me, far more important than the precision of the action itself. Being able to try out combinations of different movement materials and sound quickly, and even to produce the sonic score in the same software used to control the physical movements, greatly facilitates experimentation. Surround sound processing and live processing of musicians' audio can be done in the same software that controls the figures' movements, allowing easy synchronization between elements.

Transport layer

Since the transport layer is wireless, I based the command protocol on simple go-to messages. UDP does not detect or recover dropped packets, and it does not guarantee that packets arrive in the order they were sent. In communication from the central control system to the animated figures, this is mainly addressed by sending commands as OSC bundles when the order of messages matters (for example, setting a motor's speed before triggering a movement). A bundle travels as a single UDP datagram, so the messages it contains arrive together, in the sequence they were packed, or not at all.
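A sketch of how such a bundle might be assembled, following the OSC 1.0 bundle layout: the string `#bundle`, a 64-bit time tag (the value 1 means "immediately"), then each message prefixed by its length as a 32-bit integer. The message encoder is repeated in compact form so the block stands alone; the addresses and values are hypothetical.

```python
import struct

IMMEDIATELY = 1  # OSC time tag meaning "execute immediately"


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes (OSC string rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Compact OSC 1.0 message encoder: ints become int32, everything else float32."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        else:
            tags += "f"
            payload += struct.pack(">f", float(arg))
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def osc_bundle(*messages: bytes, timetag: int = IMMEDIATELY) -> bytes:
    """Wrap encoded messages in one OSC bundle, each element prefixed by its size.

    Sent over UDP, the whole bundle is one datagram; a receiver that dispatches
    bundle elements in sequence applies them in the order listed here.
    """
    out = b"#bundle\x00" + struct.pack(">Q", timetag)
    for msg in messages:
        out += struct.pack(">i", len(msg)) + msg
    return out


# Hypothetical addresses: set the speed first, then trigger the go-to move.
packet = osc_bundle(
    osc_message("/motor/1/speed", 800),
    osc_message("/motor/1/goto", 2000),
)
```

Packing both commands into one bundle means the figure can never receive the go-to command without the speed setting that should precede it.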

The microcontrollers on each figure execute these commands themselves, which avoids transmitting a large stream of continuously updated position values. A system based on centralized motion control, continually updating each motor's desired position remotely, would demand much more of the transport layer. A second motivation for moving the trajectory calculations for each actuator to the microcontroller is that it makes it much easier to create standalone versions of the figures that do not require centralized control. Because the messages that trigger motion in the figures are so minimal, it will be quite simple to generate them on the onboard microcontroller.
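The acceleration, at-speed, and deceleration phases that a single go-to command implies could be computed onboard roughly as follows. This is a hypothetical sketch of a trapezoidal velocity profile, written in Python for readability rather than as firmware; the function name and units (steps, steps per second, steps per second squared) are assumptions, since the text does not specify how the figures' motion controllers compute their trajectories. When the distance is too short to reach full speed, the profile becomes triangular.

```python
import math


def trapezoid_position(t: float, distance: float, v_max: float, accel: float) -> float:
    """Position at time t of a move that accelerates, cruises, then decelerates.

    Falls back to a triangular profile when the distance is too short to reach
    v_max. Assumes distance, v_max and accel are all positive.
    """
    t_acc = v_max / accel             # time needed to reach cruise speed
    d_acc = 0.5 * accel * t_acc ** 2  # distance covered while accelerating
    if 2 * d_acc > distance:          # triangular case: v_max is never reached
        t_acc = math.sqrt(distance / accel)
        v_peak = accel * t_acc
        t_cruise = 0.0
    else:
        v_peak = v_max
        t_cruise = (distance - 2 * d_acc) / v_max
    d_acc = 0.5 * accel * t_acc ** 2
    total = 2 * t_acc + t_cruise

    if t <= 0:
        return 0.0
    if t < t_acc:                     # acceleration phase
        return 0.5 * accel * t ** 2
    if t < t_acc + t_cruise:          # at-speed phase
        return d_acc + v_peak * (t - t_acc)
    if t < total:                     # deceleration phase, mirror of acceleration
        remaining = total - t
        return distance - 0.5 * accel * remaining ** 2
    return distance                   # move complete
```

For a 1000-step move at 100 steps/s with an acceleration of 50 steps/s², the motor accelerates for 2 s, cruises for 8 s, and decelerates for 2 s. Only the three parameters need to cross the network; every intermediate position is computed locally.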

However, since UDP has no built-in checks against lost packets, I am currently investigating TCP as an alternative transport layer. Most messages sent to the figures are "send and forget", with motion control (acceleration, at-speed movement, and deceleration) generally handled on the motion controllers. In most cases, therefore, guaranteed delivery matters more than latency: it is better for a message to arrive slightly later than to be lost, which is the risk UDP accepts in exchange for speed. This makes the greater reliability of TCP an attractive choice for further development.
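Unlike UDP, TCP delivers a continuous byte stream rather than discrete datagrams, so OSC packets sent over it need framing to mark where one packet ends and the next begins. OSC 1.1 recommends SLIP (RFC 1055) for stream-based transports, the same encoding already used on the serial links. A minimal encoder and incremental decoder might look like this; the class and function names are hypothetical.

```python
from typing import List

END, ESC, ESC_END, ESC_ESC = 0xC0, 0xDB, 0xDC, 0xDD  # SLIP special bytes (RFC 1055)


def slip_encode(packet: bytes) -> bytes:
    """Frame one packet for a byte stream, with END bytes on both sides."""
    out = bytearray([END])
    for b in packet:
        if b == END:
            out += bytes([ESC, ESC_END])  # escape a literal END byte
        elif b == ESC:
            out += bytes([ESC, ESC_ESC])  # escape a literal ESC byte
        else:
            out.append(b)
    out.append(END)
    return bytes(out)


class SlipDecoder:
    """Incrementally split a byte stream (e.g. from TCP recv()) into packets."""

    def __init__(self) -> None:
        self._buf = bytearray()
        self._escaped = False

    def feed(self, data: bytes) -> List[bytes]:
        """Consume a chunk of bytes and return any packets completed by it."""
        packets = []
        for b in data:
            if self._escaped:
                # Undo the escaping; pass unexpected bytes through unchanged.
                self._buf.append(END if b == ESC_END else ESC if b == ESC_ESC else b)
                self._escaped = False
            elif b == ESC:
                self._escaped = True
            elif b == END:
                if self._buf:  # ignore empty frames between consecutive END bytes
                    packets.append(bytes(self._buf))
                    self._buf.clear()
            else:
                self._buf.append(b)
        return packets
```

Because `feed` keeps its state between calls, it copes with packets split across several `recv()` calls, which TCP is free to produce.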

Control system v.1

The first version of the control system used Reaper as the primary tool for organizing movement and other materials in time. Reaper has built-in OSC support, but it does not allow freely defined custom OSC messages. I therefore developed translation software in Max/MSP that takes Reaper's OSC output and scales its 0–1 values into OSC commands the figures respond to.
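The scaling step itself is a linear mapping. The v1 translation was implemented in Max/MSP; the Python sketch below only illustrates the arithmetic, and the parameter ranges (0–4000 steps for position, 50–1200 steps per second for speed) and addresses are hypothetical examples, not the figures' actual limits.

```python
# Hypothetical parameter ranges for one figure; real limits depend on the hardware.
RANGES = {
    "/motor/1/goto": (0, 4000),    # target position, steps
    "/motor/1/speed": (50, 1200),  # speed, steps per second
}


def scale_normalized(value: float, out_min: float, out_max: float) -> float:
    """Map a 0-1 automation value linearly onto [out_min, out_max], clamping the input."""
    value = min(max(value, 0.0), 1.0)
    return out_min + value * (out_max - out_min)


def translate(address: str, value: float) -> tuple:
    """Turn one normalized DAW value into an (address, integer argument) pair."""
    lo, hi = RANGES[address]
    return address, round(scale_normalized(value, lo, hi))


print(translate("/motor/1/goto", 0.5))   # ('/motor/1/goto', 2000)
print(translate("/motor/1/speed", 1.2))  # clamped: ('/motor/1/speed', 1200)
```

Clamping guards against automation values slightly outside 0–1 producing commands beyond a motor's range.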

Synchronization between audio and movement is central to the transmedial association between sound and movement, and being able to quickly match audio and movement material aids this process. Accepting a trade-off in configurability in return for the robustness and ease of editing both audio and movement material that a streamlined DAW offers therefore seemed like the best choice, even though it depended on a "workaround" to communicate with the figures.

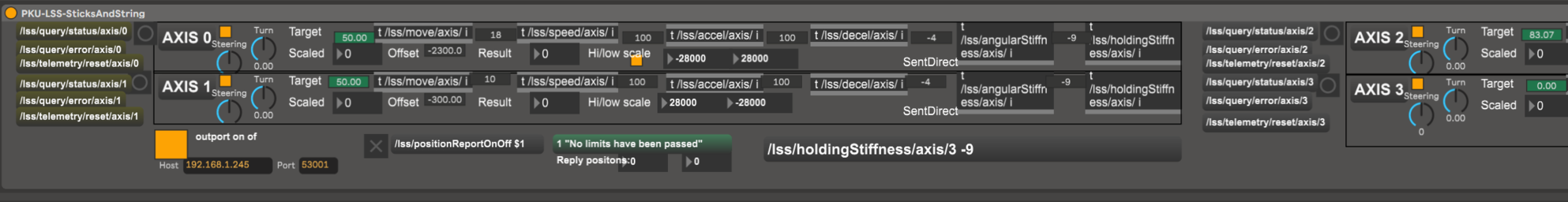

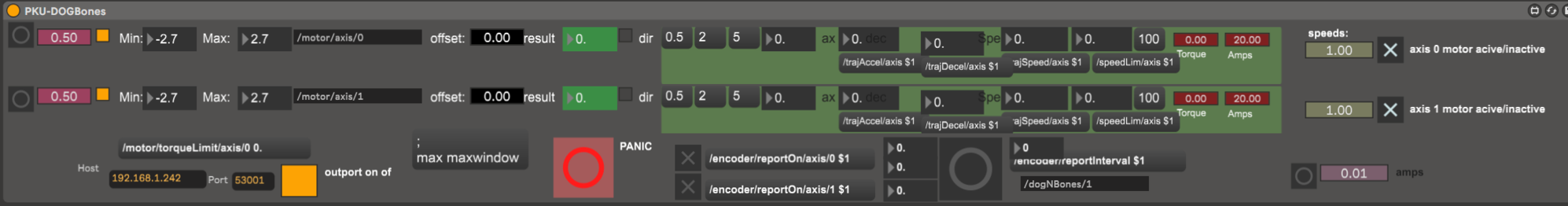

Control system v.2

Version two of the control system runs as a set of Max for Live patches in Ableton Live. The decision to redesign the control system came from a sense that v1 was unnecessarily complex. The network infrastructure has been simplified by assigning static IP addresses to all figures, which makes automatic IP assignment unnecessary. The rationale is that in technically demanding productions such as those in this project, where I manage the technical aspects myself, keeping things simple is paramount. Since the communication protocol controlling the figures is relatively minimal, it is quite feasible to create a matching Max for Live patch to communicate with each figure.

Using Ableton Live allows the entire show control system to run in one software package. In addition, the non-linear nature of Ableton Live's Session view facilitates quick experimentation with movement material across the figures. The downside is that Ableton Live's support for surround sound production and processing is limited, though not absent. I have therefore created a set of multichannel sound file players that run in Ableton Live. During development, I can also synchronize Ableton Live and Reaper via timecode, creating audio material alongside movement material while using Reaper's superior surround sound capabilities.
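With static addresses, routing a command to a figure reduces to a fixed lookup table. A minimal sketch, assuming one UDP port per figure; the figure names, IP addresses, and port are placeholders rather than the project's actual network configuration, and in the production setup this role is played by the per-figure Max for Live patches.

```python
import socket
from typing import Dict, Optional, Tuple

# Hypothetical static address table: one fixed (IP, port) pair per figure.
FIGURES: Dict[str, Tuple[str, int]] = {
    "figure01": ("192.168.1.101", 8000),
    "figure02": ("192.168.1.102", 8000),
}


def send_to_figure(name: str, packet: bytes,
                   table: Dict[str, Tuple[str, int]] = FIGURES,
                   sock: Optional[socket.socket] = None) -> None:
    """Look up a figure's static address and send one OSC packet to it over UDP.

    An unknown name raises KeyError, so configuration mistakes surface
    immediately instead of sending to the wrong device.
    """
    host, port = table[name]
    if sock is None:
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
            s.sendto(packet, (host, port))
    else:
        sock.sendto(packet, (host, port))
```

Because the table never changes during a show, there is no discovery step that can fail, which matches the priority on simplicity described above.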