Within my practice, artistic works are initiated through the development of a new electronic music instrument. The point of departure was the idea of making an instrument that was very simple, involving a minimal number of elements, while also exhibiting a wide and complex range of chaotic sonic behaviors. One source of inspiration came from thinking about the three-body problem in physics and mechanics.

“[...] the modern concept of chaos was found by Poincare in his study of the three-body problem, almost 100 years ago. Poincare himself predicted that a long time would pass, before this kind of concept would be relevant for actual computations to be used in dynamical astronomy. Whether we like it or not, this time is now.”

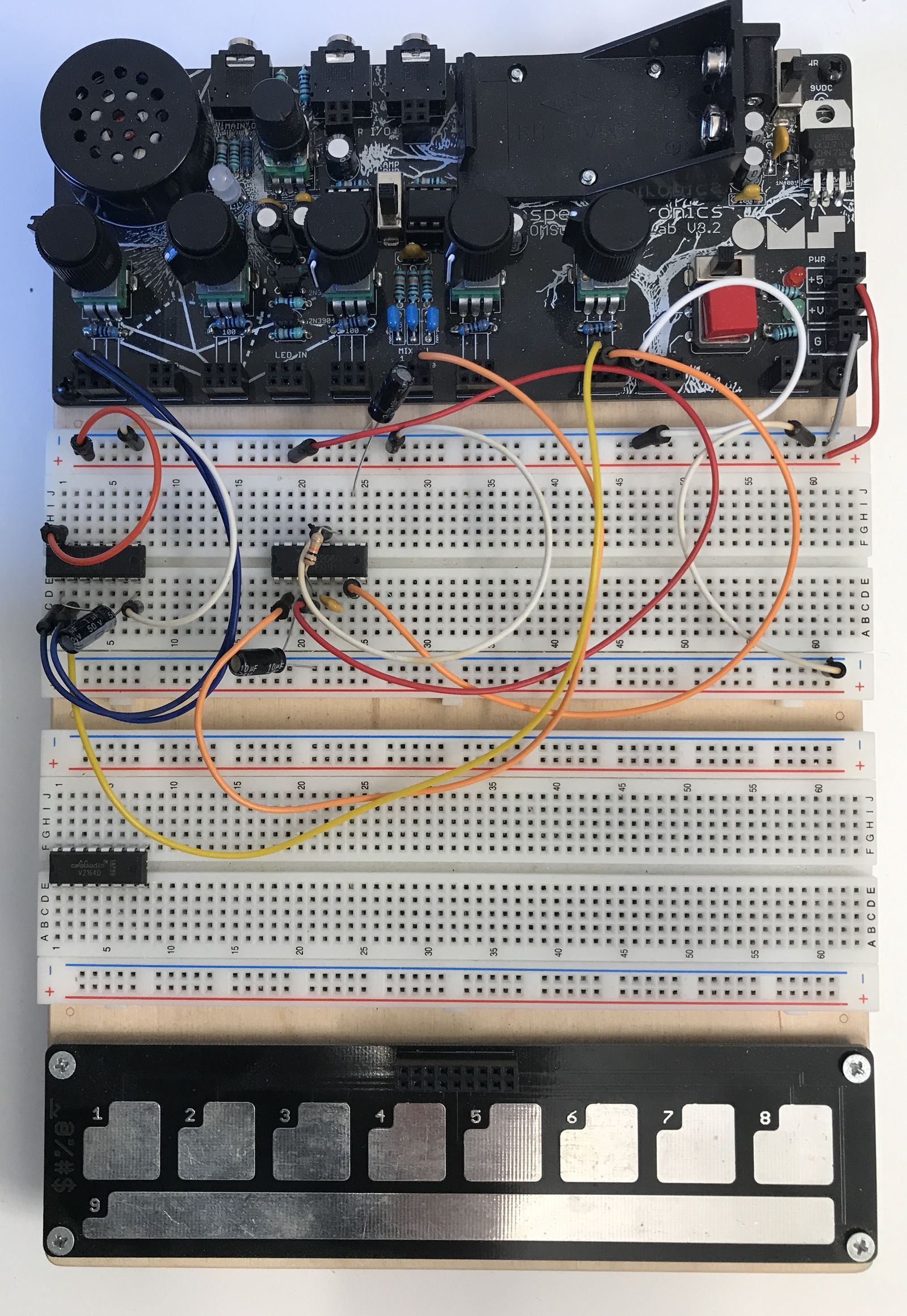

There is something entirely unintuitive, yet captivating, about the proposition that chaotic behaviors emerge when only three point-masses are trying to lock into orbit with one another. Here, complexity arises from a humble starting point. This notion pointed me to the idea of developing a synthesizer based on only three oscillators, each having an effect on the operation of the others. By keeping the elements as simple as possible, it became feasible to realize this concept using only analog electronic circuits. Up until this point, my experience with the design of analog electronics was mostly related to circuit bending and the occasional work of connecting sensors to microcontrollers such as the Arduino. However, a few months before starting the research, my interest in designing analog circuits was ignited through the purchase of an OMSynth, a circuit development and performance interface created by the artist and instrument designer Peter Edwards. The OMSynth consists of two rows of breadboards, and a circuit board on top that makes it much easier to power prototype circuits and to listen in on the sonic output of the electronic voltages.

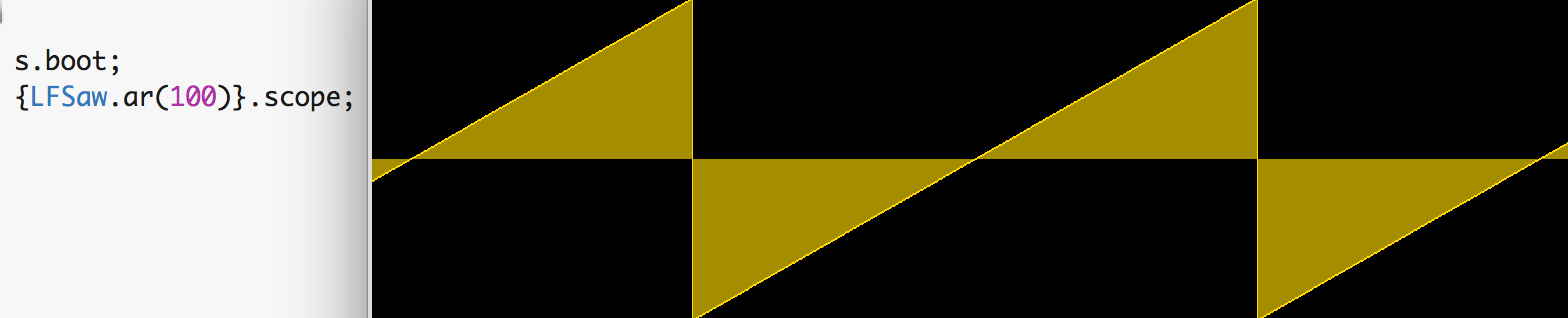

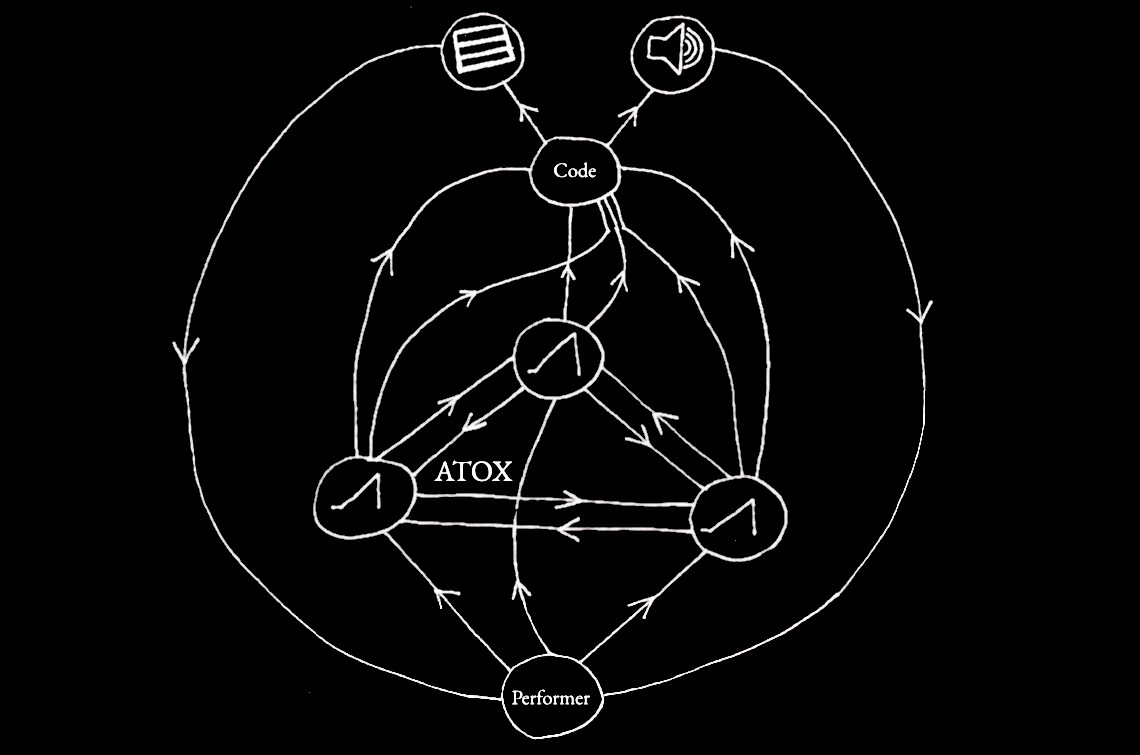

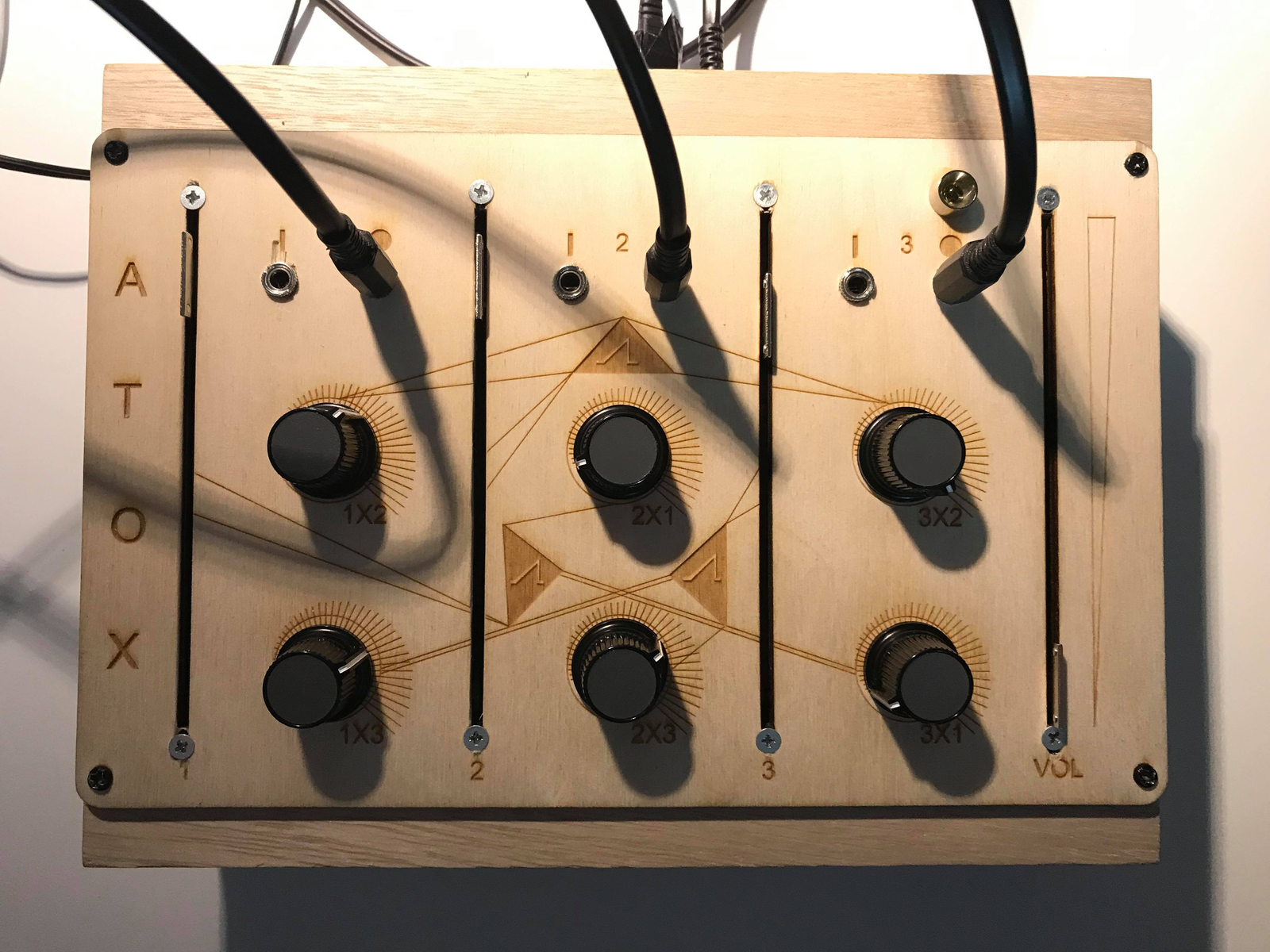

The linear ramp between the lowest and highest values increases the complexity that can be achieved when the signal is used to modulate the frequency of other oscillators, or of the oscillator itself. The ATOX synthesizer consists of three of these sawtooth oscillators. The output of each oscillator is split into several copies that are attenuated and used as modulation signals, affecting the frequencies of the other oscillators. By changing the positions of the attenuators, meshworks of interconnected signal streams are established, and the sonic behaviors follow suit.
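The cross-modulation described above can be approximated digitally. The following is a minimal Python sketch, not a model of the actual analog circuit: it assumes ideal phase-accumulator sawtooth oscillators and linear frequency modulation, and the function name, the modulation depths (in Hz), and the base frequencies are all hypothetical values chosen for illustration.

```python
def simulate_cross_modulated_saws(base_freqs, mod_depths,
                                  sample_rate=48000, n_samples=48000):
    """Simulate sawtooth oscillators that modulate each other's frequency.

    mod_depths[i][j] plays the role of an attenuator (an assumption of this
    sketch): it sets by how many Hz the output of oscillator j shifts the
    frequency of oscillator i. Diagonal entries give self-modulation.
    """
    n = len(base_freqs)
    phases = [0.0] * n                  # each phase ramps from 0.0 towards 1.0
    outputs = [[] for _ in range(n)]
    for _ in range(n_samples):
        # Snapshot of all sawtooth outputs, ramping linearly from -1 to +1.
        saws = [2.0 * p - 1.0 for p in phases]
        for i in range(n):
            outputs[i].append(saws[i])
            # Frequency = base frequency + attenuated copies of every output.
            freq = base_freqs[i] + sum(mod_depths[i][j] * saws[j]
                                       for j in range(n))
            freq = max(freq, 0.0)       # keep the ramp moving forward
            # Advance the ramp; wrapping at 1.0 produces the sudden drop.
            phases[i] = (phases[i] + freq / sample_rate) % 1.0
    return outputs


# Hypothetical patch: each oscillator modulates the next one in a ring.
patch = [[0.0, 40.0, 0.0],
         [0.0, 0.0, 40.0],
         [40.0, 0.0, 0.0]]
signals = simulate_cross_modulated_saws([110.0, 173.0, 261.0], patch,
                                        n_samples=4800)
```

Because every oscillator's next step depends on the previous outputs of all three, changing a single entry of `mod_depths` alters the trajectory of the whole system, which is the digital counterpart of moving one attenuator.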

When the circuit was working on the OMSynth, it was soldered onto a prototype board and installed into an enclosure with a laser-cut faceplate indicating which attenuator was connected to which oscillator. Once the synthesizer was built and operational, time was devoted to exploring its sonic behaviors. During these explorations an important realization took hold. The sonic behaviors emerging from the instrument demonstrated the type of chaotic qualities that lie at the heart of the research. Melodies, rhythms, drones, and textures emanate from the speakers as the cross-modulating waveforms produce a number of sonorous behaviors. However, the timbral qualities of the sawtooth waveform remain overly present, despite the various ways in which these waveforms are being modulated. The sonic vocabulary at this point is still rather nasal, and the question arises whether this will become a defining signature of the instrument. At these stages in the development of the instruments, such conflicts between functionality and aesthetic appreciation are to be expected. Although there is a conceptual desire to strive towards an instrument with a minimal number of elements, leaving things as they were was not a viable option, as the sonic limitations also narrowed the compositional and performative possibility space. Early experiments with adding a hardware filter effect yielded an improved sonic palette. This version of the setup was used during a concert organized by Prøverommet at "Landmark" in Bergen, alongside the South Korean musician Sunju Park (gayageum, also romanized as kayagum), as well as during another concert alongside Roald van Dillewijn (electronics) and Gareth Davis (bass clarinet), organized by Moving Furniture Records at "Cafe De Ruimte" in Amsterdam.

These tryout concerts are a vital resource during the development of the instruments. When prototypes are played in concert environments, the added dimension of collaborators and audiences becomes a useful lens through which the instrument can be examined. In studio situations, when instruments are explored, there may be stretches of time during which the sonic material is lackluster, or to be more precise, lacking the kinds of sonic tensions that are found near the edges of chaotic tipping points. The sonic behaviors are either too stable, displaying very little variation, or too unstable, with no patterns to be found at all. My aim is to create instruments that balance between these extremes, that are always unstable, yet always full of patterns, rhythms, and textures. Performing with the instruments in concert settings introduces a sense of consequence. One important challenge presents itself: how to navigate between different positions that are each located on the fragile edge of chaos? In the best-case scenario, sonic behaviors collapse and rebalance close to the edge of another tipping point. The tension is maintained, and only a minimum amount of time is spent searching for another tipping point.

There is one more aspect to the setup that needs some elaboration. Visualizations have been added, illustrating, or perhaps illuminating, the inner workings of the ATOX synthesizer. These visuals consist of three horizontal bars that respond to the sounds of each oscillator. The loudness of the sound is mapped to luminosity, and timbral qualities influence the color and texture of each bar. The visuals have been developed in Vuo.

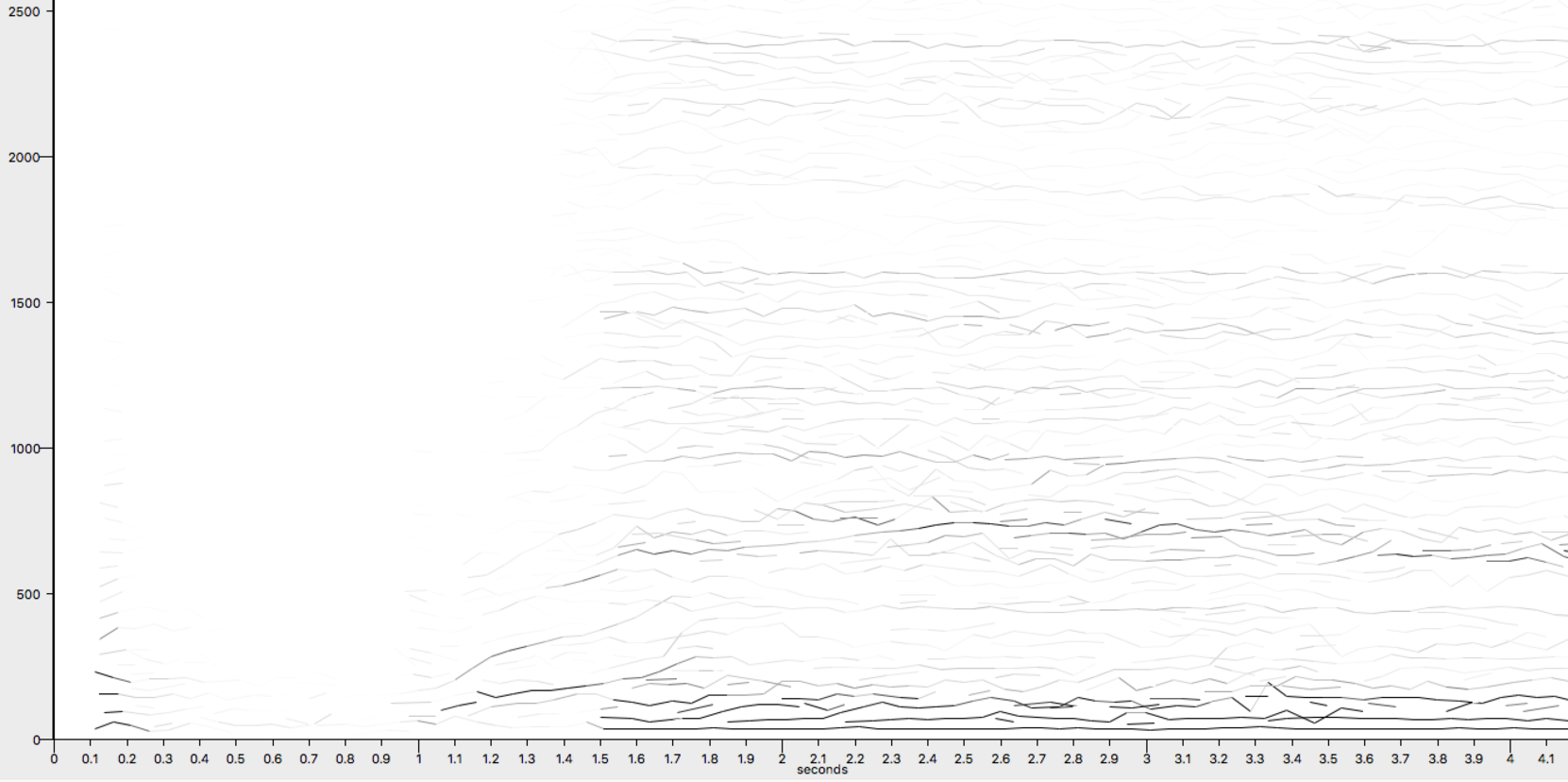

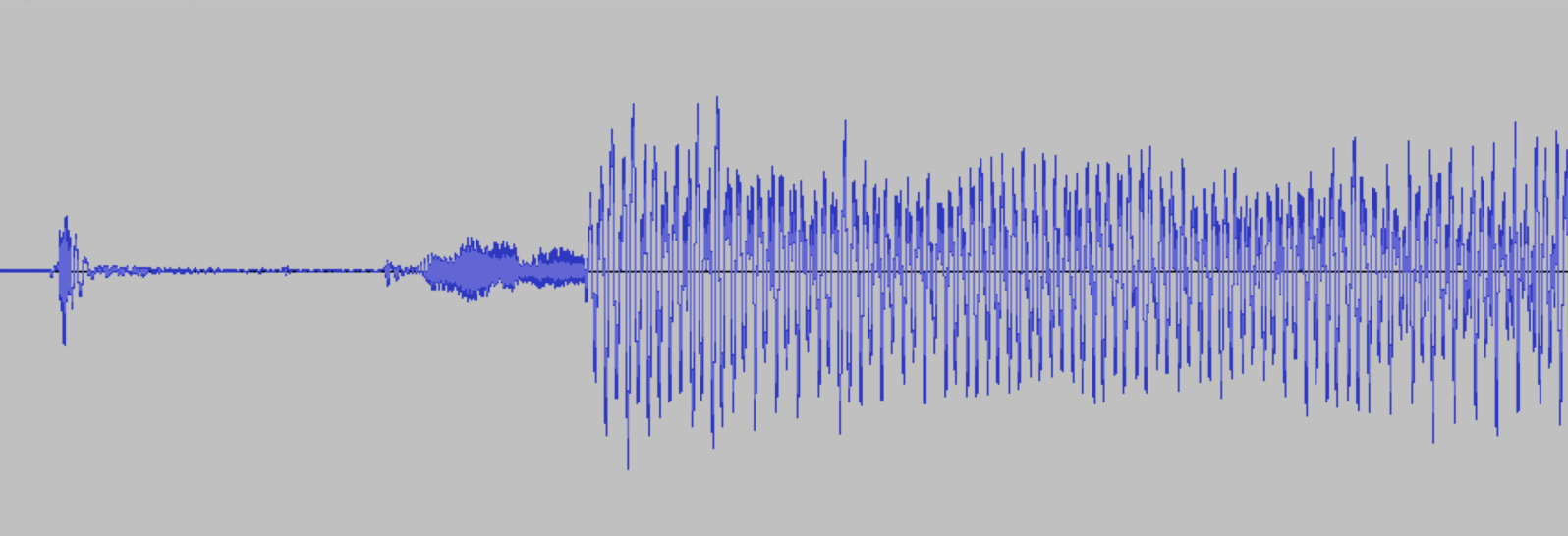

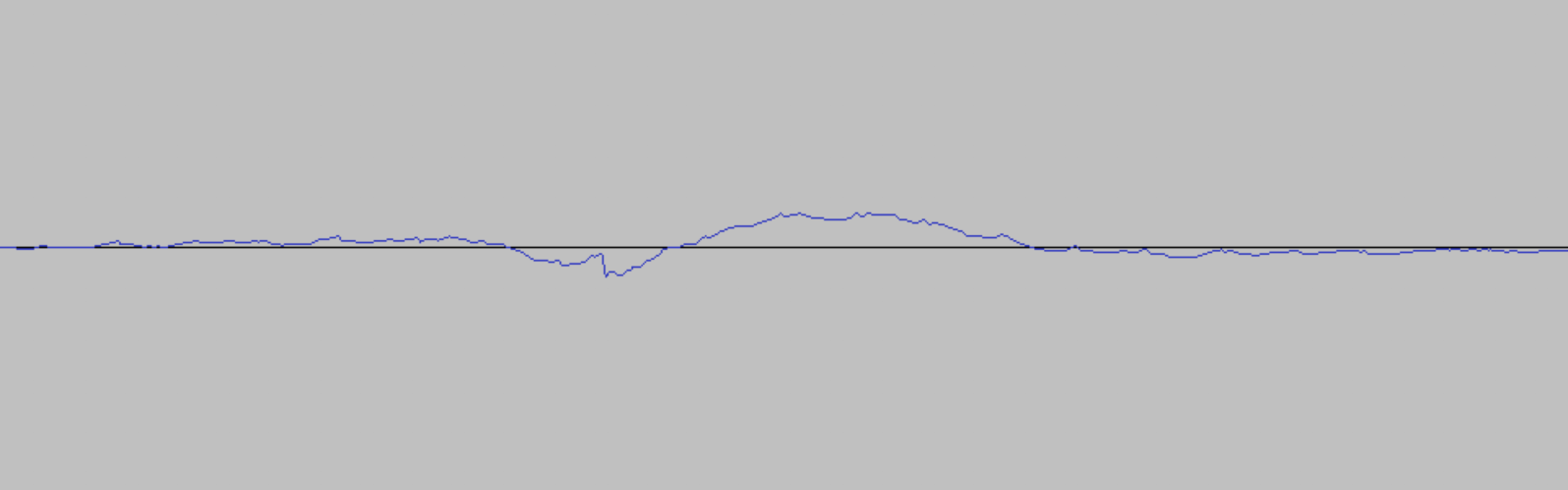

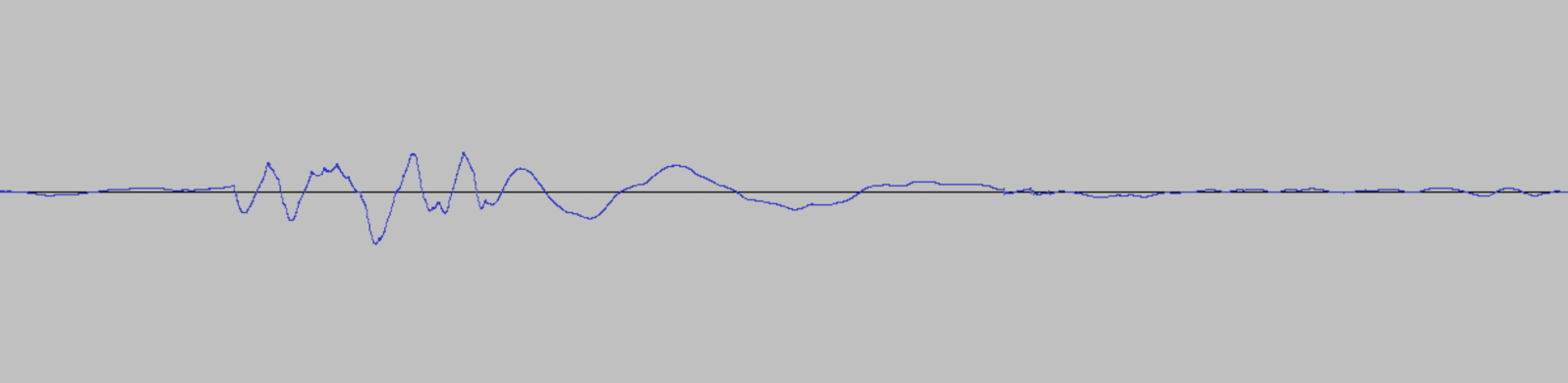

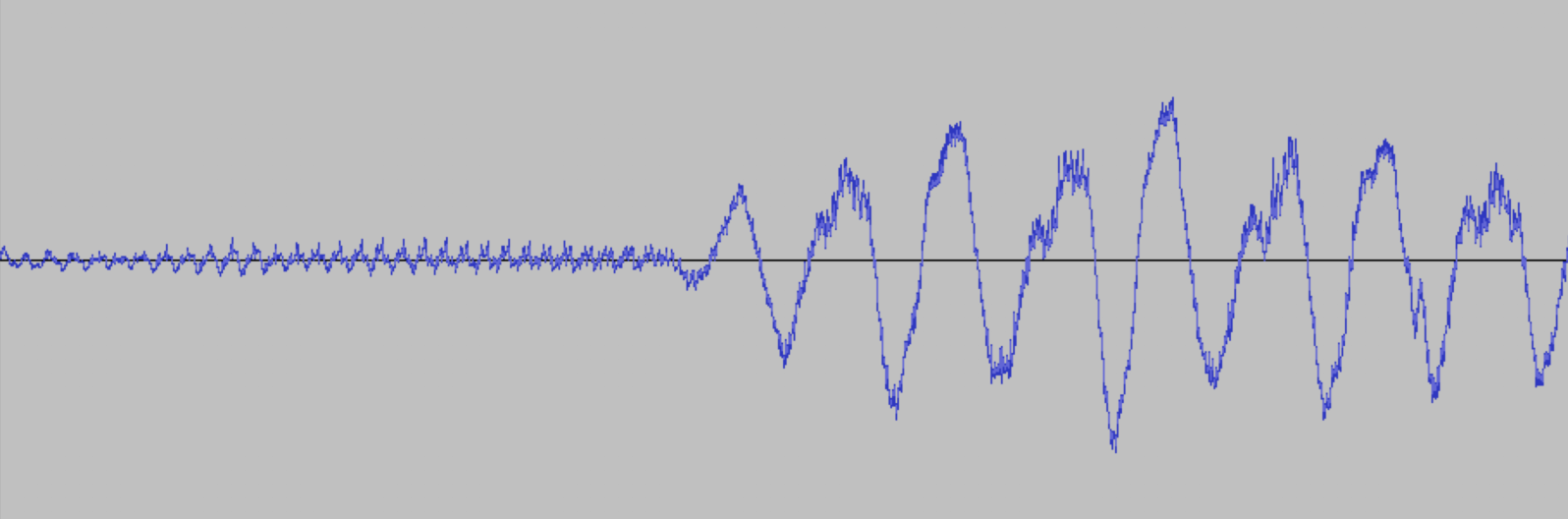

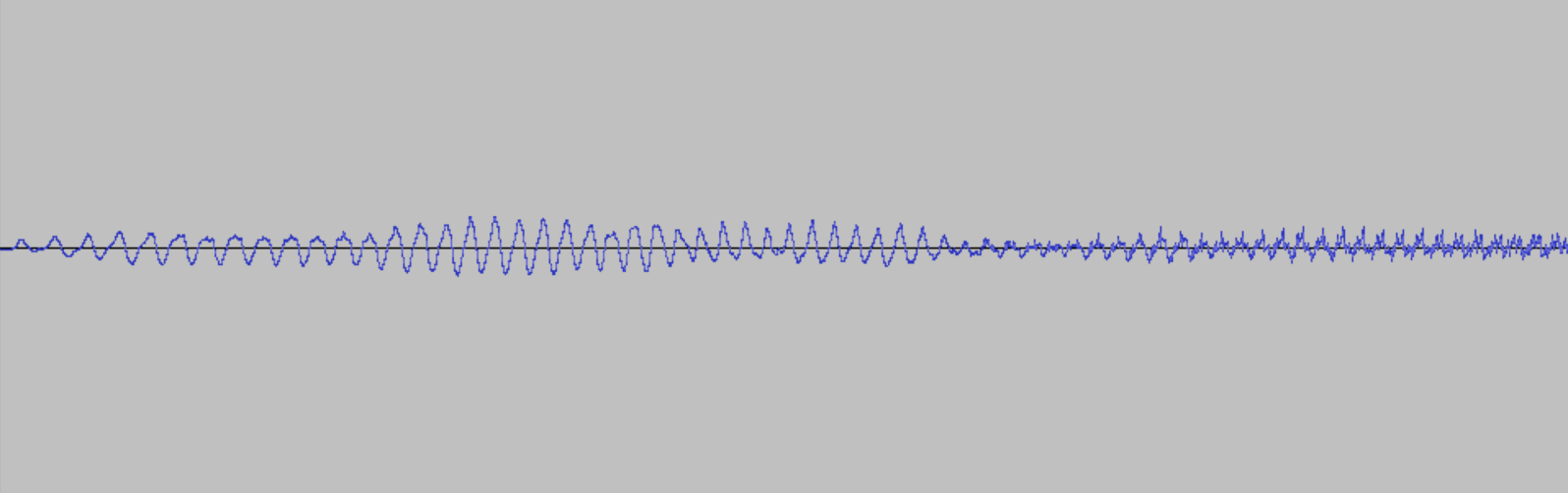

This sense of "settling into" is an important feature of the instrument. In the close-up of the waveform, the three elements are clearly distinguishable. The popping sound (from 0.1 to 0.3 seconds) is most likely an artifact of the digital processing element of the setup.

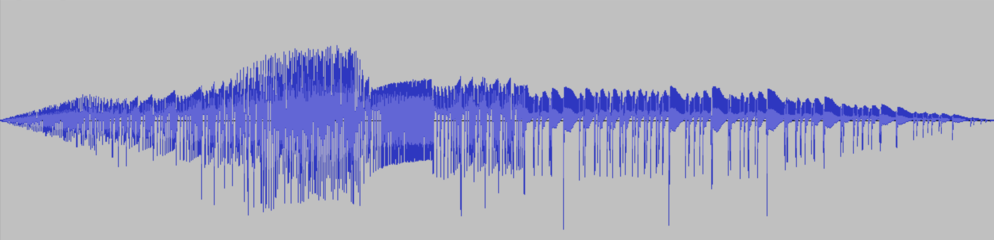

As a performance begins, the music information retrieval section of the code switches abruptly from analyzing silence to analyzing sonic input. This causes a swift change in the parameter space of the granular delays and filters, which can sometimes lead to a percussive sound. As the volume increases, the oscillators begin to entangle. For a fraction of a second, the instrument produces a sound as if it is winding up, and the frequency rapidly sweeps upward.

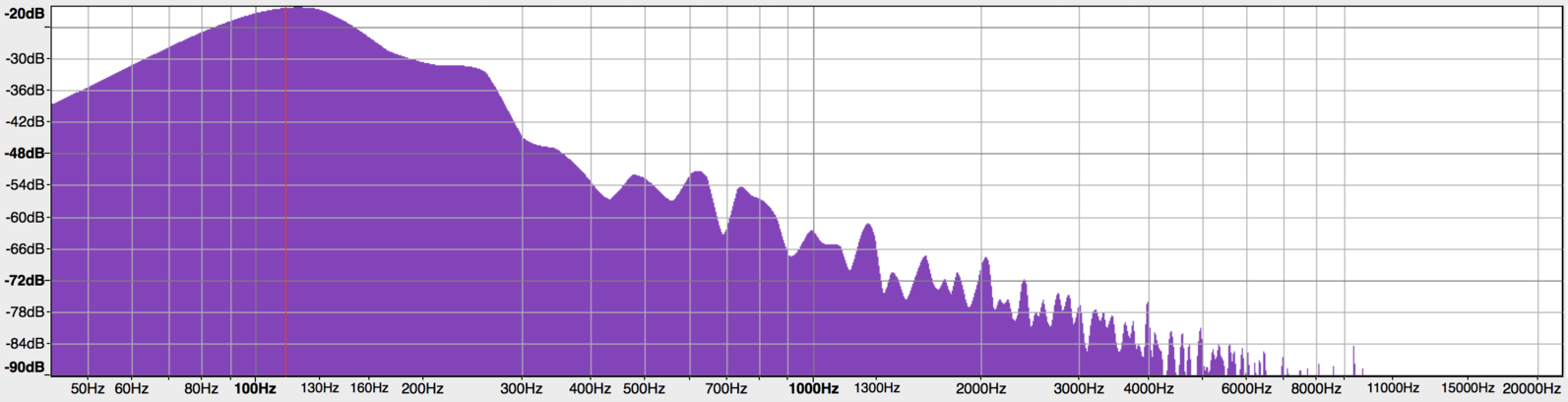

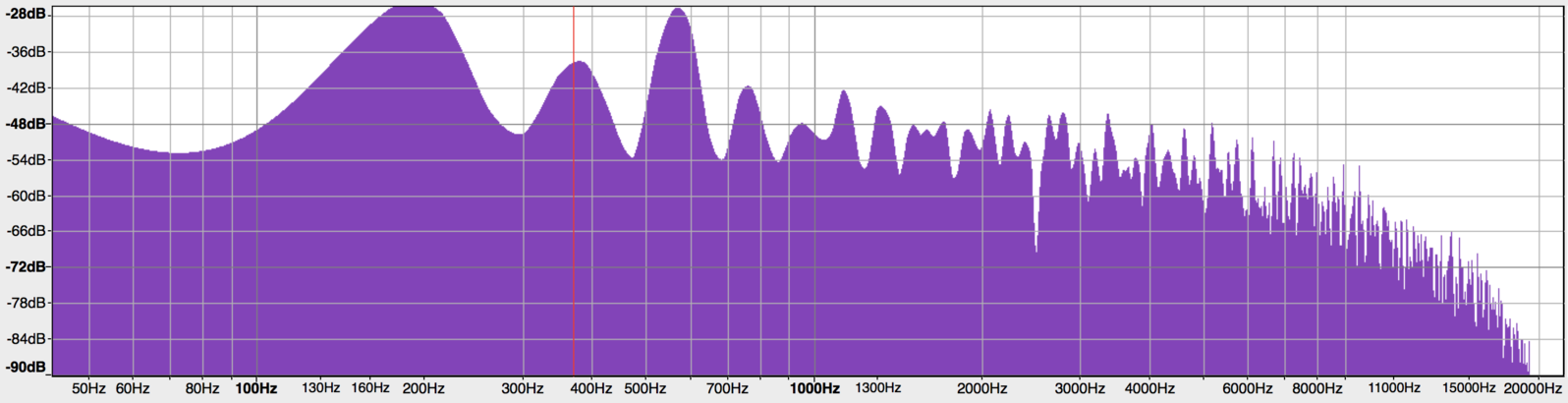

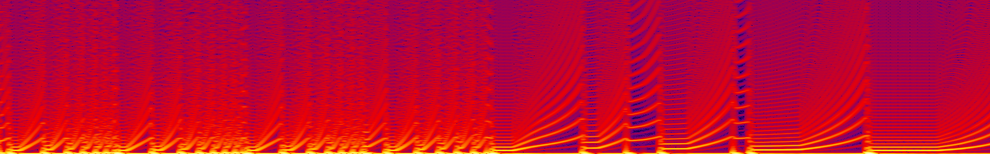

Zooming in even further, just on this winding up sound, it becomes clear that there are some additional processes at play. The fundamental frequency ramps up from roughly 113 Hz to 192 Hz, but as the waveform progresses, its timbral complexity increases as well.
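To put the size of this sweep in musical terms, the ramp from roughly 113 Hz to 192 Hz can be converted into an equal-tempered interval. This is a rough calculation that takes the two measured values at face value:

```latex
n = 12 \log_2\!\left(\frac{192}{113}\right) \approx 12 \times 0.765 \approx 9.2 \ \text{semitones}
```

In other words, within a fraction of a second the fundamental climbs by slightly more than a major sixth (9 semitones).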

Comparing the spectra at the start and end of this "winding up" clearly shows an increase of activity in the higher end of the sound spectrum, pointing to an increase in complexity and instability as the sound becomes less harmonic.

This is what it looks like when the instrument approaches a tipping point, as it moves towards a phase transition. As a listener, only the ramping up of the frequency is noticed at first. The increase of complexity sounds like a slight increase in the noisiness within the signal. In reality, a renegotiation takes hold, as the modulations among the oscillators clash and enmesh, forming a new temporary stability. In this example, this renegotiation happens very quickly, too fast to fully process and perceive. Before the sweeping sound is registered, the instrument has already moved on to the next sonic behavior.

Eventually, the winding sound spirals outwards and settles into a more complex form of balance. While there are some fleeting melodic movements to decipher, the sound is warped by rapid, upward glissandi, functioning almost as an exaggerated vibrato. As the instrument settles into a complex, shifting, drone sound, a fragile sense of stability emerges. At least, the sound appears stable enough to be influenced without fully collapsing or tipping into vastly different behaviors.

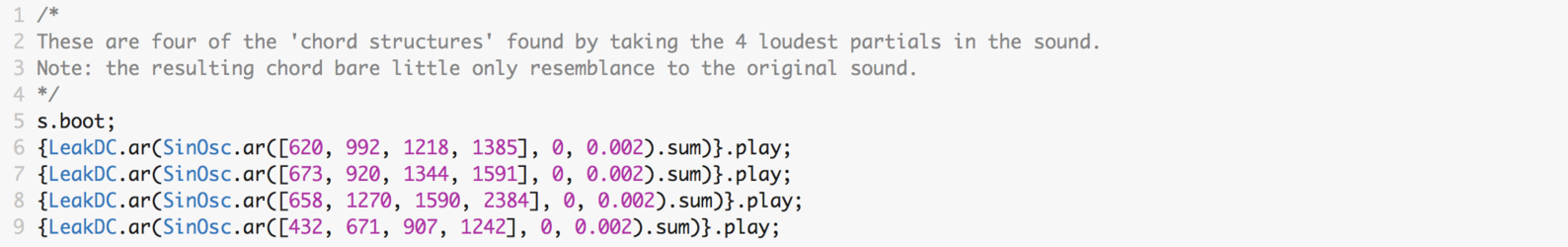

A decision is made, allowing the instrument to sound with only minor performative interferences, knowing that even the smallest touch could destabilize and wipe out the current behavior. For now, it sounds as though the synth is rapidly jumping back and forth between two or more "chord structures," which, from a morphological perspective, could be seen as the gestural aspect of the sound, although these structures are themselves microtonal and heavily distorted.

SuperCollider code showing the four loudest frequency partials of the chord structures (listed in the square brackets [] in hertz).

The textural aspect of the sound is equally, if not more, important. The sonic quality is best described as vibrating, which is likely due to a low frequency modulation of one of the oscillators. Within this section, the motion of the sound could be described as multidirectional, as there are multiple aspects within the sound that are undergoing their own developments. The chords seem to wander and change every two seconds or so, while the speed of the vibrato changes as well. In the way that these elements interfere with one another, the sonic behaviors could be described as turbulent and granular, as if the vibrato chops up the otherwise slower movements. As the section continues, the vibrato accelerates and decelerates, adding another layer of flux.

As a performer, especially within these first moments leading into a piece, there are many split-second decisions that need to be made. First, there is the act of listening. Already within these first few seconds, there is a wealth of sonic activity to attend to. This is the first encounter with the soundworld of this particular performance, and depending on the sonic qualities that are present, there is a choice to either let the instrument take the lead, or to influence the sonic behaviors into different unforeseen trajectories. In these types of sections, when the sound is present for long enough to be experienced as a norm, an imaginative form of wondering takes shape, querying the possibility space for the sound to mutate and evolve.

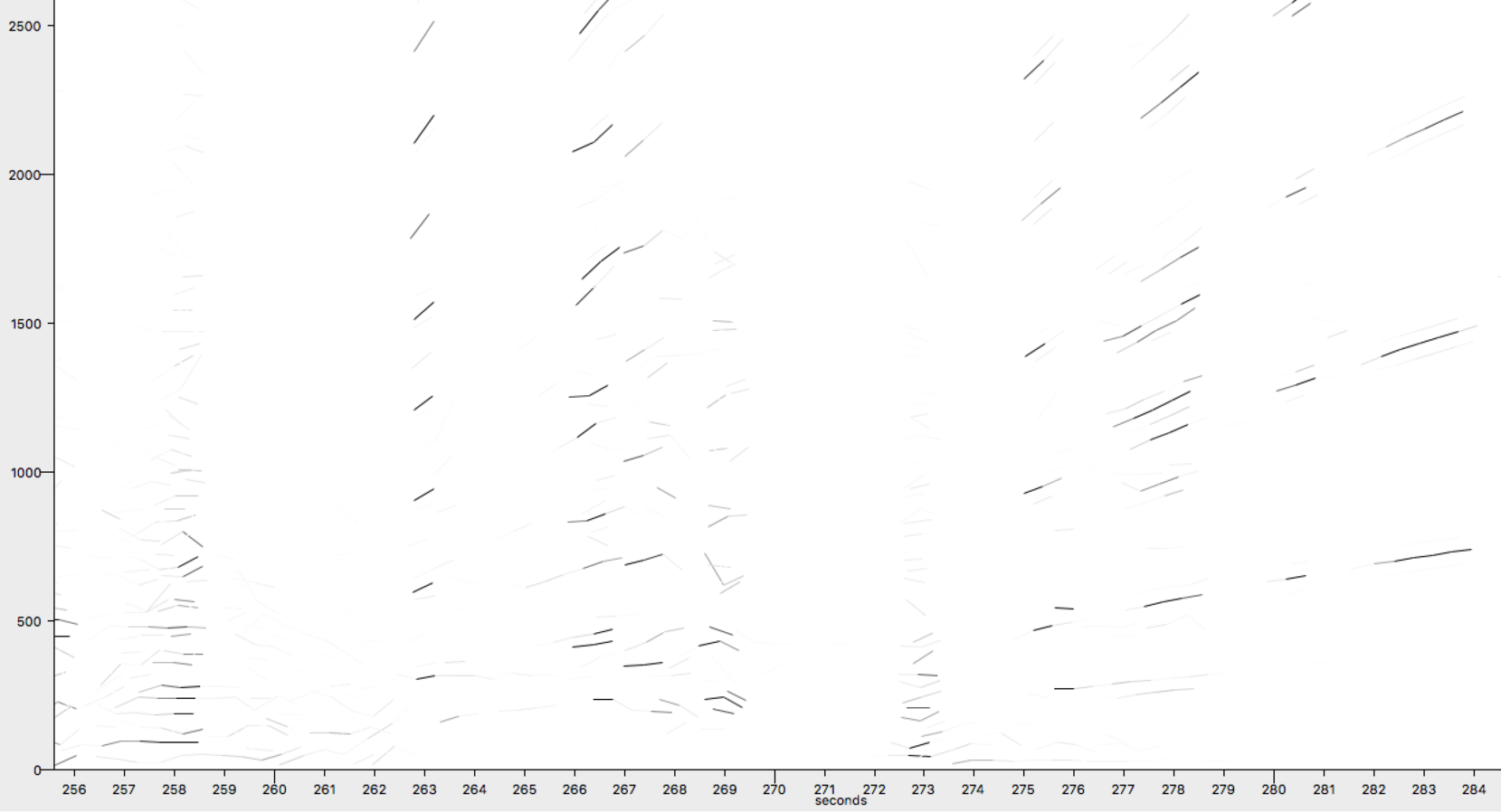

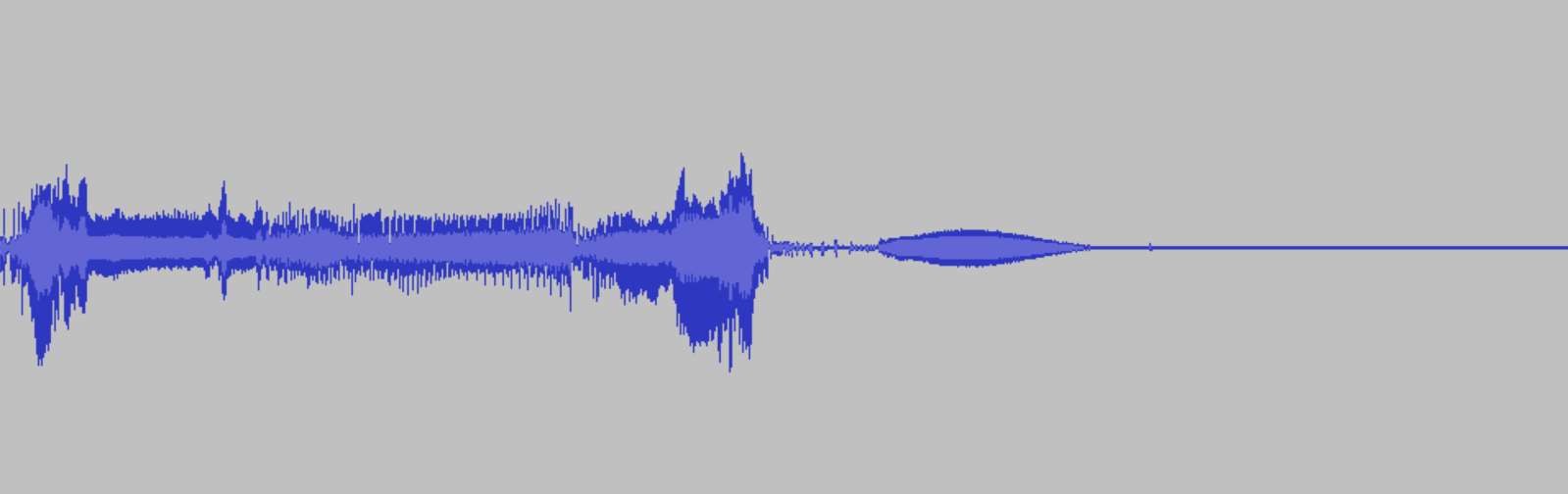

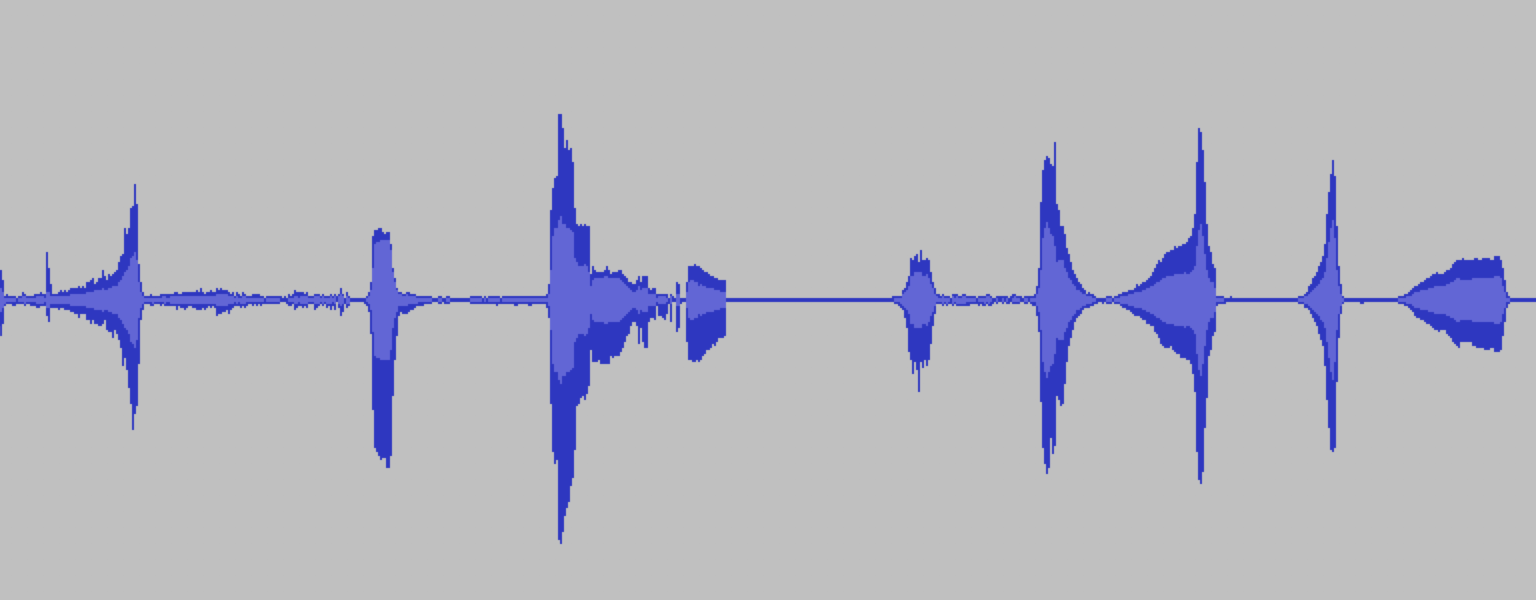

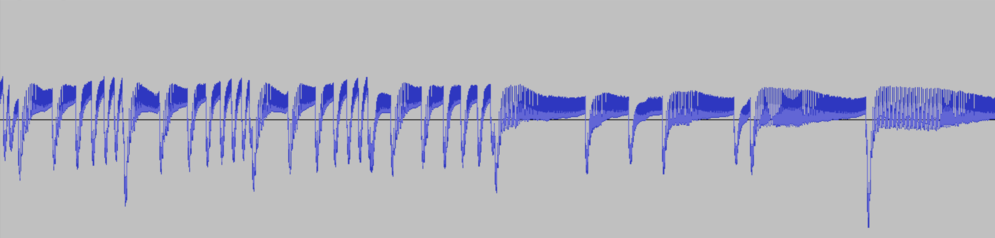

Two-thirds in, the densely stretched qualities of the piece make way for a section that sounds much more sparse and open. This section is achieved by cutting away the main volume of the analog synth, allowing the digital processing to sound out, until another burst of sound is added. Some of the bursts seem to have a quality of becoming spectrally stretched out, as higher partials make a steeper, upwards glissando compared to the lower fundamentals. Because the bursts of sound from the analog synth are so short, it is impossible to know in advance what sonic material will emerge. Each burst causes the digital processes to respond in a different manner. Sometimes only a reverb is heard, while at other times the granular delay sputters along, extending the phrase of the incoming signal. This style of performing creates a contrast to the drones, and other dense and stretched sonic behaviors, that the instrument produces. The technique could be seen as a call and response, in which the analog synth and the digital processing are engaged in dialog.

The ending of the piece is abrupt, cutting out the sound of the analog synthesizer and letting the digital manipulations sound out. I often use this type of ending when performing with chaotic instruments, influencing them toward a tipping point, increasing the intensity, only to suddenly pull the plug. The chaotic processes that drive the instrument have a tendency to keep producing variety, and if left unchecked, performances could last many hours. Ending a performance is therefore an important artistic decision. In this particular recording, the final minutes of the piece are rather hectic. First, there is a drone, consisting of two frequencies, roughly a minor third apart. This interval changes to roughly a major second, and eventually bounces back and forth between these modalities. This movement is interrupted by four short bursts, acting as a quote from earlier in the piece. Then the piece launches into its last unstable phrase, as pictured above. These last sounds convey most of the sonic behaviors that are present in the whole piece, condensed down to about seventeen seconds. In a way, it points to the notion of self-similarity. All of the sounds and all of the modulations that happen within the piece are in some way related to the sawtooth waveform. Zooming in even further confirms this. When examining just a single oscillation, the spiky and whimsical movements within the waveform are a testament to the nonlinear ways in which the oscillators are affecting one another.

This is why there is a tendency for frequencies to gradually rise and suddenly jump back down. Rhythms are formed as sounds fade in and suddenly turn silent. Even the passages that are the most obscured and complex still feature a nasal and piercing reminder of what is happening underneath the faceplate of the instrument. The shape of the sawtooth, pushed to its chaotic extremes, reveals the particular sonic vocabulary of this instrument.

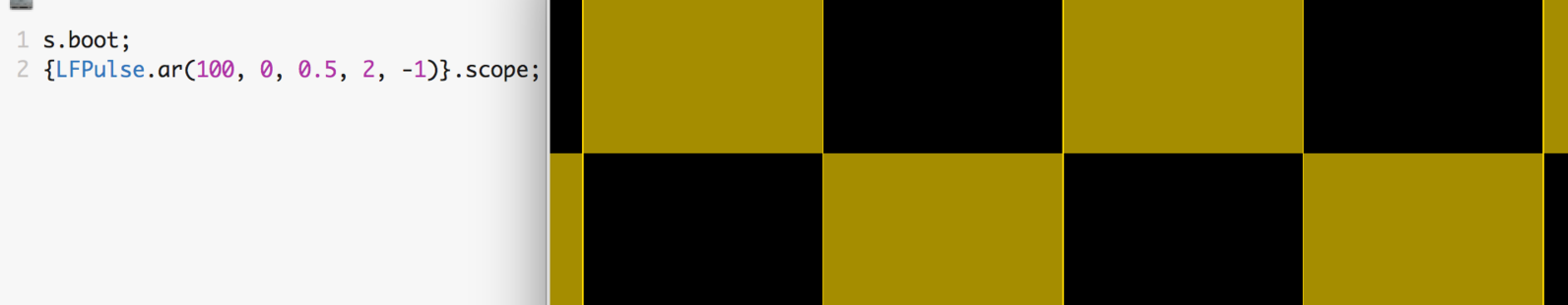

The OMSynth enables the user to tinker around with circuitry and playfully explore the possibilities of creating analog synthesized sounds using some very basic integrated circuits and a handful of electronic components. Furthermore, by adding only a few jumper wires, the flow of electricity can be routed from outputs back into their corresponding inputs, quickly turning simple oscillators into chaotic processes by establishing nonlinear feedback loops. One of the easiest oscillators to build is a square wave oscillator, requiring only three components and a few jumper wires on the OMSynth. The pulse wave consists of an oscillation between only two different states, high or low.

Using this high or low signal to modulate its own input yields results that, although interesting in their own right, lack the sonic complexity that can be created when continuous signals are modulating one another. With the addition of only a few more components, the square wave signal can be converted into a sawtooth signal.

Multiple Minds (Solo)

Multiple Minds is the title of the performance that is developed around the ATOX synthesizer in combination with its digital sonic and visual counterparts. This work was presented as a performance paper at the International Conference on Live Interfaces (ICLI) in 2020.

This paper will form the basis for the presentation of the work, allowing me to diffract relevant thought-processes at the time that the work was developed, with my current insights. This form of second order reflection is meant to document both the work itself, and the development of the reflections around the work.

“‘Multiple Minds’ is an audio-visual performance that poses questions about the origins of the creative mind behind the sonic behaviors and images that are produced. [...] The combination of a broad sonic lexicon combined with unpredictability suggests that the instrument can be interpreted as having its own form of agency, acting independently from the intentions of the performer.”

The intricate relationship between mind, intent and agency lies at the heart of Multiple Minds. A question is posed: Does the instrument have a mind of its own, contributing to the artistic expressions that are experienced? The terms mind, intent, and agency require some unpacking. Each of these terms can easily be attributed to human beings, but when it comes to technology the question becomes more ambiguous. For the purposes of the performance, mind could be seen as that which revolves around remembrances, experiences and imaginations. The interferences between how things were (recalled, in memory), how they are (sensed, in perception), and how they might become (expected, in anticipation) enable the production of intentions (desiring specific outcomes). Agency is the ability to influence the perceived world in such a way that the likelihood of these intentions coming to pass will increase.

It may prove to be a worthwhile exercise to examine these overly simplified notions in relation to the instrument that is developed. First, it can be stated that the instrument possesses forms of memory. Due to the recursive nature of cross-modulation, the current output of the instrument is always based on its prior state. Furthermore, the granular delay actually stores fragments of sound in memory, to be reused later on. It can also be stated that the instrument has a rudimentary capability of experiencing. The instrument responds to the changes made to the knobs and faders. The audio analysis aspect of the code could be said to be listening to the sonic behaviors. This analysis is then processed and used to alter the parameter space of the digital manipulations. However, when it comes to imagination, things become more difficult. While it can be said that the instrument behaves according to its own internal, chaotic logic, this logic is in no way capable of imagining or wondering what may lie ahead. It only processes flows of electronic currents according to its present conditions, but it does not develop a burning desire for things to evolve in any particular way. Even though the instrument displays a wide variety of sonic behaviors by activating the speakers to resonate in intricate patterns, it cannot be said to have developed a curiosity toward the emerging music.

“Although there may be only one human performer present, the situation feels much more like playing a duet. The instrument poses sonic suggestions, and reacts to each touch according to its own determination. With each iteration the instrument functions more and more as an actant technology, capable of responding to performative gestures in perhaps disobedient, but nonetheless intricate ways.”

While playing with the instrument, it does feel like there is a ghost in the machine. This ghost does not come about through a kind of mind or intelligence, but is rather implied due to the unpredictable nature of the sonic behaviors. Because it is impossible to foresee what the response of the instrument will be, it is tempting to project a sense of animacy onto it, as if it has its own temperament, character, or mood. Within the context of chaotic instruments, this kind of projection is rather common. The researcher and scholar John Granzow reflects on this propensity to imagine that there is a mind at work in his paper "Encounterpoint."

“Perceived as an autonomous agent negotiating adjacent actors, our instruments can be conceived as though they were alive. The analogy maps the unpredictable encounter with other life forms onto the sonic output, a stance promoted through our chosen mode of design, performance and listening.”

Even though the instrument lacks the type of mind capable of forming intentions and then acting upon them, the analogy of the instrument as a living entity may still serve a useful purpose. The attempt at establishing dialog with the instrument inspires precisely the form of attention and curiosity that enables the performer to recognize and appreciate the emergent sonic behaviors. The music that comes about is the result of neither the intentions of the instrument nor those of the performer. Instead, the music emerges through the correspondences that happen when both are engaged in play.

“The actual sonic output can only be uncovered through the act of play. This requires that the prior intentions of both the performer and the instrument have to be unfinished and open to the suggestions that are offered through the performance.”

The reflections in the ICLI paper connect these unfinished intentions to the concept of intra-action, developed by Karen Barad, a theoretical particle physicist and professor of feminist studies and philosophy. During the presentation, this concept was adapted to investigate the parameter space of the instrument as an intra-face rather than an interface. This notion was also presented in another performance paper, as part of a seminar on The Idea of Feedback in Music organized by the Orpheus Institute (BE) in collaboration with the University of Canterbury (UK):

“Another way to approach the relationship between the performer and the instrument is to take cues from the writing of Karen Barad, and to interpret the music and visuals as the results from the playful intra-actions between the performer and the instrument, each embodying their own form of artistic agency.”

Again, the important thing to stress in these reflections is their usefulness within the artistic practice. Using the term interface can cause confusion due to the common assumption that the interactions performed through interfaces connect intentions to outcomes. With chaotic instruments, however, a change in the conditions of the chaotic process can set in motion a whole range of interconnected ramifications that are impossible to foresee from the outset. The performer may have some intention in mind when a knob is turned, but there is no guarantee that the instrument responds in line with the desired outcome. Speaking of intra-actions performed through an intra-face serves as a reminder that whatever the sonic behaviors end up becoming, they should never be taken for granted, as their formation is always mired in fragilities and uncertainties.

My current thinking does place some question marks around the idea that the agency of the instrument is artistic in nature. It seems to me that there may be a conflation between the appreciation of the art that emerges through play, and the notion that the activity of the instrument is artistic. As an analogy, a waterfall may be astonishingly beautiful to observe, even though the water currents and rock formations that give rise to the waterfall are not in the least bit occupied with the way it looks when viewed from any particular angle. It is easy to anthropomorphize the behaviors of the instrument and to project artistic incentives upon their operations, but in the end, it is up to the attentive listener to recognize the qualities in the sonic behaviors that emerge from the instrument. The sonic behaviors become music when they evoke the curious mind.

“Chaotic instruments refuse to repeat the same thing twice, turning rehearsals into sonic explorations, driven by curiosity rather than virtuosity. The instrument is responding to the performer as much as the performer responds to the instrument. The act of playing the instrument changes the instrument.”

While it could be said that this statement, “The act of playing the instrument changes the instrument,” holds true for most instruments, in the context of an instrument centered around chaotic processes there is a vast difference in degree regarding how much the instrument changes, and to what extent this change is irreversible. The change is of such a nature that it may hold the key to explaining why instruments centered around chaos have a tendency to invoke the idea that there is some kind of mind at play. Instruments can usually be trusted to produce similar-sounding sonic behaviors when played in a similar fashion. When this trust is broken, the logical reaction is to search for a reason why. While chaotic behaviors such as turbulence are easily recognized in fluids and vapor, the instruments appear solid and encased in wood, metal, and hard plastics. Underneath that solid enclosure, electricity sloshes through the circuits, splashing its electrons back and forth like turbulent vortices. Changing a setting on one of the knobs translates to a reconfiguration of the conditions upon which the chaotic process is built. A new set of relations, correspondences, and behaviors emerges; a new instrument to be explored in wonder.

“The instrument doesn’t merely execute instructions, instead, it processes performative input through its own chaotic logic. ‘Multiple Minds’ requires a deep and unaverted attention by the performer, who can not fully rely on the knowledge gained through prior experiences. The instrument has to be explored and discovered anew each time it is performed. The music of the piece itself begs to be uncovered, examined, inspected, extended, diminished, and concluded. Performances take the shape of sonic expeditions where instrument, performer, and audience explore the ever shifting sonic landscapes together, seduced by the tensions and expressions that are encountered along the way.”

In addition to the performances at the ICLI and at the University of Canterbury, Multiple Minds has also been performed as part of the Grieg Research School (GRS) in Knut Knaus at the KMD in Bergen, and at the Blue Rinse Concert series at Lydgalleriet in Bergen. Due to corona-related restrictions, the piece has not been performed since.

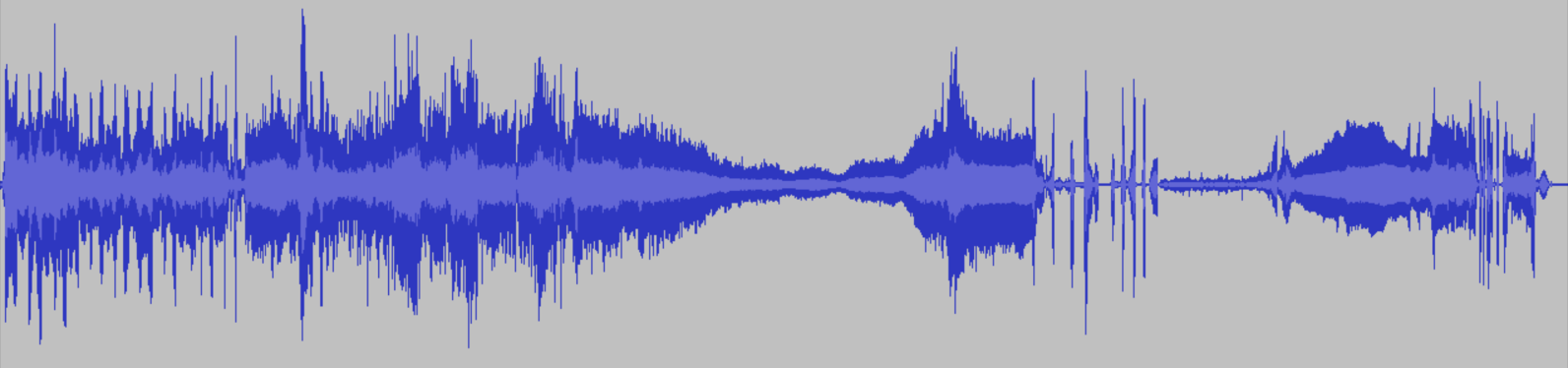

This recording was made in August of 2019, in my studio, while exploring the sonic vocabulary of the instrument. The recording starts with a pronounced popping sound, after which the instrument accelerates and settles into a dense, yet shifting, drone passage.

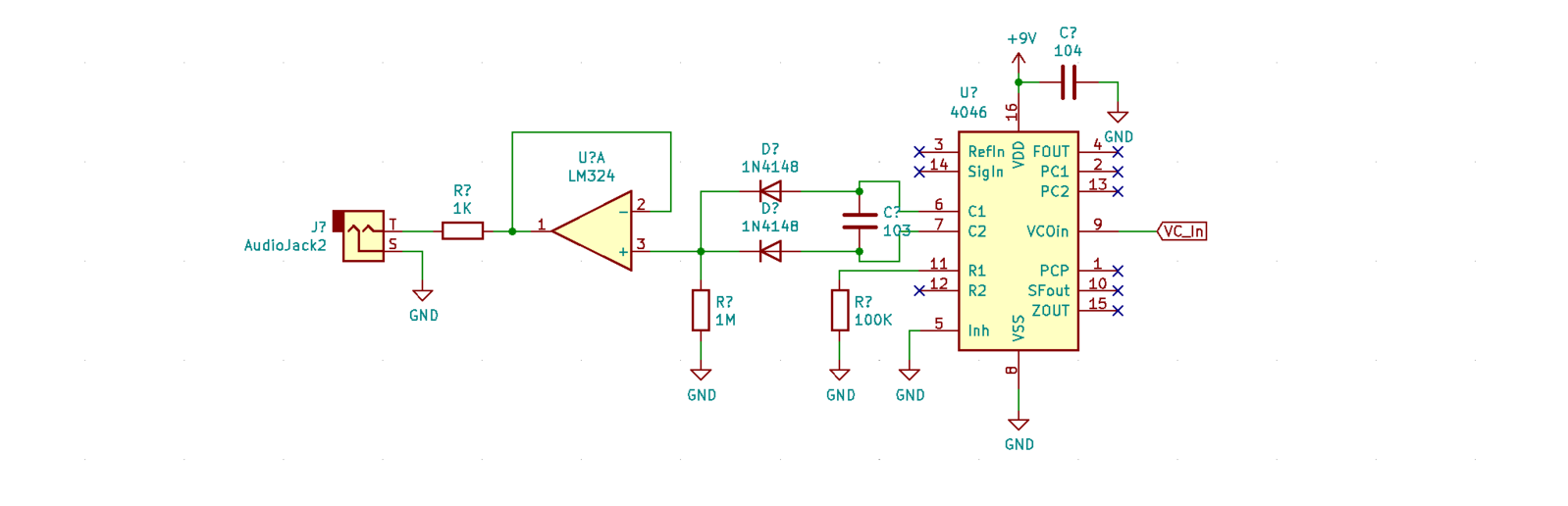

Another experiment involved processing the output of the ATOX synthesizer through software that was developed for an earlier project entitled Ecdysis. This software was capable of elaborate transformations of the sound of the ATOX synthesizer. On the downside, a performative issue presented itself: while playing with the combined setup, my focus often drifted toward influencing the software rather than the source material. Eventually, a middle ground was found, and the choice was made to digitally process the sonic output of the ATOX synthesizer. In addition to the main output, each individual oscillator also had its own output. These four channels of sound became the input of a program, written in SuperCollider, that analyzed the sonic behaviors of the individual oscillators, and used this analysis to change the settings of audio manipulations in the form of granular delay lines, a waveshaper, and a filter bank. Because the software responded directly to the sounds of the ATOX synthesizer, the sonic behaviors became much more elaborate without requiring additional hardware to control the code.

This approach, in which data obtained through audio analysis is used to influence the parameter space of the code, recurs on several occasions throughout the research.

A schematic of the full instrumental setup including the ATOX synthesizer, code, sound, and visuals.

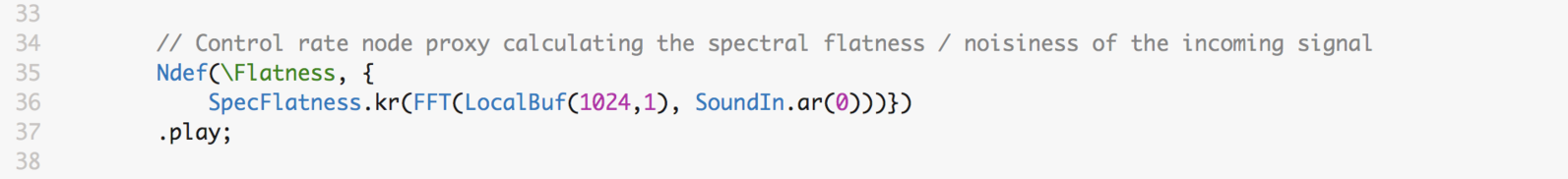

SuperCollider code showing the "Ndef" used for calculating the "Spectral Flatness" of the incoming signal. The resulting values range between 0.0 (sinusoid) and 1.0 (noise). In SuperCollider, Ndefs can be used directly inside of other Ndefs, making it easy to rescale and map the sound analysis onto various parameters within the code.
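For readers without access to the SuperCollider patch, the idea behind this measure can be sketched in Python. This is an illustration and not the code used in the work: it assumes the common definition of spectral flatness as the geometric mean of the power spectrum divided by its arithmetic mean, and the function names, the frame size, and the cutoff range used in the mapping example are hypothetical.

```python
import cmath
import math


def power_spectrum(frame):
    """Power per frequency bin of one frame (naive DFT, DC and Nyquist skipped)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) ** 2
            for k in range(1, n // 2)]


def spectral_flatness(frame, floor=1e-12):
    """Geometric mean of the power spectrum divided by its arithmetic mean.

    Close to 0.0 when the energy sits in one bin (a sinusoid), and 1.0 when
    all bins carry equal energy (a perfectly flat spectrum).
    """
    power = [p + floor for p in power_spectrum(frame)]  # floor avoids log(0)
    geometric_mean = math.exp(sum(math.log(p) for p in power) / len(power))
    arithmetic_mean = sum(power) / len(power)
    return geometric_mean / arithmetic_mean


def map_linear(value, out_min, out_max):
    """Rescale an analysis value in the range 0..1 (clipped) to a parameter range."""
    value = min(max(value, 0.0), 1.0)
    return out_min + value * (out_max - out_min)


n = 128
sine = [math.sin(2 * math.pi * 8 * t / n) for t in range(n)]
impulse = [1.0] + [0.0] * (n - 1)   # a single click has a flat spectrum

# Hypothetical mapping: flatter (noisier) input opens a filter further.
cutoff_for_sine = map_linear(spectral_flatness(sine), 200.0, 2000.0)
cutoff_for_click = map_linear(spectral_flatness(impulse), 200.0, 2000.0)
```

The impulse is used here as a deterministic stand-in for the "noise" end of the scale. A short frame of actual random noise will typically measure below 1.0, because its spectrum is only flat on average.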

This is a recording of the ATOX synthesizer as an input to the SuperCollider patch for the Ecdysis performance. The software is able to process the incoming sound in various ways: looping; modulation; granular freezing; delays; reverbs; and filtering.

The examples above are excerpts of the concerts played at Prøverommet and Café De Ruimte. The video was made while exploring the sonic vocabulary of the ATOX synthesizer.

From 0 to 19 seconds.

In this excerpt, the ATOX synthesizer is influenced to exhibit a variety of behaviors. Each of these behaviors has different sonorous qualities. They are also highly sensitive, and a mere performative touch will destabilize them. Zooming in on one of these moments of change shows how the instrument fluidly changes from one behavior to the next:

From 9 to 11 seconds.

The waveform clearly shows the change from a texture (roughly seven impulses, ramping up) to a more rhythmical section, jumping back and forth between longer and shorter tonal stabs:

261 Hz (long), 453 Hz (short), 1064 Hz (short), 391 Hz (long), 1205 Hz (short), 310 Hz (long), 267 Hz (long).

The spectrogram shows that there is a timbral quality (an upward glissando) that remains present both before and after the change, resulting in a sense of consistency. The glissandi are an artifact of the main building block of the instrument, the sawtooth oscillators.